Hand Gestures And Speech Recognition System For Deaf Dumb

A gesture includes any bodily motion or state, particularly any hand or face motion [10]. In our system implementation, gestures will be recognized using the Leap Motion Controller. These gestures will be hand motions, so the drone can be controlled with simple gestures of the human hand. 2 REVIEW OF .

speech recognition plays an important role at present. Using a speech recognition system not only improves the efficiency of daily life, but also makes people's lives more diversified. 1.2 The history and status quo of Speech Recognition Research on speech recognition technology started in the 1950s. H. Dudley who had

Title: Arabic Speech Recognition Systems Author: Hamda M. M. Eljagmani Advisor: Veton Këpuska, Ph.D. Arabic automatic speech recognition is one of the difficult topics in current speech recognition research. Its difficulty lies in the scarcity of research on Arabic speech recognition and of data available for experiments.

developer GUI application was created to sample sensor data; create and capture custom gestures; classify hand gestures with a support vector machine and output text and speech; and show a hand animation that displays the user's hand gestures. All of the GUI features were developed with multithreading

speech or audio processing system that accomplishes a simple or even a complex task—e.g., pitch detection, voiced-unvoiced detection, speech/silence classification, speech synthesis, speech recognition, speaker recognition, helium speech restoration, speech coding, MP3 audio coding, etc. Every student is also required to make a 10-minute

The task of speech recognition involves mapping a speech signal to phonemes and words. Such a system is more commonly known as a "Speech to Text" system. It can be text independent or text dependent. The problem in recognition systems that use speech as input is the large variation in signal characteristics.

Speech Recognition Helge Reikeras Introduction Acoustic speech Visual speech Modeling Experimental results Conclusion Introduction 1/2 What? Integration of audio and visual speech modalities with the purpose of enhancing speech recognition performance. Why? McGurk effect (e.g. visual /ga/ combined with an audio /ba/ is heard as /da/)

Hand Gesture Recognition using Deep Learning 2 Abstract Human Computer Interaction (HCI) is a broad field involving different types of interactions including gestures. Gesture recognition concerns non-verbal motions used as a means of communication in HCI. A system may be utilised to identify human gestures to convey

Motion tracking serves as gesture feature extraction: it forms the motion track of the gesturing hand to express meaningful gestures. The gesturing hand is detected in each frame, and its center point is used to track the movement. The recognition rate of gestures will depend heavily on the reliability of this .
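A minimal sketch of the center-point tracking described in this excerpt, assuming each frame has already been segmented into a binary hand mask. The segmentation step, the input format, and the names `hand_center` and `motion_track` are hypothetical and not taken from the source.

```python
from typing import List, Optional, Tuple

# Assumed input format: one 2D grid per frame, 1 = hand pixel, 0 = background.
Mask = List[List[int]]


def hand_center(mask: Mask) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of the hand pixels, or None if no hand is found."""
    sum_x, sum_y, count = 0, 0, 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return sum_x / count, sum_y / count


def motion_track(frames: List[Mask]) -> List[Tuple[float, float]]:
    """Collect the hand center of every frame in which a hand was detected."""
    track = []
    for mask in frames:
        center = hand_center(mask)
        if center is not None:
            track.append(center)
    return track


frames = [
    [[1, 1, 0, 0], [1, 1, 0, 0]],
    [[0, 1, 1, 0], [0, 1, 1, 0]],
    [[0, 0, 0, 0], [0, 0, 0, 0]],  # hand not detected in this frame
    [[0, 0, 1, 1], [0, 0, 1, 1]],
]
track = motion_track(frames)  # hand moving left to right
```

Frames without a detection are skipped here; a real tracker might instead interpolate or reset the track, which is one reason recognition depends on reliable detection.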

Speech Enhancement Speech Recognition Speech UI Dialog 10s of 1000 hr speech 10s of 1,000 hr noise 10s of 1000 RIR NEVER TRAIN ON THE SAME DATA TWICE Massive . Spectral Subtraction: Waveforms. Deep Neural Networks for Speech Enhancement Direct Indirect Conventional Emulation Mirsamadi, Seyedmahdad, and Ivan Tashev. "Causal Speech

For the analysis of the speech characteristics and speech recognition experiments, we used a Lombard speech database recorded in the Slovenian language. The Slovenian Lombard Speech Database (Vlaj et al., 2010) was recorded in a studio environment. In this section, the Slovenian Lombard Speech Database is presented in more detail. Acquisition of raw audio

To reduce the gap between performance of traditional speech recognition systems and human speech recognition skills, a new architecture is required. A system that is capable of incremental learning offers one such solution to this problem. This thesis introduces a bottom-up approach for such a speech processing system, consisting of a novel .

speech data is a major stumbling block towards creating such a speech recognition service. To overcome this, it is desirable to have a privacy preserving speech recognition system which can perform recognition without having access to the speech data.

ow and speech recognition toolkit Kaldi. After reproducing state-of-the-art speech and speaker recognition performance using TIK, I then developed a unified model, JointDNN, that is trained jointly for speech and speaker recognition. Experimental results show that the joint model can effectively perform ASR and SRE tasks. In particular, ex-

2.3 Hand Gesture Input The use of hand gestures to communicate information is a large and diverse field. For brevity, we will reference the taxonomy work of Karam and Schraefel [23] to identify 5 types of gestures relevant to human-computer interaction: deictic, manipulative, semaphoric, gesticulation, and language gestures [24].

Introduction 1.1 Overview of Speech Recognition 1.1.1 Historical Background Speech recognition has a history of more than 50 years. With the emergence of powerful computers and advanced algorithms, speech recognition has made a great deal of progress over the last 25 years. The earliest attempts to build systems for

Speech Recognition is Sequential Pattern Recognition Signal Model Generation Pattern Matching Input Output Training Testing Processing Goal: recognise the sequence of words from time waveform of speech. Two phases: Training (learning) and Testing (recognition) Samudravijaya K TIFR, samudravijaya@gmail.com Introduction to Automatic Speech .
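The two phases named in this excerpt can be illustrated with a toy template matcher. This is only a sketch under stated assumptions: it uses dynamic time warping (DTW) on one-dimensional feature sequences and recognises isolated words, which is a swapped-in technique and not necessarily the one the slides go on to describe. The function names and feature values are hypothetical.

```python
from typing import Dict, List, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Dynamic time warping distance between two 1-D feature sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three allowed alignment moves.
            cost[i][j] = local + min(
                cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1]
            )
    return cost[n][m]


def train(examples: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Training phase: store one reference template per word."""
    return {word: list(features) for word, features in examples.items()}


def recognise(templates: Dict[str, List[float]], features: Sequence[float]) -> str:
    """Testing phase: return the word whose template is closest under DTW."""
    return min(templates, key=lambda word: dtw_distance(templates[word], features))


templates = train({"yes": [1.0, 3.0, 1.0], "no": [4.0, 4.0, 0.0]})
# A slower utterance of the "yes" pattern; DTW absorbs the timing difference.
result = recognise(templates, [1.0, 1.0, 3.0, 3.0, 1.0])
```

The warping step is what makes this sequential pattern matching: the test utterance has five frames while the template has three, yet the two still align.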

translation. Speech recognition plays a primary role in human-computer interaction, so speech recognition research has essential academic value and application value. Speech recognition refers to the conversion from audio to text. In the early stages of the research work, since it was impossible to directly model the audio-to-text con-

Nuance, the developer of Dragon NaturallySpeaking (one of the most common speech recognition programs), claims that users of speech recognition software can write three times faster than most people can type. Speech recognition potentially offers particular benefits for learners with additional support

18-794 Pattern Recognition Theory: speech recognition; optical character recognition (OCR); fingerprint recognition; face recognition; automatic target recognition; biomedical image analysis. Objective: To provide the background and techniques needed for pattern classification. For advanced UG and starting graduate students. Example Applications:

* Correspondence: wu.bowen@irl.sys.es.osaka-u.ac.jp (B.W.); carlos.ishi@riken.jp (C.T.I.) Abstract: Co-speech gestures are a crucial, non-verbal modality for humans to communicate. Social agents also need this capability to be more human-like and comprehensive. This study aims to model the distribution of gestures conditioned on human speech .

Arabic letter recognition. In this paper, we aim to design an ArSL recognition system that captures the ArSL alphabet gestures from an image in order to recognize automatically the 30 gestures displayed in Fig. 1. More specifically, we intend to investiga

Sitting postures for pranayama sadhana: 1. Easy pose 2. Half-lotus 3. Swastikasana 4. Siddha yoni asana Week 3 Mudras (Yoga Gestures) The practice of Mudra hand gestures is an ancient facet of yoga. Performing gestures affects the energy flow of the body and can change a person’s spiritual and mental characteristics. Jnana mudra Chin mudra

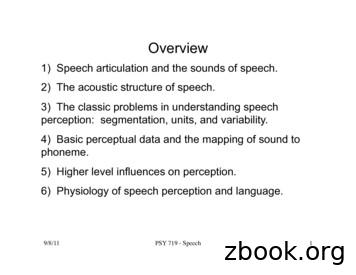

PSY 719 - Speech (9/8/11). Overview 1) Speech articulation and the sounds of speech. 2) The acoustic structure of speech. 3) The classic problems in understanding speech perception: segmentation, units, and variability. 4) Basic perceptual data and the mapping of sound to phoneme. 5) Higher level influences on perception.

Part 2 – Speech Therapy. Page updated: August 2020. This section contains information about speech therapy services and program coverage (California Code of Regulations [CCR], Title 22, Section 51309). For additional help, refer to the speech therapy billing example section in the appropriate Part 2 manual. Program Coverage

Speech Perception, Chapter 13 (11/16/11). Review session Thursday 11/17 5:30-6:30pm S249. Outline: Speech stimulus / Acoustic signal; Relationship between stimulus & perception; Stimulus dimensions of speech perception; Cognitive dimensions of speech perception; Speech perception & the brain. Speech stimulus

Speech SDK, including features of the web service and client libraries. 2.1 Speech API Overview The Speech API provides speech recognition and generation for third-party apps using a client-server RESTful architecture. The Speech API supports HTTP 1.1 clients and is not tied to any wireless carrier. The Speech API includes the following web .

Voice Activity Detection. Fundamentals and Speech Recognition System Robustness. Figure 1. Speech coding with VAD for DTX. 2.2 Speech enhancement Speech enhancement aims at improving the performance of speech communication systems in noisy environments. It mainly dea

that, the spectral subtraction algorithm improves speech quality but not speech intelligibility [2]. Consequently, in this research work, the most recent . namely, speech or speaker recognition, speech coding and speech signal enhancement. By using only a few wavelet coefficients, it is possible to obtain a

Xcode 7 for iOS and connected it to a state-of-the-art speech recognition system, Baidu Deep Speech 2 [1]. The speech recognition system runs entirely on a server. As we were connected to Stanford University's high-speed network, there was no noticeable latency between the client iPhone and the speech server.

speech recognition which captures the electric potentials that are generated by the human articulatory muscles. EMG speech . and classified of sub-vocal speech. 1. INTRODUCTION Human speech communication typically takes place in complex acoustic backgrounds with environmental sound sources, competing voices, and ambient noise. .

The Speech Application Programming Interface or SAPI is an API developed by Microsoft to allow the use of speech recognition and speech synthesis within Windows applications. 2. PROPOSED ALGORITHM In this work, there are two main parts: Optical Character Recognition System for Paper Text; Text to Speech Conversion. 2.1.

ferred to as the speech dialog circle, using an example in the telecommunications context. The customer initially makes a request by speaking an utterance that is sent to a machine, which attempts to recognize, on a word-by-word basis, the spoken speech. The process of recognizing the words in the speech is called automatic speech recognition (ASR)

Keywords: Speech Enhancement, Speech Recognition, Spectral Subtraction, Windowing techniques, Noise reduction. I. INTRODUCTION Many systems rely on automatic speech recognition (ASR) to carry out their required tasks. Using speech as its input to perform certain tasks, it is important to

speech recognition technology over the past few decades. More importantly, we present the steps involved in the design of a speaker-independent speech recognition . about in the 1960s and 1970s via the introduction of advanced speech representations based on LPC analysis and cepstral analysis methods, and in the 1980s through the .

pare the recognition of dysarthric speech by a computerized voice recognition (VR) system and non-hearing-impaired adult listeners . Intelligibility "functions" were obtained for six . INTRODUCTION The dysarthrias comprise a group of motor speech disorders that result from damage to the central and/or peripheral nervous system. Dysarthria is .

Introduction 1.1 Background Automatic speech recognition (ASR) describes the task of transcribing the content of a speech recording to written text using computers. The task is traditionally decomposed as two sub-tasks, acoustic modeling (AM) and language modeling (LM), where
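In the standard statistical formulation (a textbook result, not taken from this excerpt), the two sub-tasks appear as the two factors of Bayes' rule. The symbols are assumptions introduced here: $X$ denotes the acoustic observations and $W$ a candidate word sequence.

$$
\hat{W} = \arg\max_{W} P(W \mid X) = \arg\max_{W} \underbrace{P(X \mid W)}_{\text{acoustic model}} \; \underbrace{P(W)}_{\text{language model}}
$$

The denominator $P(X)$ is dropped because it does not depend on $W$ and so does not change the maximiser.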

speech recognition applications, even though a simplified task (digits and natural number recognition) has been considered for model evaluation. Different kinds of phone models have . INTRODUCTION 1.2 Automatic Speech Recognizer An Automatic Speech Recognizer (ASR) is a system whose task is to convert an

Speech emotion recognition is one of the latest challenges in speech processing and Human Computer Interaction (HCI) in order to address the operational needs in real world applications. Besides human facial expressions, speech has proven to be one of the most promising modalities for automatic human emotion recognition.

to speech recognition under less constrained environments. The use of visual features in audio-visual speech recognition (AVSR) is motivated by the speech formation mechanism and the natural ability of humans to reduce audio ambiguity using visual cues [1]. In addition, the visual information provides complementary features that cannot be .