
Proceedings of the World Congress on Engineering and Computer Science 2009 Vol II
WCECS 2009, October 20-22, 2009, San Francisco, USA

A Comprehensive Review and Analysis of Maturity Assessment Approaches for Improved Decision Support to Achieve Efficient Defense Acquisition

Nazanin Azizian, Dr. Shahram Sarkani, Dr. Thomas Mazzuchi

Abstract— Based on multiple reviews of major defense acquisition programs, the Government Accountability Office (GAO) has consistently reported that the Department of Defense (DoD) acquisition programs are experiencing difficulties in terms of schedule slips, cancellations, and failure to meet performance objectives due to insufficiently mature technology, unstable design, and a lack of manufacturing maturity. The GAO claimed that “maturing new technology before it is included in a product is perhaps the most determinant of the success of the eventual product or weapon system.” As a result, the DoD adopted the Technology Readiness Level (TRL) metric as a systematic method to assess technology maturity. However, as a result of increasing complexity of defense systems and the lack of objectivity of the tool, the TRL metric is deficient in comprehensively providing insight into the maturity of technology. Objective and robust methods that can assess technology maturity accurately and provide insight into risks that lead to cost overruns, schedule delays, and performance degradation are imperative for making well-informed procurement decisions.

Realizing this challenge, numerous other models and methods have been developed to efficiently supplement and augment the TRL scale, as well as provide new means of evaluating technology maturity and readiness.
The work presented in this paper has investigated the literature and leading research and industrial practices for technology maturity assessment techniques, which are then analyzed using the SWOT (Strengths, Weaknesses, Opportunities, Threats) model. The right maturity assessment techniques at the right time can enable government agencies and contractors to produce products that are cheaper, better, and made faster by closing knowledge gaps at critical decision points. This paper provides a comprehensive review and analysis of the prominent maturity assessment techniques in order to provide a selection criterion for decision makers to choose the best fit method for their program.

Index Terms—Technology Maturity, Technology Readiness, TRL.

Nazanin Azizian is a doctoral candidate at The George Washington University, Department of Engineering Management and Systems Engineering, Washington DC, 20052. She is also employed as a Systems Engineer by Lockheed Martin, Undersea Systems and Sensors (USS) (phone: 571-214-4055; e-mail: nazanin@gwmail.gwu.edu).
Dr. Sarkani and Dr. Mazzuchi are professor and department chair, respectively, at The George Washington University, Department of Engineering Management and Systems Engineering, Washington DC, 20052 (e-mail: sarkani@gwu.edu; mazzu@gwu.edu).
ISBN: 978-988-18210-2-7

I. INTRODUCTION

Successful parallel development and integration of complex systems result in successful programs. Complex systems are comprised of multiple technologies and their integrations. Due to the scale and complexity of systems, stakeholders want confidence that risk is minimal and the probability of successful technology development and integration is high before investing large sums of money. Confidence is achieved when it is ensured that the developed or improved technologies can meet system requirements.
As a result, quantitative assessment tools that can provide insight into whether a group of separate technologies at various maturity levels can be integrated into a complex system at low risk are beneficial to the success of a program and can help support the decision making of stakeholders.

Tetlay and John [1] call the 21st century “The Systems Century” due to the increasing complexity and high integration of technological products. They contend that assessing system maturity and readiness during the development life-cycle is imperative to the overall success of the system. Tetlay and John [1] argue that in recent years great interest has been taken in metrics such as the Technology Readiness Level (TRL), System Readiness Level (SRL), Manufacturing Readiness Level (MRL), Integration Readiness Level (IRL), and other metrics as avenues to measure the maturity and readiness of systems and technologies. These metrics are used to assess the risk associated with the development and operation of technologies and systems; therefore they are a way of ensuring that the unexpected will not occur.

However, the literature has revealed gaps in clearly specifying whether the objective of the metrics and methods is to measure maturity or readiness. In general, maturity and readiness are used interchangeably. In addition, in most cases the applicability of tools and methods toward a technology versus a system is vague. The literature does not distinguish between maturity and readiness, and rarely specifies whether a method has been designed for a system or a technology. This research will generalize maturity and readiness as one entity and refer to them interchangeably throughout this paper. The objective of this research is to develop a comprehensive review of the methods and tools used to evaluate the maturity and readiness of technology and systems.
Although a technology and a system are not the same, many of the methods described in this paper do not distinguish between them.

Systematically measuring technology and system maturity is a multi-dimensional process that cannot be performed comprehensively by a one-dimensional metric. Although the TRL metric has been endorsed by the government and many industries, it captures only a small part of the information that stakeholders need to support their decisions. This paper presents other maturity assessment methods that have been developed to rectify the shortcomings of the TRL.

II. BACKGROUND

A. Problem Statement

The literature repeatedly notes that acquisition programs experience cost overruns, schedule slips, and performance problems [2-14]. Based on a review of major defense acquisition programs, the Government Accountability Office (GAO) has consistently reported that the Department of Defense (DoD) acquisition programs are experiencing difficulties in terms of schedule slips, cancellations, and failure to meet performance objectives as a result of insufficiently mature technology, unstable design, and a lack of manufacturing maturity. The GAO claimed that “maturing new technology before it is included in a product is perhaps the most determinant of the success of the eventual product or weapon system” [8]. More recently, based on an assessment of 72 weapons programs, the GAO reported in March 2008 [7] that

“none of them had proceeded through system development meeting the best practices standards for mature technologies, stable design, or mature production processes by critical junctures of the program, each of which are essential for achieving planned cost, schedule, and performance outcomes”

Further, the GAO [7] reported that in fiscal year 2007 the total acquisition cost of major defense programs had risen 26% from the initial estimate, while development costs had grown by 40%, and programs had failed to deliver the promised capabilities. The trends in cost and schedule growth in these defense programs over the years are depicted in figure 1.

Analysis of DOD Major Defense Acquisition Program (Fiscal year 2008)

Fiscal Year                                         2000 Portfolio   2005 Portfolio   2007 Portfolio
Number of Programs                                  75               91               95
Total Planned Commitments                           790 Billion      1.5 Trillion     1.6 Trillion
Commitments Outstanding                             380 Billion      887 Billion      858 Billion
Portfolio Performance
Change to total RDT&E costs from first estimate     27%              33%              40%
Change in total acquisition cost from first estimate 6%              18%              26%
Estimated total acquisition cost growth             42 Billion       202 Billion      295 Billion
Share of programs with 25 percent or more
  increase in program acquisition unit cost         37%              44%              44%
Average schedule delay in delivering
  initial capabilities                              16 Months        17 Months        21 Months

Figure 1: GAO Assessments of Major Defense Acquisition Programs

Over the past 6 years the GAO has been reporting to the DoD that its weapons system acquisition programs are suffering from cost growth and schedule delays, and in a 2008 report the GAO continued to state that these cost and schedule problems have not been rectified. The resulting cost overruns and schedule delays come as no surprise, because no program followed the best practices standards for maturing technology, stabilizing design, and maturing production processes. In fact, the GAO (2008) reported that 88% of the assessed programs began system development without fully maturing critical technologies; 96% of the programs had not demonstrated the stability of their designs before entering the system demonstration phase; and no program had fully matured its production processes before entering production [7]. All in all, the GAO concluded that DoD programs enter various phases of acquisition and product development with knowledge gaps that result in design, technology, and production risks.

B. DoD Acquisition Lifecycle Framework

The DoD has adopted evolutionary acquisition as a strategy to deliver an operational capability over several increments, where each increment is dependent on a sufficiently defined technology maturity level. The objective of evolutionary acquisition is to quickly hand off a capability to a user while the technology development phase continues successively until the required technology maturity is achieved and prototypes of system components are produced. Each increment comprises a set of objectives, entrance criteria, and exit criteria [15]. The stages of the DoD acquisition life-cycle are depicted in figure 2.

Figure 2: DoD Acquisition Life-Cycle

The Milestone Decision Authority (MDA), in collaboration with the appropriate stakeholders, determines whether sufficient knowledge has been obtained at each phase of the acquisition life-cycle before proceeding to the next phase. It is important to note that the initial phase of evolutionary acquisition is preceded by the Materiel Development Decision phase. An acquisition program can begin at any stage of the acquisition life-cycle, but it must meet the entrance criteria to enter the next phase. Therefore, if a program is conceived after Milestone B of the acquisition process framework, a technology readiness assessment must be conducted to ensure that the technologies meet the requirements for the upcoming phase [4, 5, 16].

C. Technology Readiness Assessment (TRA) Process

DODI 5000.2 establishes the requirement for the performance of a Technology Readiness Assessment (TRA) in any defense acquisition program. The TRA is a systematic, metric-based process that evaluates the maturity of the Critical Technology Elements (CTEs) of a system and is accompanied by a report that identifies how the CTEs were selected and why they are considered critical. The TRA is not intended to assess the quality of the system architecture, design, or integration, but only to reveal the readiness of critical system components based on what has been accomplished to date [5].

The DoD requires that a readiness assessment be performed on Critical Technology Elements (CTEs) prior to Milestones B and C of the acquisition life-cycle. The process for performing a TRA is depicted in figure 3 [17]:

Figure 3: Technology Readiness Assessment Process

The process outlined in figure 3 begins with the development of the program schedule for meeting the various milestones needed to achieve program goals and objectives. The TRA process is initiated once the CTEs of the system have been identified by examining all components across the Work Breakdown Structure (WBS). CTEs must be both essential to the system and either new and novel or used in a new or novel manner. Once the CTEs have been identified, data concerning their performance is collected and presented to an independent team of experts in the technologies. Using the TRL metric, the independent review team assesses the maturity of the CTEs and seeks the approval of the S&T Executive, then submits the results to the Deputy Under Secretary of Defense (Science & Technology), DUSD(S&T) [5].

Once the results are submitted, three decisions are possible: the DUSD(S&T) concurs with the TRA, concurs with reservations, or does not concur, in which case the TRA is returned to the Service or Agency [5, 17]. If the DUSD(S&T) does not concur with the results, it either requests another technical assessment or sends the results back to the agency for changes. Further, the DUSD(S&T) forwards the resulting recommendations to the Milestone Decision Authority (MDA) to support the acquisition decision process.

The MDA ensures that the entrance criteria of achieving the appropriate TRL are met prior to each milestone [5, 17, 18].
All CTEs must attain TRL 6 prior to Milestone B and TRL 7 prior to Milestone C. The program will not advance to the next milestone if these criteria are not met; in that case the MDA must either restructure the program to use only mature technologies, delay the program start until all the technologies have adequately matured, modify the program requirements, or request a Technology Maturity Plan (TMP) that describes the rationale for proceeding to the next phase [5, 17]. In special circumstances, the DoD may grant a waiver for national security reasons [17].

III. MATURITY ASSESSMENT METHODS

Technology maturity assessment is an avenue that engineers and program managers utilize to make critical decisions about the probability that a technology can contribute to the success of a system [13]. A significant amount of research has been done to develop tools and methods that can provide insight into technology readiness and track technology maturity through the progression of the system development life cycle in order to provide continuous risk management and enhanced decision support. Although the government and defense industry have widely adopted the Technology Readiness Level (TRL) as a knowledge-based approach, this metric has been considered insufficient. The proposed approaches either expand on the TRL or integrate other metrics with the TRL to provide insight into technology and system readiness and maturity.

A. Technology Readiness Level (TRL)

Technology Readiness Level (TRL) is a metric that was initially pioneered by the National Aeronautics and Space Administration (NASA) Goddard Space Flight Center in the 1980s as a method to assess the readiness and risk of space technology [2, 14, 19-24]. Over time, NASA continued to use TRLs as part of an overall risk assessment process and as a means of comparing maturity between various technologies [24].
NASA incorporated the TRL methodology into the NASA Management Instructions (NMI) 7100 as a systematic approach to the technology planning process [24, 25]. The DoD, along with several other organizations, later adopted this metric and tailored its definitions to meet their needs.

Figure 4: DoD TRL Definitions

Contrary to the good intentions of the TRL metric to improve technology acquisition and transition into systems, the literature indicates that it can in fact introduce risks because of its various insufficiencies, including the lack of standard guidelines for implementing TRLs [26, 27]. These flaws, as discussed in the next section, can potentially convey a false sense of achievement with respect to the maturation of technology. As a result, more robust and objective methods of assessing technology maturity are desired in order to make well-informed procurement decisions.
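
As an illustration only, the CTE definition and the milestone entrance criteria described in Section II.C (TRL 6 before Milestone B, TRL 7 before Milestone C) can be expressed as a small check. This is a minimal sketch, not part of any DoD tool: the `Technology` record, its field names, the function names, and the sample portfolio are all hypothetical, and the sketch assumes that each technology has already been assigned a TRL by an independent review team.

```python
from dataclasses import dataclass

# Minimum TRL that every CTE must reach before each milestone,
# as stated in the text (TRL 6 for Milestone B, TRL 7 for Milestone C).
MILESTONE_MIN_TRL = {"B": 6, "C": 7}


@dataclass(frozen=True)
class Technology:
    """Hypothetical record for one component found in the WBS."""
    name: str
    trl: int                  # assessed TRL on the 1-9 scale
    essential: bool           # essential to the system?
    new_or_novel: bool        # new and novel technology?
    novel_use: bool = False   # existing technology used in a new or novel manner?


def is_cte(tech: Technology) -> bool:
    """A CTE is essential AND (new/novel OR used in a new or novel manner)."""
    return tech.essential and (tech.new_or_novel or tech.novel_use)


def immature_ctes(techs: list[Technology], milestone: str) -> list[str]:
    """Names of CTEs whose TRL is below the threshold for the given milestone."""
    required = MILESTONE_MIN_TRL[milestone]
    return [t.name for t in techs if is_cte(t) and t.trl < required]


def meets_entrance_criteria(techs: list[Technology], milestone: str) -> bool:
    """True only if all CTEs have reached the required TRL."""
    return not immature_ctes(techs, milestone)


if __name__ == "__main__":
    # Hypothetical portfolio, for illustration only.
    portfolio = [
        Technology("phased-array radar", trl=6, essential=True, new_or_novel=True),
        Technology("legacy data bus", trl=9, essential=True, new_or_novel=False),
        Technology("sonar processor", trl=5, essential=True,
                   new_or_novel=False, novel_use=True),
    ]
    for milestone in ("B", "C"):
        print(milestone, meets_entrance_criteria(portfolio, milestone),
              immature_ctes(portfolio, milestone))
```

Note that a pass/fail gate of this kind inherits the limitations of the TRL itself: it says nothing about integration between technologies, about the relative criticality of each CTE, or about how difficult it will be to raise a technology to the next level.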

B. TRL Limitations

While the TRL metric is sufficient at its very basic level in evaluating technology readiness, it is considered deficient in various areas. Sauser et al. claim that the TRL index does not take into account the integration of two technologies [9-12, 23]. They believe that this metric lacks the means of determining the maturity of integration between technologies and its impact on a system. Since it is highly probable that systems fail at integration points, Sauser et al. perceive the assessment of integration maturity as critical to overall system success [9-12, 23].

Further, legislation and regulation require that DoD technologies be assessed with respect to their maturity throughout the acquisition process. However, the problem associated with the use of TRL is that there is no “how to” guideline for implementing the metric in a program. The DoD Interim Guidance simply states that “TRLs (or some equivalent assessment) shall be used” and no further detail is provided [14, 26].

Mahafza (2005) argues that the TRL metric is insufficient because it does not “measure how well the technology is performing against a set of performance criteria.” She claims that the TRL methodology rates the maturity of a technology on a subjective scale and that it is not adequate to label a technology as having high or low maturity. Moreover, Smith (2004) notes that TRLs fall short in technology maturity assessment because they combine multiple components of readiness into a single number, lack the ability to systematically weigh the criticality of each technology to the entire system, and cannot account for the relative contribution of the various readiness components throughout the system life cycle [24].

Cornford et al. (2004) assert that although the TRL provides a high-level understanding of technology maturity, it lacks accuracy and precision. A more accurate description of technology readiness is needed in order to make strategic decisions at critical program junctures and prevent cost overruns and schedule delays. Cornford et al. (2004) describe five limitations of the TRL method of assessing technology maturity [2]:

• Subjective assessment - there exists no formal method of implementing TRLs; the TRL value is assigned to a technology by a technology developer who may be biased; the definitions of each TRL level are prone to broad interpretation.
• Not focused on system-to-system integration - TRLs focus on a component of a technology, and when the component is infused with others at a larger scale, critical integration concerns come forth.
• Focused on hardware and not software - at the time that the TRLs were conceived at NASA, hardware was emphasized significantly more than software.
• Not well integrated into cost and risk modeling tools - errors in assigning TRL levels to a technology will adversely affect cost and risk models that have embedded in them means of reflecting technology maturity.
• Lacking succinct definition of terminology - the definitions of each TRL level can be ambiguous and reliant on an individual’s interpretation. For example, defining the “relevant environment” to advance a technology to TRL 6 is problematic because there are various environments that can be simulated, such as low gravity, radiation, temperature, vacuum, etc.

According to the Office of the Under Secretary of Defense for Acquisition, Technology and Logistics, the TRL conveys the status of technology readiness on a scale only at a particular point in time. However, it does not communicate the possibility and difficulty of further maturing the technology to higher TRL levels.
The Office of the Under Secretary of Defense for Acquisition, Technology and Logistics also pointed out that the TRL is a “single axis, the axis of technology capability demonstration,” therefore it does not give a complete picture of risks in integrating a technology into a system. In order to acquire a full understanding of the readiness and m


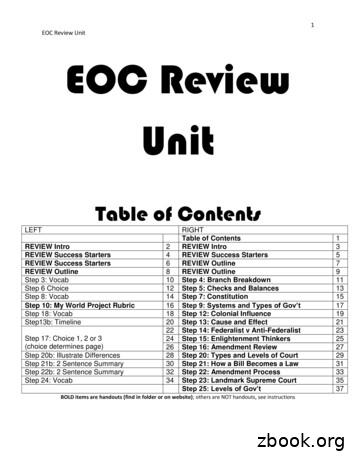
