IMPROVISATION OF SEEKER SATISFACTION IN YAHOO! COMMUNITY QUESTION ANSWERING PORTAL

K. Latha¹ and R. Rajaram²
¹Department of Computer Science and Engineering, Anna University of Technology, Tiruchirappalli, India
E-mail: erklatha@gmail.com
²Department of Information Technology, Thiagarajar College of Engineering, Tamil Nadu, India
E-mail: rrajaram@tce.edu

ICTACT JOURNAL ON SOFT COMPUTING, JANUARY 2011, VOLUME: 01, ISSUE: 03
ISSN: 2229-6956 (ONLINE)
DOI: 10.21917/ijsc.2011.0024

Abstract
One popular community question answering (CQA) site, Yahoo! Answers, had attracted 120 million users worldwide and had 400 million answers to questions available. A typical characteristic of such sites is that they allow anyone to post or answer any question on any subject. Question answering communities have emerged as a popular, and often effective, means of information seeking on the web. By posting questions for other participants to answer, information seekers can obtain specific answers to their questions. However, CQA is not always effective: in some cases a user may obtain a perfect answer within minutes, and in others it may take hours or sometimes days until a satisfactory answer is contributed. We investigate the problem of predicting information seeker satisfaction in Yahoo! collaborative question answering communities, where we attempt to predict whether a question author will be satisfied with the answers submitted by the community participants. Our experimental results, obtained from a large-scale evaluation over thousands of real questions and user ratings, demonstrate the feasibility of modeling and predicting asker satisfaction. We complement our results with a thorough investigation of the interactions and information seeking patterns in question answering communities that correlate with information seeker satisfaction. We also explore automatic ranking, abstract creation from retrieved answers, and history updation, which aim to provide users with what they want or need without explicitly asking them about their satisfaction. Our system could be useful for a variety of applications, such as answer selection, user feedback analysis, and ranking.

Keywords:
Social Media, Community Question Answering, Information Seeker Satisfaction, Ranking, History Updation

1. INTRODUCTION
Community Question Answering (CQA) [15] has emerged as a popular alternative for finding information online. It has attracted millions of users who post millions of questions and hundreds of millions of answers, producing a huge knowledge repository covering all kinds of topics, on top of which many applications can be built. For example, automatic question answering systems, which try to find the answers to questions directly instead of giving a list of related documents, might use CQA [15] repositories as a useful information source. In addition, instead of using general-purpose web search engines, information seekers now have the option to post their questions (often complex [17] and specific) on community QA sites such as Naver or Yahoo! Answers [17], and have their questions answered by other users. These sites are growing rapidly.

WikiAnswers is an ad-supported website where knowledge is shared freely in the form of questions and answers (Q&A). Anyone can ask a question and anyone from anywhere in the world can answer it. This sharing of knowledge in turn becomes part of a permanent information resource. WikiAnswers.com leverages wiki technology and fundamentals, allowing communal ownership and editing of content. Each question has a "living" answer, which is edited and improved over time by the WikiAnswers.com community. WikiAnswers.com uses a system in which every answer can have dozens of different questions that "trigger" it.

However, it is not clear what information needs these CQA [15] portals serve, or how these communities are evolving. Understanding the reasons for the growth, the characteristics of the information needs that are met by such communities, and the benefits and drawbacks of community QA over other means of finding information are all crucial for understanding this phenomenon. As we will show, human assessors find it difficult to predict [1] asker satisfaction, which calls for novel prediction techniques [16] and an evaluation methodology that we begin to develop in this paper.

Not surprisingly, a user's previous interactions, such as questions asked and ratings submitted, are a significant factor for predicting satisfaction. We hypothesize that an asker's satisfaction with contributed answers is largely determined by the asker's expectations, prior knowledge, and previous experience. These are used to update the taste of the asker (history updation), and forthcoming answers are presented based on this past history (taste); this capability is not available in any of the CQA [15] portals.

We report on our exploration of how to improve satisfaction prediction [16], that is, how to predict whether a specific information seeker will be satisfied with any of the contributed answers. Based on the time spent by the asker in a particular session and on the asker's voting, we can predict whether the asker is satisfied with the answers to a given question. If the asker is not satisfied, has not voted within a span of time, or lacks prior (background) knowledge about the answers, our system automatically ranks the answers with the help of ranking functions. Most askers may be irritated by a large number of answers to a question and read only the first two or three. In this situation our abstract generation system can generate the gist (the most important sentences) of all the answers from the asker's point of view.

2. LIFE CYCLE OF A QUESTION IN CQA
The process of posting and obtaining answers to a question is an important phenomenon in CQA [14]. A user posts a question by selecting a category and then entering the question subject (title) and, optionally, details (description). For conciseness, we will refer to this user as the asker in the context of the question, even though the same user is likely to also answer other questions or participate in other roles for other questions. Note that, to prevent abuse, the community rules typically forbid the asker from answering his own questions or voting on the answers. After a short delay (which may include checking for abuse and other processing) the question appears in the respective category list of open questions, normally listed from the most recent down.

At that point, other users can answer the question, vote on other users' answers, comment on the question (e.g., to ask for clarification or provide other, non-answer feedback), or provide various metadata for the question. If the asker is satisfied with any of the answers, he can choose it as the best and provide feedback, ranging from assigning stars or a rating to the best answer to, possibly, textual feedback. At that point, the question is considered closed by the asker, and no new answers are accepted. We believe that in such cases the asker is likely satisfied with at least one of the responses, usually the one he chooses as the best answer. An example of such a "satisfactory" interaction is shown in Fig.1.

Fig.1. Example of "satisfied" question thread

In many cases, however, the asker never closes the question personally, and instead, after some fixed period of time, the question is closed automatically. The QA community has "failed" to provide satisfactory answers in a timely manner and has "lost" the asker's interest. While the true reasons are not known, for simplicity, and to contrast with the "satisfied" outcome above, we consider this outcome to be "unsatisfied." An example of such an interaction is shown in Fig.2.

Fig.2. Example of "unsatisfied" question thread

3. THE ASKER SATISFACTION PROBLEM
Question answering communities are an important application in themselves, and they also provide an unprecedented opportunity to study feedback from the asker. Furthermore, asker satisfaction plays a crucial role in the growth or decay of a question answering community. If many of the askers in CQA are not satisfied with their experience, they will not post new questions and will rely on other means of finding information, which creates the asker satisfaction problem.

4. PROBLEM DEFINITION
We do not yet attempt to analyze the distinction between possibly satisfied and completely unsatisfied, or otherwise dissect the case where the asker is not satisfied. We now state our problem formally from four different angles.

4.1 ANSWER JUSTIFY PROBLEM
The asker may receive a large number of answers to each question. The asker then has to read all the answers and select the one that suits his question. The problem is that the asker may not know which answer to choose.
"To overcome this problem we explore an automatic ranking system that assigns a rank to each answer."

4.2 ANSWER UNDERSTANDING PROBLEM
How can the asker identify the objective of each answer?
"To avoid this problem, abstract generation provides a brief summary of the answers, which helps the reader quickly ascertain each answer's purpose. When used, an abstract always appears at the beginning of all displayed answers, acting as the point of entry."

4.3 ASKER TASTE CHANGES
One important problem is to determine what an asker wants and what form of answer he expects. It is crucial to determine what the user has in mind.
"History updation using distributed learning automata is a good solution to this problem. It remembers the previous behavior of the asker, that is, which answers he selected in the past, so that relevant answers can be shown based on the learned behavior, and the asker's history is updated accordingly."

4.4 TIME CONSUMING PROBLEM
Reading all the retrieved answers takes the asker more time. Does the time factor affect asker satisfaction?

"The time duration is computed from how long the asker views the displayed answers, and it is used for predicting whether the asker is satisfied or unsatisfied."

5. METHODOLOGIES

5.1 AUTOMATIC RANKING BASED ON GENERALIZATION METHOD
The objective of applying learned association rules [10], [9] is to improve QA comparison by providing a more generalized representation. Good generalization [22] has the desired effect of bringing semantically related QA closer to each other that would previously have been incorrectly treated as being further apart. Association rules [10] are able to capture implicit relationships that exist between features of QA. When these rules are applied they have the effect of squashing these features, which can be viewed as feature generalization.

Initially the most important features are extracted using a Markov Random Field (MRF) [21] model. These features are used as the initial seeds for generalization [22]. Then association rule [10], [9] induction is employed to capture feature co-occurrence patterns. It generates rules of the form $H \leftarrow B$, where the body $B$ is a feature from the answers and the head $H$ is a feature from a question. This means that rules can be used to predict the presence of the head feature given that all the features in the question are present in the answer. It also means that a rule whose body is satisfied while the head feature is absent will not be considered.

The idea is to combine feature generalization [22] with feature selection to form a structured representation for ranking. Feature generalization [22] helps tone down ambiguities that exist in free text by capturing semantic relationships and incorporating these in the query representation. This enables a much better comparison of features in QA.

An interesting observation is that with feature selection and generalization a more effective ranking is achieved even with a relatively small set of features. This is attractive because smaller vocabularies can effectively be used to build concise indices that are understandable and easier to interpret. Finally, the retrieved features are used for ranking the answers.

5.2 ABSTRACT GENERATION
With the rapid growth of online information, there is a growing need for tools that help in finding, filtering, and managing high-dimensional data. Automated text categorization is a supervised learning task, defined as assigning category labels to answers based on the likelihood suggested by a training set of answers.

Real-world applications of text categorization often require a system to deal with tens of thousands of categories defined over a large taxonomy. Since building these text classifiers by hand is time consuming and costly, automated text categorization has gained importance over the years.

We have developed an automatic abstract generation [2] system for answers based on rhetorical structure extraction. The system first extracts the rhetorical structure, the compound of the rhetorical relations between sentences in the answers, and then cuts out the less important parts of the extracted structure to generate an abstract [2] of the desired length.

Abstract generation is, like machine translation, one of the ultimate goals of natural language processing. It is realized here as a suitable application of the extracted rhetorical structure. In this paper we describe the abstract generation system based on it.

5.3 RHETORICAL STRUCTURE (RS)
Rhetorical structure represents relations between various chunks of answers in the body of each question. The rhetorical structure is represented in terms of connective expressions and their relations. There are forty categories of rhetorical relations, which are exemplified in Table.1.

Table.1. Example of rhetorical relations

| Relation | Code | Expressions |
|---|---|---|
| Confident | co | I can |
| Example | eg | For example |
| Recommend | rd | Try this |
| Reason | re | Because |
| Assumption | as | I think |
| Plus | pl | And |
| Specialization | sp | Almost, most, always |
| Serial | sr | Thus |
| Summarization | su | After all, finally |
| Extension | ex | This is, there |
| Suggestion | sg | You can |
| Experience | ep | I use, my experience, I used |
| Explanation | en | So |
| Advice | ad | You need, you would |
| Capture | ca | Take |
| Appreciate | ap | Good question |
| Next | ne | Then |
| Simple | si | Just, easy |
| Rare | ra | Some time |
| Condition | cn | If you |
| Negative | po | But, I don't, not sure |
| Must | mu | You should |
| Expectation | en | Hope this |
| Trust | tr | I believe |
| Starting | st | First of all |
| Doubt | dt | May be |
| Accurate | ac | Yes, no |
| Positive | po | Why not? |
| Request | rq | Please |
| Repeat | rt | Again |
| Utilize | ut | Use this |
| Direction | di | Here is |
| While | wi | Since |
| Memorize | me | Remember |
| Question | qu | Can you, are you |
| Same | sa | Sounds like |
| Opinion | op | Statement |
| Verify | ve | Ask |
| Apology | ay | Sorry, excuse |
| Wishes | wi | All the best, welcome, best wishes, good luck |

The rhetorical relation of a sentence, which is its relationship to the preceding part of the text, can be extracted in accordance with the connective expression in the sentence.

The rhetorical structure represents logical relations between sentences or blocks of sentences of each answer. Linguistic clues in the answers, such as connectives, anaphoric expressions, and idiomatic expressions, are used to determine the relationship between the sentences. In the sentence evaluation stage, the system calculates the importance of each sentence in the original text based on the relative importance of the rhetorical relations. The relations are categorized into three types, as shown in Table.2. For the relations categorized as Right Nucleus, the right node is more important, from the point of view of abstract generation [2], than the left node. For the Left Nucleus relations, the situation is reversed. Both nodes of the Both Nucleus relations are equally important.

Table.2. Relative importance of rhetorical relations

| Relation type | Relations | Important node |
|---|---|---|
| Right Nucleus | Experience, negative, example, serial, direction, confident, specialization | Right node |
| Left Nucleus | Especially, reason, accurate, appropriate, simple, rare, assumption, explanation, doubt, request, apology, utilize, opinion | Left node |
| Both Nucleus | Plus, extension, question, capture, appreciate, next, repeat, many, condition, since, ask, same, starting, wishes, memorize, trust, positive, recommend, expectation, advice, summarization | Both nodes |

A sample question with its answers is considered, and the rhetorical structure that is built is shown in Fig.3.

Question: does McDonald's veg burger in India contain egg?

Answer 1: Nope, In India its purely veg, I had taken one of my close associate who is purely veg and I discussed it with the Delhi shop and the manager confirmed and even wanted to give in writing. Made Indian food is my FAVVVV. I would be all over the street eating all the home cooked food out there I live USA and there's mD's on every block.

Thus the rhetorical structure for Answer 1 can be represented by a binary tree:

        op
       /  \
     ex    3
    /  \
   1    2

This structure can also be represented as follows:
[[1 ex 2] op 3]

Answer 2: No, way it's a guaranteed company
[co]

Answer 3: I think yes. But you can ask the manager of McDonald's. Good Luck.
[[1 ad 2] wi 3]

Finally, the abstract generated from all the answers is:
"I had taken one of my close associate who is purely veg and I discussed it with the Delhi shop and the manager confirmed and even wanted to give in writing- No, way it's a guaranteed company- you can ask the manager of McDonald's."

Fig.3. Abstract generation using rhetorical structure

5.4 HISTORY UPDATION BY USING LEARNING AUTOMATA (LA)
Based on the asker's past history (the answers already selected for his previous questions), the taste of the asker can be updated, and we can predict what kind of answer the asker will choose for his current question.

Learning automata [10] are adaptive decision-making devices operating in unknown random environments. The automata [4] approach to learning involves the determination of an optimal action from a set of allowable actions. An automaton can be regarded as an abstract object that has a finite number of possible actions. At each step the selected action is applied to a random environment, and the environment's response is used by the automaton [4] in further action selection. By continuing this process, the automaton learns to select the action with the best grade. The learning algorithm [10] is used by the automaton to determine the selection of the next action from the response of the environment.

The proposed algorithm takes advantage of usage data and link information to recommend answers to the asker based on the learned pattern. For that, it uses a rewarding and penalizing scheme that updates the action probabilities in each step based on a learning algorithm. The rewarding factor for history updation is presented in equation (1), where ω is a constant and λ is obtained from the following intuition: if a user goes from taste i to taste j and there is no link between these tastes, then the value of λ is set to a constant value; otherwise it is set to zero.

If there is a cycle in the user's navigation path, the actions in the cycle, which indicate a change of the asker's taste over a period of time or the asker's dissatisfaction with the previous tastes, must be penalized. The penalization increases with the cycle length. So the parameter b, which is the penalization factor, is calculated from the following equation:

$$b = (\text{steps in the cycle containing } k \text{ and } l) \times \beta \qquad (2)$$

where β is a constant factor. The penalization factor has direct relation with the length of cycle traversed by the ...

This section describes the experimental setting, datasets, and metrics used for producing our results in Section 7. We describe the baselines and our specific methods for predicting asker satisfaction. Our "truth" labels are based on the rating subsequently given to the best answer by the asker himself. It is usually more valuable to correctly predict whether a user is satisfied (e.g., to notify a user of success).
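The reward and penalty scheme of Section 5.4 can be illustrated with a small sketch. The body of equation (1) is not available here, so the reward step below uses the standard linear reward-penalty update for learning automata as a stand-in; it is not necessarily the authors' rewarding factor. Only `penalty_factor` follows equation (2). The function names, the reward rate `a`, the cap at 1.0, and the sample values are all assumptions made for illustration.

```python
from typing import List


def penalty_factor(cycle_steps: int, beta: float) -> float:
    """Equation (2): b = (steps in the cycle) * beta.

    Capping b at 1.0 is an assumption so that it stays a valid rate.
    """
    return min(1.0, cycle_steps * beta)


def reward(probs: List[float], chosen: int, a: float) -> List[float]:
    """Standard linear reward step: shift probability mass toward `chosen`."""
    return [p + a * (1.0 - p) if i == chosen else (1.0 - a) * p
            for i, p in enumerate(probs)]


def penalize(probs: List[float], chosen: int, b: float) -> List[float]:
    """Standard linear penalty step: shift probability mass away from `chosen`."""
    r = len(probs)
    return [(1.0 - b) * p if i == chosen else b / (r - 1) + (1.0 - b) * p
            for i, p in enumerate(probs)]


# Four hypothetical tastes, initially equally likely.
tastes = [0.25, 0.25, 0.25, 0.25]
# The asker selects an answer of taste 0, so that taste is rewarded.
tastes = reward(tastes, chosen=0, a=0.2)
# Later the asker walks a 3-step cycle through taste 0, so it is penalized.
b = penalty_factor(cycle_steps=3, beta=0.1)
tastes = penalize(tastes, chosen=0, b=b)
print([round(p, 3) for p in tastes])  # [0.28, 0.24, 0.24, 0.24]
```

Both update steps keep the probabilities summing to one, and a longer cycle yields a larger `b` and therefore a stronger penalty, which matches the statement that the penalization increases with the cycle length.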

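The nucleus rule of Section 5.3 can also be sketched in a few lines. The example below encodes a rhetorical structure such as [[1 ex 2] op 3] as nested tuples and keeps only the sentences on the important side of each relation, following the three types in Table.2. The tuple encoding, the `NUCLEUS` dictionary (a small subset of Table.2), and the fallback for unknown relation codes are assumptions for illustration; the sketch does not cover the extraction of relations from connective expressions or trimming to a desired length.

```python
from typing import List, Tuple, Union

# Hypothetical encoding: a leaf is a sentence number; an inner node is
# (left subtree, relation code, right subtree), e.g. [[1 ex 2] op 3].
Tree = Union[int, Tuple["Tree", str, "Tree"]]

# Subset of Table.2, keyed by the relation codes of Table.1.
NUCLEUS = {
    "ep": "right", "eg": "right", "co": "right",  # experience, example, confident
    "op": "left", "re": "left", "as": "left",     # opinion, reason, assumption
    "ex": "both", "ad": "both", "pl": "both",     # extension, advice, plus
}


def important_sentences(tree: Tree) -> List[int]:
    """Return the sentence numbers that survive when only nucleus nodes are kept."""
    if isinstance(tree, int):
        return [tree]
    left, relation, right = tree
    # Unknown relation codes keep both sides (an assumption of this sketch).
    side = NUCLEUS.get(relation, "both")
    kept: List[int] = []
    if side in ("left", "both"):
        kept += important_sentences(left)
    if side in ("right", "both"):
        kept += important_sentences(right)
    return kept


# Structure of Answer 1 from the example: [[1 ex 2] op 3]
answer_1: Tree = ((1, "ex", 2), "op", 3)
print(important_sentences(answer_1))  # [1, 2]
```

Because "op" is a Left Nucleus relation, the right node (sentence 3) is dropped, while "ex" is a Both Nucleus relation, so sentences 1 and 2 are both kept.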
K. LATHA AND R. RAJARAM: IMPROVISATION OF SEEKER SATISFACTION IN YAHOO! COMMUNITY QUESTION ANSWERING PORTAL DOI: 10.21917/ijsc.2011.0024 152 IMPROVISATION OF SEEKER SATISFACTION IN YAHOO! COMMUNITY QUESTION ANSWERING PORTAL. K. Latha. 1. and R. Rajaram. 2. 1. Department of Comput

conventional idiom is non-idiomatic improvisation. In this course, we will listen to many sorts of non-idiomatic improvisation, that are seemingly unrelated to each other. One historic class of non-idiomatic improvisation, European Free Improvisation, is now sufficiently established to be identified as its own idiom.

Activity content and sequence were developed using Poulter's Seven Principles of Improvisation Pedagogy. Kratus' Seven Levels of Improvisation were also taken into consideration to develop an effective sequence of activities complimenting the natural progression of improvisation development. Keywords: Improvisation, Middle School, Concert Band.

Improvisation is one of the oldest musical techniques practiced. In some way, improvisation has been a part of most musical styles that have ever existed throughout the world. Ernest Ferand once said that any historical study of music that does not take into account improvisation must present an incomplete and distorted picture of music's .

2.5 Characteristics of Group Jazz Improvisation 42 2.5.1 Related Constructs in Organizational Improvisation 43 2.5.2 Conditions in Organizational Improvisation 44 2.5.3 Factors influencing the quality of Organization Improvisation 45 2.6 Creative Cognition 49 2.6.1 Geneplore Model 49 2.6.2 The Pre-inventive Structure 51

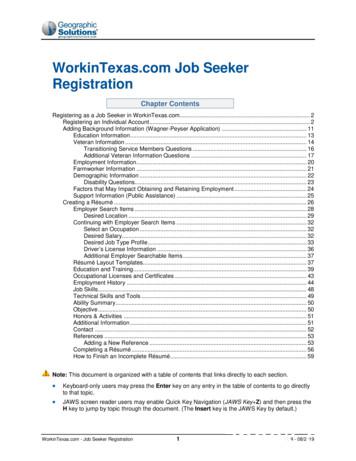

WorkinTexas.com Job Seeker Registration . WorkinTexas.com - Job Seeker Registration . 2 . V19 - 08/2019 . This chapter explains how you, as a job seeker, register a new account in WorkinTexas.com. You’ll learn how to record and re-use your personal background information in the résumés you create and job applications you fill out and submit.

Coker, Jerry Improvising Jazz 170 Grove, Dick The Encyclopedia of Basic Harmony and Theory Applied to Improvisation on All Instruments 190 Haerle, Dan Jazz Improvisation for Keyboard Players 221 Kynaston, Trent P. and Robert J. Ricci Jazz Improvisation 254 LaPorta, John Tonal Organization of Improvisational Technigues 274 Mehegan, John

This paper will focus on using jazz improvisation as a model for teaching improvisation in the standard music theory classroom in order to supplement the acquisition of basic concepts and connect these concepts to the student's applied instrument. In this paper I outline a four-tier jazz improvisation model that focuses on the melodic

dimensional structure of a protein, RNA species, or DNA regulatory element (e.g. a promoter) can provide clues to the way in which they function but proof that the correct mechanism has been elucid-ated requires the analysis of mutants that have amino acid or nucleotide changes at key residues (see Box 8.2). Classically, mutants are generated by treating the test organism with chemical or .