YARN And How MapReduce Works In Hadoop

By Alex Holmes

YARN was created so that Hadoop clusters could run any type of work. This meant MapReduce had to become a YARN application, and it required the Hadoop developers to rewrite key parts of MapReduce. Given that MapReduce had to go through some open-heart surgery to get it working as a YARN application, the goal of this article is to demystify how MapReduce works in Hadoop 2.

Dissecting a YARN MapReduce application

Architectural changes had to be made to MapReduce to port it to YARN. Figure 1 shows the processes involved in MRv2 and some of the interactions between them.

Each MapReduce job is executed as a separate YARN application. When you launch a new MapReduce job, the client calculates the input splits and writes them, along with other job resources, into HDFS (step 1). The client then communicates with the ResourceManager to create the ApplicationMaster for the MapReduce job (step 2). The ApplicationMaster is itself a container, so the ResourceManager allocates the container when resources become available on the cluster and then communicates with a NodeManager to create the ApplicationMaster container (steps 3–4).[1]

[1] If there aren’t any available resources for creating the container, the ResourceManager may choose to kill one or more existing containers to free up space.

For source code, sample chapters, the Online Author Forum, and other resources, go to http://manning.com/holmes2/.
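Step 1 leaves the choice of split boundaries implicit. As an illustration only, the Python model below approximates how a FileInputFormat-style input format sizes splits in Hadoop 2: the HDFS block size is clamped between a minimum and a maximum split size, and the final split may run up to 10% over the split size rather than leaving a tiny remainder. The class and function names are hypothetical, the model ignores block locations and non-splittable files, and other InputFormats compute splits differently.

```python
# Hypothetical, simplified model of FileInputFormat-style split sizing.
# It is an illustration, not Hadoop code: block locations, compression,
# and non-splittable files are ignored.
from dataclasses import dataclass
from typing import List

# The last split may be up to 10% larger than the split size instead of
# producing a tiny trailing split.
SPLIT_SLOP = 1.1


@dataclass(frozen=True)
class InputSplit:
    path: str
    start: int   # byte offset where this split begins
    length: int  # number of bytes in this split


def compute_split_size(block_size: int, min_size: int, max_size: int) -> int:
    """Clamp the HDFS block size between the configured min and max sizes."""
    return max(min_size, min(max_size, block_size))


def get_splits(path: str, file_length: int, block_size: int,
               min_size: int = 1, max_size: int = 2**63 - 1) -> List[InputSplit]:
    """Carve one file into contiguous (start, length) splits."""
    split_size = compute_split_size(block_size, min_size, max_size)
    splits: List[InputSplit] = []
    remaining = file_length
    # Emit full-size splits while more than SPLIT_SLOP splits' worth remains.
    while remaining / split_size > SPLIT_SLOP:
        splits.append(InputSplit(path, file_length - remaining, split_size))
        remaining -= split_size
    # Whatever is left (at most 1.1 x split_size) becomes the final split.
    if remaining > 0:
        splits.append(InputSplit(path, file_length - remaining, remaining))
    return splits


if __name__ == "__main__":
    MB = 1024 * 1024
    for s in get_splits("/data/input.txt", 300 * MB, 128 * MB):
        print(s.start // MB, s.length // MB)
```

With a 128 MB block size, a 300 MB file yields splits of 128, 128, and 44 MB, whereas a 135 MB file stays a single split because 135/128 is within the 1.1 slop factor.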

CHAPTER 2 Introduction to YARN

The MapReduce ApplicationMaster (MRAM) is responsible for creating map and reduce containers and monitoring their status. The MRAM pulls the input splits from HDFS (step 5) so that, when it communicates with the ResourceManager (step 6), it can request that map containers be launched on nodes local to their input data.

Container allocation requests to the ResourceManager are piggybacked on the regular heartbeat messages that flow between the ApplicationMaster and the ResourceManager. The heartbeat responses may contain details on containers that have been allocated to the application. Data locality remains an important part of the architecture: when it requests map containers, the MRAM uses the input splits’ location details to ask for each container to be assigned to one of the nodes that holds its input split, and the ResourceManager makes a best-effort attempt to allocate containers on those nodes.
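To make the heartbeat-and-locality interaction concrete, here is a toy Python model. It is a sketch under stated assumptions, not YARN’s API: the ToyResourceManager class, its heartbeat() and release() methods, and the per-node slot counts are hypothetical. Requests ride along on each heartbeat, the response carries whatever was allocated, and the allocator prefers a node that holds the task’s input split but falls back to any node with spare capacity, mirroring the best-effort behavior described above. Real YARN schedulers are more nuanced; they also consider rack locality and may wait before relaxing locality.

```python
# Toy model of locality-aware container allocation over AM->RM heartbeats.
# All class and method names here are hypothetical; this is not the YARN API.
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class ContainerRequest:
    task_id: str
    preferred_hosts: Tuple[str, ...]  # nodes holding the task's input split


@dataclass(frozen=True)
class Allocation:
    task_id: str
    host: str
    node_local: bool  # True if the container landed on a preferred host


class ToyResourceManager:
    def __init__(self, free_slots: Dict[str, int]):
        self.free_slots = dict(free_slots)  # host -> free container slots
        self.pending: List[ContainerRequest] = []

    def heartbeat(self, new_requests: List[ContainerRequest]) -> List[Allocation]:
        """Accept piggybacked requests; return allocations in the response."""
        self.pending.extend(new_requests)
        allocated: List[Allocation] = []
        still_pending: List[ContainerRequest] = []
        for req in self.pending:
            host = self._pick_host(req)
            if host is None:
                still_pending.append(req)  # retry on a later heartbeat
                continue
            self.free_slots[host] -= 1
            allocated.append(Allocation(req.task_id, host, host in req.preferred_hosts))
        self.pending = still_pending
        return allocated

    def release(self, host: str) -> None:
        """Simulate a container on `host` finishing and freeing its slot."""
        self.free_slots[host] = self.free_slots.get(host, 0) + 1

    def _pick_host(self, req: ContainerRequest) -> Optional[str]:
        # Best effort: first a node holding the split, then any node with room.
        for host in req.preferred_hosts:
            if self.free_slots.get(host, 0) > 0:
                return host
        for host, slots in self.free_slots.items():
            if slots > 0:
                return host
        return None
```

For example, with one free slot on node1 and node2 and none on node3, a request preferring node1 is placed node-locally, a second request for node1 spills over to node2, and a request that only lists node3 stays pending until a container there is released and a later heartbeat picks it up.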

[Figure 1: The interactions of a MapReduce 2 YARN application. Components shown: client host (Client), Hadoop master, Hadoop slaves, ResourceManager, JobHistoryServer, MR AppMaster, NodeManager, YarnChild, ShuffleHandler, and HDFS; the interactions are numbered 1–8 to match the steps in the text.]

Once the MRAM is allocated a container, it talks to the NodeManager to launch the map or reduce task (steps 7–8). From this point on, the map or reduce process behaves very much as it did in MRv1.

THE SHUFFLE

The shuffle phase in MapReduce, which is responsible for sorting mapper outputs and distributing them to the reducers, didn’t fundamentally change in MapReduce 2. The main difference is that the map outputs are fetched via ShuffleHandlers, which are auxiliary YARN services that run on each slave node.[2] Some minor memory-management tweaks were made to the shuffle implementation; for example, io.sort.record.percent is no longer used.

[2] The ShuffleHandler is configured in yarn-site.xml: the property name is yarn.nodemanager.aux-services and the value is mapreduce_shuffle.

WHERE’S THE JOBTRACKER?

You’ll notice that the JobTracker no longer exists in this architecture. The scheduling part of the JobTracker was moved into the YARN ResourceManager as a general-purpose resource scheduler. The remaining part of the JobTracker, which primarily holds metadata about running and completed jobs, was split in two. Each MapReduce ApplicationMaster hosts a UI that renders details on the current job, and once jobs are completed, their details are pushed to the JobHistoryServer, which aggregates and renders details on all completed jobs.

Uber jobs

When running small MapReduce jobs, the time taken for resource scheduling and process forking is often a large percentage of the overall runtime. In MapReduce 1 you had no choice about this overhead, but MapReduce 2 is smarter and can run lightweight jobs as quickly as possible.

TECHNIQUE 7 Running small MapReduce jobs

This technique looks at how you can run MapReduce jobs within the MapReduce ApplicationMaster. This is useful when you’re working with a small amount of data, because it removes the additional time that MapReduce normally spends spinning up and tearing down map and reduce processes.

[Figure: The JobHistory UI, showing MapReduce applications that have completed]

Problem

You have a MapReduce job that operates on a small dataset, and you want to avoid the overhead of scheduling and creating map and reduce processes.

Solution

Configure your job to enable uber jobs; this will run the mappers and reducers in the same process as the ApplicationMaster.

Discussion

Uber jobs are jobs that are executed within the MapReduce ApplicationMaster. Rather than liaise with the ResourceManager to create the map and reduce containers, the ApplicationMaster runs the map and reduce tasks within its own process and avoids the overhead of launching and communicating with remote containers.

To enable uber jobs, you need to set the following property:

mapreduce.job.ubertask.enable = true

Table 1 lists some additional properties that control whether a job qualifies for uberization.
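The enable flag and the limits in Table 1 combine into a single yes/no decision. The Python function below sketches that decision under stated assumptions: the function name and the plain dict standing in for the job configuration are hypothetical, the defaults follow Table 1 (with maxbytes falling back to the block size), and the reducer limit is capped at 1 to reflect the reducer restriction noted after the table. Hadoop’s actual check may also weigh other factors, such as whether the tasks’ memory requirements fit within the ApplicationMaster’s container, which this sketch ignores.

```python
# Hypothetical sketch of the uber-job eligibility decision; not Hadoop code.
from typing import Any, Dict


def is_uberizable(conf: Dict[str, Any], num_maps: int, num_reduces: int,
                  total_input_bytes: int, block_size: int) -> bool:
    """Return True if a job would run inside the MapReduce ApplicationMaster."""
    if not conf.get("mapreduce.job.ubertask.enable", False):
        return False  # uber mode is opt-in
    max_maps = conf.get("mapreduce.job.ubertask.maxmaps", 9)
    # Only map-only jobs and jobs with one reducer are supported,
    # so a configured value above 1 is capped at 1.
    max_reduces = min(conf.get("mapreduce.job.ubertask.maxreduces", 1), 1)
    # When maxbytes is unset, the input block size is the limit.
    max_bytes = conf.get("mapreduce.job.ubertask.maxbytes", block_size)
    return (num_maps <= max_maps
            and num_reduces <= max_reduces
            and total_input_bytes <= max_bytes)
```

Under these assumptions, a job with 4 maps, 1 reducer, and 10 MB of input on a cluster with 128 MB blocks qualifies once mapreduce.job.ubertask.enable is true, while the same job with 10 maps or 2 reducers does not.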

Table 1 Properties for customizing uber jobs

| Property | Default value | Description |
|---|---|---|
| mapreduce.job.ubertask.maxmaps | 9 | The number of mappers for a job must be less than or equal to this value for the job to be uberized. |
| mapreduce.job.ubertask.maxreduces | 1 | The number of reducers for a job must be less than or equal to this value for the job to be uberized. |
| mapreduce.job.ubertask.maxbytes | Default block size | The total input size of a job must be less than or equal to this value for the job to be uberized. |

When running uber jobs, MapReduce disables speculative execution and also sets the maximum number of attempts for tasks to 1.

Reducer restrictions: Currently only map-only jobs and jobs with one reducer are supported for uberization.

Uber jobs are a handy new addition to MapReduce’s capabilities, and they only work on YARN. This concludes our look at MapReduce on YARN.

To read more about YARN, MapReduce, and Hadoop in action, check out Alex Holmes’ book Hadoop in Practice, 2nd edition.


The blue yarn is 43 cm long. The red yarn is 28 cm longer than the blue yarn. The green yarn is 15 cm shorter than the red yarn. What is the length of the green yarn? Answer: The length of the green yarn is cm. Step 1. Find the length of the red yarn: 43 28 71 Step 2. Find the length of the green yarn: 71 -

Cloudera Runtime Tuning Apache Hadoop YARN The common MapReduce parameters mapreduce.map.java.opts, mapreduce.reduce.java.opts, and yarn.app.mapreduce.am.command-opts

Core spun yarn shows some improved characteristics over 100% cotton yarn or 100% filament yarns. Core spun yarn was preferred to blended staple spun yarn in terms of strength and comfort [1,2]. Phenomenal improvements in durability and aesthetic properties were observed in core spun yarn [3,4]compared to cotton spun yarn. As the cotton was wrapped

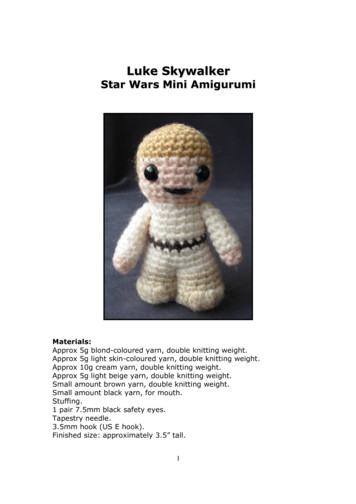

Star Wars Mini Amigurumi i Materials: Approx 5g blond-coloured yarn, double knitting weight. Approx 5g light skin-coloured yarn, double knitting weight. Approx 10g cream yarn, double knitting weight. Approx 5g light beige yarn, double knitting weight. Small amount brown yarn, double knitting weight. Small amount black yarn, for mouth. Stuffing.

A. Hadoop and MDFS Overview The two primary components of Apache Hadoop are MapReduce, a scalable and parallel processing framework, and HDFS, the filesystem used by MapReduce (Figure 1). Within the MapReduce framework, the JobTracker and the TaskTracker are the two most important modules. The Job-Tracker is the MapReduce master daemon that .

MapReduce Design Patterns. MapReduce Restrictions I Any algorithm that needs to be implemented using MapReduce must be expressed in terms of a small number of rigidly de ned components that must t together in very speci c ways. I Synchronization is di cult. Within a single MapReduce job,

affected by these yarn characteristics. . Thus if there are 10 hanks of cotton yarn that weigh one pound, this is 10s yarn. Each of the hanks is 840 yards long, so the total length of the yarn is 8,400 yards. So, if its a coarser yarn it will take fewer hanks, and less

The empirical study suggests that the modern management approach not so much substitutes but complements the more traditional approach. It comprises an addition to traditional management, with internal motivation and intrinsic rewards have a strong, positive effect on performance, and short term focus exhibiting a negative effect on performance. These findings contribute to the current .