A BRIEF STUDY ON SPEECH EMOTION RECOGNITION

International Journal of Scientific & Engineering Research, Volume 5, Issue 1, January-2014
ISSN 2229-5518
IJSER 2014 http://www.ijser.org

Akalpita Das, Department of Instrumentation & USIC, Gauhati University, Guwahati, India, dasakalpita@gmail.com
Laba Kr. Thakuria, Department of Instrumentation & USIC, Gauhati University, Guwahati, India, thakurialaba@gmail.com
Purnendu Acharjee, Department of Instrumentation & USIC, Gauhati University, Guwahati, India, pbacharyaa@gmail.com
Prof. P.H. Talukdar, Department of Instrumentation & USIC, Gauhati University, Guwahati, India, phtassam@gmail.com

Abstract: Speech Emotion Recognition (SER) is an active research topic because of its wide range of applications, and it has also become a challenge in the field of speech processing. In this paper, we carry out a brief study of speech emotion analysis along with emotion recognition. The paper covers the different types of emotions, the features used to identify those emotions and the various classifiers used to classify them. The first part of the paper gives an introductory description. The second part covers the different features along with some popular extraction methods. The third part describes various classifiers used in SER, and the conclusion closes the paper.

Key Terms: SER; MFCC; LPCC; SVM; GMM; HMM; KNN; AdaBoost algorithm.

I. INTRODUCTION

Speech Emotion Analysis refers to the use of various methods to analyze vocal behaviour as a marker of affect (e.g., emotions, moods, and stress), focusing on the nonverbal aspects of speech. The basic assumption is that there is a set of objectively measurable voice parameters that reflect the affective state a person is currently experiencing (or expressing for strategic purposes in social interaction). This assumption appears reasonable given that most affective states involve physiological reactions (e.g., changes in the autonomic and somatic nervous systems), which in turn modify different aspects of the voice production process. For example, the sympathetic arousal associated with anger often produces changes in respiration and an increase in muscle tension. These changes influence the vibration of the vocal folds and the shape of the vocal tract, and therefore the acoustic characteristics of the speech, which the listener can in turn use to infer the speaker's state [19].

Human beings can usually recognize various kinds of emotions easily, an ability the human mind acquires through years of practice and observation. From childhood, the mind captures all kinds of emotions and learns to differentiate between them based on its observations. For instance, when a person is angry, his tone rises, his expression becomes stern and the content of his speech is no longer pleasant [3]. Similarly, when a person is happy, he speaks in a musical tone, there is a look of glee on his face and the content of his speech is rather pleasant and joyous. Based on these observations, a listener can quickly identify the state of the speaker: whether he is happy, sad, angry, depressed, disgusted, etc. Speech Emotion Recognition is the area of research that aims to enable a machine to recognize emotions from speech as humans do.

Emotions expressed in the voice can be analyzed at three different levels:
A) The physiological level (e.g., describing nerve impulses or muscle innervation patterns of the major structures involved in the voice-production process).
B) The phonatory-articulatory level (e.g., describing the position or movement of the major structures, such as the vocal folds).
C) The acoustic level (e.g., describing characteristics of the speech waveform emanating from the mouth).

The general architecture of a Speech Emotion Recognition (SER) system has three steps, shown in Figure 1:
a) A speech processing system extracts appropriate quantities from the signal, such as pitch or energy.
b) These quantities are summarized into a reduced set of features by a feature extractor.
c) A classifier learns, in a supervised manner from example data, how to associate the features with the emotions.

Fig. 1: Architecture for Speech Emotion Recognition (SER)
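The three-step architecture can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the system of Figure 1. Step (a) computes per-frame log energy and an autocorrelation pitch estimate. Step (b) summarizes these contours into their mean and standard deviation. Step (c) uses a nearest-centroid rule as a stand-in for the classifiers discussed later. The 8 kHz sampling rate, the frame sizes, every function name and the synthetic tones used as "utterances" are hypothetical.

```python
import math
from statistics import mean, pstdev

FS = 8000  # assumed sampling rate (Hz) for this sketch


def make_frames(signal, frame_len=400, hop=200):
    """Split a signal into overlapping frames (50 ms frames, 25 ms hop at 8 kHz)."""
    return [signal[i:i + frame_len]
            for i in range(0, len(signal) - frame_len + 1, hop)]


def frame_pitch(frame, fs=FS, fmin=60.0, fmax=400.0):
    """Crude F0 estimate: the lag in [fs/fmax, fs/fmin] with the largest autocorrelation."""
    lags = range(int(fs / fmax), int(fs / fmin) + 1)
    best_lag = max(lags, key=lambda k: sum(frame[n] * frame[n - k]
                                           for n in range(k, len(frame))))
    return fs / best_lag


def extract_quantities(signal):
    """Step (a): per-frame log energy and pitch contours."""
    frames = make_frames(signal)
    log_energy = [math.log(sum(s * s for s in f) + 1e-12) for f in frames]
    pitch = [frame_pitch(f) for f in frames]
    return log_energy, pitch


def summarize(log_energy, pitch):
    """Step (b): reduce the contours to a fixed-length feature vector."""
    return [mean(log_energy), pstdev(log_energy), mean(pitch), pstdev(pitch)]


def ser_features(signal):
    return summarize(*extract_quantities(signal))


class NearestCentroid:
    """Step (c): a deliberately simple supervised classifier, one centroid per emotion."""

    def fit(self, features, labels):
        self.centroids = {}
        for label in set(labels):
            rows = [f for f, lab in zip(features, labels) if lab == label]
            self.centroids[label] = [mean(col) for col in zip(*rows)]
        return self

    def predict(self, feature):
        return min(self.centroids,
                   key=lambda lab: math.dist(feature, self.centroids[lab]))


def tone(freq, amp, seconds=0.25, fs=FS):
    """Hypothetical stand-in for an utterance: a pure tone of given pitch and loudness."""
    return [amp * math.sin(2 * math.pi * freq * n / fs)
            for n in range(int(seconds * fs))]


if __name__ == "__main__":
    train = [(tone(220, 0.9), "angry"), (tone(240, 0.8), "angry"),
             (tone(110, 0.2), "sad"), (tone(120, 0.25), "sad")]
    clf = NearestCentroid().fit([ser_features(s) for s, _ in train],
                                [lab for _, lab in train])
    print(clf.predict(ser_features(tone(230, 0.85))))
```

The toy training set pairs "angry" with loud, high tones and "sad" with quiet, low ones. A loud 230 Hz test tone should therefore land nearest the "angry" centroid. Real systems would use recorded speech, richer features and a stronger classifier.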

Both spectral and prosodic features can be used for speech emotion recognition because both contain emotional information. Potential features are extracted from each utterance to computationally map speech patterns to emotions. The selected features are then used to train and test a classifier that recognizes the emotions.

Figure 2: Training and validation of Emotion Recognition Models

II. TYPES OF EMOTIONAL SPEECH

As reported in [19], there are three methods of constituting an emotional corpus. Natural emotions are recordings of spontaneous emotional states that occurred naturally. This method is characterized by high ecological validity, but it suffers from the limited number of available speakers and makes annotation difficult. Simulated emotions are emotional states portrayed by professional or lay actors according to emotion labels or typical scenarios. This method makes it easy to constitute an emotion corpus, but it has been criticized because such emotions are more exaggerated than natural or induced emotions. Induced emotions require speakers to think of a past incident and to induce the same emotion in themselves by remembering the entire situation of that incident.

III. DATABASES

Despite all the research efforts, the performance of SER systems remains relatively low compared to related fields such as speaker verification. The low recognition rate is reflected in the high level of confusion between emotion classes, and this confusion can have several sources. The first is the uncertainty that characterizes the definition of emotion in psychology. A second source is overlap in the acoustic space. This overlap is not limited to neighbouring classes; it also occurs between some emotions in symmetrical positions with respect to the active/passive axis of the dimensional model shown in Figure 3. Several studies have confirmed that joy and anger are an example of such ambiguity [20][21].

Figure 3: Dimensional model for different emotions

Another important factor that increases the ambiguity is the significant variability between individuals in expressing the same emotion class. This variability can have several origins, such as the speaker's culture, age, gender and spoken language. Finally, noise contained in the emotion corpus increases the confusion. Annotators can introduce this noise during labelling, particularly for blended emotions such as fear and anger.

Generally, two types of databases are used in emotion recognition: acted and real. As the name suggests, in an acted emotional speech corpus a professional actor is asked to speak in a certain emotion. In real databases, the speech for each emotion is obtained by recording conversations in real-life situations such as call centres and talk shows [6]. However, the features of acted and real emotional speech have been observed to differ, because acted emotions are not felt while speaking and thus come out more strongly [7].

IV. FEATURES OF SPEECH

Speech signals are produced when the source signal excites the vocal tract. Speech features can therefore be found both in the vocal tract and in the excitation source signal.
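The source/vocal-tract distinction can be made concrete with a deliberately simplified source-filter sketch. It assumes an idealized excitation source (a unit impulse train at F0) and an idealized vocal tract (a single two-pole resonance standing in for one formant). The function names, the 8 kHz rate and the pole radius `r=0.97` are illustrative choices and do not come from the paper. The pitch recovered by autocorrelation reflects the source. The strongest spectral peak reflects the vocal-tract resonance.

```python
import math


def pulse_train(f0, fs, n_samples):
    """Idealized excitation source: one unit impulse every fs/f0 samples."""
    period = int(round(fs / f0))
    return [1.0 if n % period == 0 else 0.0 for n in range(n_samples)]


def resonator(x, formant_hz, fs, r=0.97):
    """Idealized vocal tract: one two-pole resonance (a single formant).

    y[n] = x[n] + 2 r cos(theta) y[n-1] - r^2 y[n-2], theta = 2*pi*formant/fs
    """
    theta = 2 * math.pi * formant_hz / fs
    a1, a2 = 2 * r * math.cos(theta), -r * r
    y, y1, y2 = [], 0.0, 0.0
    for sample in x:
        out = sample + a1 * y1 + a2 * y2
        y.append(out)
        y1, y2 = out, y1
    return y


def pitch_autocorr(x, fs, fmin=60.0, fmax=400.0):
    """Source property: F0 from the lag that maximizes the autocorrelation."""
    lags = range(int(fs / fmax), int(fs / fmin) + 1)
    best_lag = max(lags, key=lambda k: sum(x[n] * x[n - k] for n in range(k, len(x))))
    return fs / best_lag


def spectral_peak(x, fs):
    """Vocal-tract property: frequency of the strongest DFT bin (DC excluded)."""
    n = len(x)

    def magnitude(k):
        re = im = 0.0
        for i, v in enumerate(x):
            angle = 2 * math.pi * k * i / n
            re += v * math.cos(angle)
            im -= v * math.sin(angle)
        return math.hypot(re, im)

    best_bin = max(range(1, n // 2), key=magnitude)
    return best_bin * fs / n


if __name__ == "__main__":
    fs = 8000
    for formant in (700, 1200):
        vowel = resonator(pulse_train(100, fs, 800), formant, fs)
        print(formant, pitch_autocorr(vowel, fs), spectral_peak(vowel, fs))
```

With the same 100 Hz source, moving the formant from 700 Hz to 1200 Hz should leave the estimated pitch unchanged while the spectral peak follows the resonance. This illustrates why source features and spectral (system) features carry complementary information.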
Features extracted from the vocal tract system are called system features or spectral features [8]. The most popular spectral features are Mel frequency cepstral coefficients (MFCCs), linear prediction cepstral coefficients (LPCCs) and perceptual linear prediction coefficients (PLPCs). Features extracted from the excitation source signal are called source features; linear prediction (LP) and glottal volume velocity (GVV) are examples. Prosodic features are extracted from long segments of speech such as sentences, words and syllables, and are also known as supra-segmental features [9]. They describe speech properties such as rhythm, intonation, stress, volume and duration. The acoustic correlates of the prosodic features are pitch, energy, duration and their derivatives. The pitch signal is produced when the vocal folds vibrate [10], and pitch frequency and glottal air velocity are the features related to it. Speech energy is useful because it is related to the arousal level of the emotion. Prosodic features are used to capture emotional expression and the excited behaviour of the articulators. Glottal activity characteristics are evaluated using source features [11]. Spectral features capture information about the movement of the articulators and the shape and size of the vocal tract, which together produce different sounds. An articulator is a part of the vocal organs that helps form speech sounds. Active articulators are organs such as the pharynx, soft palate, lips and tongue; the upper teeth, alveolar ridge and hard palate are passive articulators [12].

Feature extraction is based on partitioning speech into short intervals known as frames. Selecting features that carry information about emotion is an important step in an SER system. There are two types of features: prosodic features, including energy and pitch, and spectral features, including MFCC, MEDC and LPCC.

A) Energy and related features
Energy is the most basic and important feature of the speech signal. To obtain statistics of the energy feature, a short-term function is used to extract the energy value of each speech frame. Statistics of the energy over the whole speech sample, such as the mean, maximum, variance, variation range and contour of energy, are then computed from these frame values [26].

B) Pitch and related features
The vibration rate of the vocal folds is called the fundamental frequency F0 or pitch frequency. The pitch signal carries information about emotion because it depends on the tension of the vocal folds and the subglottal air pressure. As a result, the mean, variance, variation range and contour of pitch differ across the seven basic emotional states [25]. The following statistics are calculated from the pitch and used in the pitch feature vector [27]:
1. Mean, median, variance, maximum and minimum (for the pitch feature vector and its derivative)
2. Average energies of voiced and unvoiced speech
3. Speaking rate (inverse of the average length of the voiced part of the utterance)

C) MFCC and MEDC features
Mel-frequency cepstrum coefficients are the most important speech feature, being simple to calculate, good at discriminating between classes and robust to noise. MFCC also has good frequency resolution in the low-frequency region. The MEDC extraction process is similar to that of MFCC. The only difference is that MEDC takes the logarithmic mean of the energies after the Mel filter bank and frequency warping, whereas MFCC takes the logarithm after the Mel filter bank and frequency warping. The first and second differences of this feature are then also computed [25]. Mel frequency cepstrum coefficients (MFCCs) are the coefficients of the Mel frequency cepstrum (MFC), which is in turn derived from the power cepstrum. The word "cepstrum" is formed from "spectrum" by reversing its first four letters [13]. A cepstrum is obtained by computing the Fourier transform of the logarithm of the spectrum of a signal. There are different kinds of cepstrum, such as the complex cepstrum, real cepstrum, phase cepstrum and power cepstrum; the power cepstrum is used in speech synthesis applications. In the ordinary cepstrum the frequency bands are linearly spaced, whereas in the MFC they are equally spaced on the mel scale [14]. The MFC therefore approximates the response of the human auditory system more closely and can provide a better representation of speech.

D) Linear Prediction Cepstrum Coefficients
LPCC embodies the characteristics of a particular speech channel. The same person speaking with different emotions will have different channel characteristics, so these coefficients can be extracted to identify the emotions contained in speech. LPCCs are usually computed from the linear prediction coefficients (LPC) by a recursion [25].

Before features are extracted, background noise and any other disturbances should be removed. Such noise interferes with the characteristics of the actual speech and alters the extracted features.

V. THE CLASSIFIER

In the real world, human beings can easily detect various kinds of emotions, an ability the human mind acquires through years of practice and observation. A human-machine interface that processes speech with emotional content uses a similar concept: training followed by testing [4]. In the training phase, the interface is fed with samples of each emotion. The classifier used in the interface extracts features from all the samples and forms a mixture for each emotion. In the testing phase, emotional speech is given as input to the classifier. The classifier extracts the features from the input and compares them to all the mixtures. The input is assigned to the emotion it is closest to; in other words, the input file is classified into the emotion whose features are most similar to those of the input file.
A number of features and classifiers can be used for emotion detection. However, it is difficult to identify the best model among them, because the choice of feature set and classifier depends on the problem [5].
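The train-then-test procedure described above can be sketched as follows. It assumes each utterance has already been reduced to a fixed-length feature vector. As a simplifying assumption, each emotion's "mixture" is a single diagonal Gaussian (a one-component GMM) estimated directly from the training vectors; a full GMM would use several components fitted with expectation-maximization. The class name, the variance floor and the two-dimensional example features (mean pitch in Hz, mean log energy) are hypothetical.

```python
import math
from statistics import fmean, pvariance


class GaussianPerEmotion:
    """One diagonal Gaussian per emotion; a test input goes to the best-scoring model.

    This is a one-component simplification of the "mixture per emotion" idea;
    a full GMM would fit several components per emotion with EM.
    """

    def __init__(self, var_floor=1e-3):
        self.var_floor = var_floor  # keeps variances positive on tiny training sets
        self.models = {}

    def fit(self, features, labels):
        """Training phase: estimate a mean and variance per feature for each emotion."""
        for emotion in set(labels):
            rows = [f for f, lab in zip(features, labels) if lab == emotion]
            columns = list(zip(*rows))
            means = [fmean(c) for c in columns]
            variances = [max(pvariance(c), self.var_floor) for c in columns]
            self.models[emotion] = (means, variances)
        return self

    def log_likelihood(self, x, emotion):
        """Log density of x under the emotion's diagonal Gaussian."""
        means, variances = self.models[emotion]
        return sum(-0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
                   for xi, m, v in zip(x, means, variances))

    def predict(self, x):
        """Testing phase: choose the emotion whose model scores x highest ("closest")."""
        return max(self.models, key=lambda e: self.log_likelihood(x, e))


if __name__ == "__main__":
    # Hypothetical feature vectors: [mean pitch (Hz), mean log energy]
    X = [[230, 5.0], [245, 5.2], [220, 4.8],
         [115, 2.1], [120, 2.4], [110, 2.0],
         [180, 3.8], [175, 3.6], [185, 4.0]]
    y = ["angry"] * 3 + ["sad"] * 3 + ["neutral"] * 3
    model = GaussianPerEmotion().fit(X, y)
    print(model.predict([228, 4.9]), model.predict([117, 2.2]))
```

Scoring by likelihood rather than raw distance lets each emotion's spread matter. A class with widely varying pitch penalizes a pitch deviation less than a tightly clustered class does.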

The classifiers currently in use include Artificial Neural Networks (ANNs), Gaussian Mixture Models (GMMs), Hidden Markov Models (HMMs), k-nearest neighbours (KNN), Support Vector Machines (SVMs) [15] and the AdaBoost algorithm. Classification techniques can be divided into two categories: those that use timing information and those that do not [16]. Techniques based on HMMs and ANNs retain the timing information, whereas techniques based on SVMs and the Bayes classifier lose it. One advantage of the techniques that retain timing information is that they can also be used for speech recognition in addition to emotion recognition [17]. The individual classifiers are discussed below.

A) Support Vector Machine (SVM):
The SVM is a binary classifier and a simple, computationally efficient machine learning algorithm. It is widely used for pattern recognition and classification problems, and with limited training data it can classify very well compared to other classifiers [28]. The idea behind the SVM is to use a kernel function to transform the original input set into a high-dimensional feature space, where the classes are separated by a maximum-margin hyperplane. This transformation allows non-linear problems to be solved.

B) Hidden Markov Model (HMM):
An HMM consists of a first-order Markov chain whose states are hidden from the observer, so the internal behaviour of the model remains hidden. The hidden states of the model capture the temporal structure of the data. Hidden Markov Models are statistical models that describe sequences of events. An advantage of the HMM is that the state transition matrix lets it capture the temporal dynamics of the speech features. During classification, the probability that each model generated the given speech signal is calculated, and the classifier outputs the model with the maximum probability of having generated that signal [29]. For emotion recognition using HMMs, the database is first sorted according to the mode of classification. The features are then extracted from the input waveform and added to the database. The transition and emission matrices are built according to the modes and generate random sequences of states and emissions from the model. Finally, the state sequence probability is estimated using the Viterbi algorithm [30].

C) Gaussian Mixture Models (GMMs):
Gaussian Mixture Models (GMMs) are considered good for density estimation and clustering [18], and the expectation-maximization (EM) algorithm is used to fit them. GMMs are composed of component functions called Gaussians, and the number of Gaussians in the mixture model is also referred to as the number of components. The number of components can be chosen according to the number of training data points. However, the model becomes more complex as the number of components increases.

D) K Nearest Neighbour (KNN):
A more general version of the nearest neighbour technique bases the classification of an unknown sample on the “votes” of its K nearest neighbours rather than on only its single nearest neighbour. Among the various methods of supervised statistical pattern recognition, the nearest neighbour rule is the most traditional. It makes no a priori assumptions about the distributions from which the training examples are drawn, and it uses a training set containing all cases. A new sample is classified by calculating the distance to the nearest training case, whose class label then determines the classification of the sample. Larger values of K help reduce the effect of noisy points within the training data set, and K is often chosen through cross-validation [31].

E) AdaBoost Algorithm:
AdaBoost is an adaptive algorithm that iteratively builds a strong classifier from weak classifiers. In each iteration, a weak classifier is used to classify the data points of the training set. Initially all data points are given equal weights, but after each iteration the weights of incorrectly classified points are increased so that the classifier in the next iteration focuses more on them. This decreases the global error of the classifier and thus builds a stronger classifier. AdaBoost is also used as a feature selector for training SVMs [32].

VI. CONCLUSION

Recent interest in speech emotion recognition research has led to applications in call-centre analytics, human-machine and human-robot interfaces, multimedia retrieval, surveillance tasks, behavioural health informatics and improved speech recognition. This study has given an overview of SER methods for extracting audio features from speech samples and briefly explained various classifier algorithms. Speech Emotion Recognition has a promising future. Its accuracy depends on three factors: the emotional speech database, the combination of features extracted from that database to train the model, and the type of classification algorithm used to assign emotions to the appropriate class (e.g. happy, sad, anger, surprise, etc.). This study aims to provide a simple guide for beginners carrying out research in speech emotion recognition.

VII. REFERENCES

[1] S. G. Koolagudi, S. Maity, V. A. Kumar, S. Chakrabarti, and K. S. Rao, “IITKGP-SESC: Speech Database for Emotion Analysis,” Communications in Computer and Information Science, JIIT University, Noida, India: Springer, ISSN 1865-0929, August 17-19, 2009.

[2] S. G. Koolagudi and K. S. Rao, “Exploring speech features for classifying emotions along valence dimension,” in The 3rd International Conference on Pattern Recognition and Machine Intelligence (PReMI-09), Springer LNCS (S. C. et al., ed.), IIT Delhi, pp. 537–542, Springer-Verlag, Heidelberg, Germany, December 2009.
[3] S. G. Koolagudi, S. Ray, and K. S. Rao, “Emotion classification based on speaking rate

Abstract—Speech Emotion Recognition is a current research because of its topic wide range of applicationsand it becamea challenge in the field of speech processing too. In this paper, we have carried out a study on brief Speech Emotion Analysis along with Emotion Recognition.

speech 1 Part 2 – Speech Therapy Speech Therapy Page updated: August 2020 This section contains information about speech therapy services and program coverage (California Code of Regulations [CCR], Title 22, Section 51309). For additional help, refer to the speech therapy billing example section in the appropriate Part 2 manual. Program Coverage

speech or audio processing system that accomplishes a simple or even a complex task—e.g., pitch detection, voiced-unvoiced detection, speech/silence classification, speech synthesis, speech recognition, speaker recognition, helium speech restoration, speech coding, MP3 audio coding, etc. Every student is also required to make a 10-minute

9/8/11! PSY 719 - Speech! 1! Overview 1) Speech articulation and the sounds of speech. 2) The acoustic structure of speech. 3) The classic problems in understanding speech perception: segmentation, units, and variability. 4) Basic perceptual data and the mapping of sound to phoneme. 5) Higher level influences on perception.

1 11/16/11 1 Speech Perception Chapter 13 Review session Thursday 11/17 5:30-6:30pm S249 11/16/11 2 Outline Speech stimulus / Acoustic signal Relationship between stimulus & perception Stimulus dimensions of speech perception Cognitive dimensions of speech perception Speech perception & the brain 11/16/11 3 Speech stimulus

Speech Enhancement Speech Recognition Speech UI Dialog 10s of 1000 hr speech 10s of 1,000 hr noise 10s of 1000 RIR NEVER TRAIN ON THE SAME DATA TWICE Massive . Spectral Subtraction: Waveforms. Deep Neural Networks for Speech Enhancement Direct Indirect Conventional Emulation Mirsamadi, Seyedmahdad, and Ivan Tashev. "Causal Speech

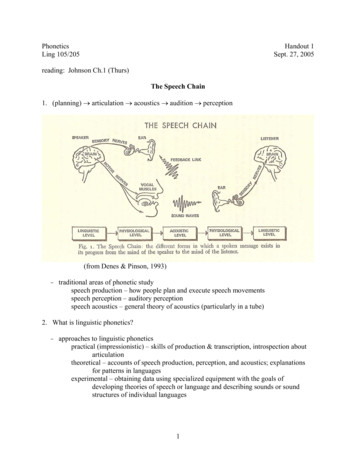

The Speech Chain 1. (planning) articulation acoustics audition perception (from Denes & Pinson, 1993) -traditional areas of phonetic study speech production – how people plan and execute speech movements speech perception – auditory perception speech acoustics – general theory of acoustics (particularly in a tube) 2.

read speech nize than humans speaking to humans. Read speech, in which humans are reading out loud, for example in audio books, is also relatively easy to recognize. Recog-conversational nizing the speech of two humans talking to each other in conversational speech, speech for example, for transcribing a business meeting, is the hardest.

literary techniques, such as the writer’s handling of plot, setting, and character. Today the concept of literary interpretation frequently includes questions about social issues as well.Both kinds of questions are included in the chart that begins at the bottom of the page. Often you will find yourself writing about both technique and social issues. For example, Margaret Peel, a student who .