Combining Geometric Nonlinear Control With Reinforcement Learning

Combining Geometric Nonlinear Control with Reinforcement Learning-Enabled Control
Tyler Westenbroek, Dept. EECS, University of California, Berkeley
Adapted for EECS 290-005, February 24, 2021

Geometric Nonlinear Control
- Main idea: exploit underlying structures in the system to systematically design feedback controllers
- Explicitly connects ‘global’ and ‘local’ system structures
- Gives fine-grained control over system behavior
- Amenable to formal analysis
- Difficult to learn to exploit non-parametric uncertainties

Deep Reinforcement Learning
- Main idea: sample system trajectories to find (approximately) optimal feedback controller
- ‘Discovers’ connection between global and local structure
- Automatically generates complex behaviors, but requires reward shaping
- Effectively handles non-parametric uncertainty
- Can require large amounts of data
Figure credits: [Levine et al.] (IJRR 2020), [OpenAI] (2019)

Motivating Questions
- Can we design local reward signals with global structural information ‘baked in’ using geometric control?
- Can we use these structures to provide correctness and safety guarantees for the learning?
- Does reinforcement learning implicitly take advantage of these structures?
- What structures make a system ‘easy’ to control?

Thesis Proposal
- Part 1: Overcome non-parametric uncertainty by combining RL and geometric control
(Diagram: MB controller plus learned correction)

Tyler’s PhD research
- Part 1: Overcome non-parametric uncertainty by combining RL and geometric control
- Example: learning a stable walking gait with 20 seconds of data
- Use structures from geometric control as a ‘template’ for the learning
(Diagram: MB controller plus learned correction)

Project Flow
- Part 1: Overcome non-parametric uncertainty by combining RL and geometric control (diagram: MB controller plus learned correction)
- Part 2: Provide correctness and safety guarantees for specific learning algorithms (figure: high-probability tracking tube)
- Part 3: Future work:
  - What makes a reward signal difficult to learn from?
  - What makes a system fundamentally difficult to control?
  - Where should geometric control be used in the long run?

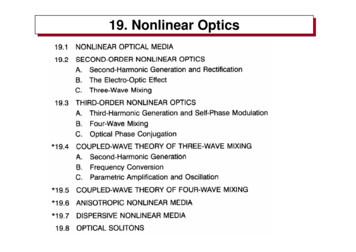

Part 1 Outline
- Steps in design process
- Example control architectures
  - Feedback Linearization
  - Control Lyapunov Functions
  - Other architectures
- Trade-offs with ‘Model-based’ RL

Steps in Design Process
- Step 1: Choose geometric control architecture which produces desired global behavior (e.g. feedback linearizing controller)
- Step 2: Augment the nominal controller with a learned component: [...] (learned augmentation)
- Step 3: Formulate reward which captures desired local behavior; minimize loss with RL

Feedback Linearization

Goal: Output Tracking
- Consider the system [...] with the state [...], the input [...], and the output [...]
- Goal: track any smooth reference [...] with one controller

Calculating a Linearizing Controller
- For the time being assume [...].
- To obtain a direct relationship between the inputs and outputs we differentiate [...]:
- Now if [...] for each [...] then the controller [...] yields [...]
- If this is zero the controller is undefined

Calculating a Linearizing Controller
- Now if [...] then we differentiate a second time and obtain an expression of the form [...]
- Now if [...] for each [...] then the control law [...] yields [...]

Calculating a Linearizing Controller
- In general, we can keep differentiating until the input appears: [...]
- At this point we can apply the control [...] which yields [...]
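The equations on these slides did not survive extraction. As a hedged reconstruction, the derivation below uses the standard textbook single-input, single-output setting; the symbols f, g, h, v, the Lie-derivative notation, and the relative degree \gamma are assumptions and may differ from the notation used in the talk.

```latex
% Assumed setting (standard form, not recovered from the slides)
\dot{x} = f(x) + g(x)\,u, \qquad y = h(x)

% Differentiate the output once
\dot{y} = L_f h(x) + L_g h(x)\,u

% If L_g h(x) \neq 0 for each x, the controller below linearizes the output
u = \frac{1}{L_g h(x)}\bigl(-L_f h(x) + v\bigr)
\;\Longrightarrow\; \dot{y} = v

% If L_g h \equiv 0, differentiate a second time
\ddot{y} = L_f^{2} h(x) + L_g L_f h(x)\,u

% In general, keep differentiating until the input appears (relative degree \gamma)
y^{(\gamma)} = L_f^{\gamma} h(x) + L_g L_f^{\gamma-1} h(x)\,u

u = \frac{1}{L_g L_f^{\gamma-1} h(x)}\bigl(-L_f^{\gamma} h(x) + v\bigr)
\;\Longrightarrow\; y^{(\gamma)} = v
```

The controller is undefined wherever the coefficient multiplying u vanishes, which matches the warning on the earlier slide.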

‘Inverting’ the Dynamics
- Take time derivatives of outputs to obtain an input-output relationship of the form [...]
- Applying the control law [...] yields [...]
(Annotation: vector relative degree)

Normal Form
- Choose the outputs and their derivatives as new states for the system: [...]
- If [...] we can ‘complete the basis’ by appropriately selecting extra variables: [...]
- Output coordinates: can track [...] using linear control
- Remaining coordinates (zero dynamics): may become unstable!
- (System is minimum-phase if these are asymptotically stable)

Zero Dynamics
- We refer to the un-driven dynamics [...] as the zero dynamics
- We say that the overall control system is minimum-phase if the zero dynamics are asymptotically stable
- We say that the system is non-minimum-phase if the zero dynamics are unstable
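A minimal sketch of why the zero dynamics matter, assuming a hypothetical two-state normal form: a linearized output y' = v and one internal state eta' = a*eta + y. The system, the gain k, and the function name `simulate_normal_form` are illustrative assumptions, not taken from the slides. The same linear controller drives y to zero in both cases, but the internal state only stays bounded when a < 0.

```python
def simulate_normal_form(a, k=2.0, y0=1.0, eta0=0.5, dt=1e-3, t_final=10.0):
    """Euler-simulate y' = v, eta' = a*eta + y under the linear feedback v = -k*y.

    Hypothetical toy system: `a` sets the stability of the zero dynamics
    eta' = a*eta (the internal dynamics when the output is held at zero).
    """
    y, eta = y0, eta0
    for _ in range(int(round(t_final / dt))):
        v = -k * y  # linear control acting on the linearized output
        # Tuple assignment: both updates use the values from the current step.
        y, eta = y + dt * v, eta + dt * (a * eta + y)
    return y, eta


y_mp, eta_mp = simulate_normal_form(a=-1.0)    # minimum-phase case
y_nmp, eta_nmp = simulate_normal_form(a=+1.0)  # non-minimum-phase case
print("minimum-phase:     y =", y_mp, " eta =", eta_mp)
print("non-minimum-phase: y =", y_nmp, " eta =", eta_nmp)
```

In both runs the output converges, so looking at the tracking error alone would hide the divergence of the internal state in the second case.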

Tracking Desired Outputs
- To track the desired output [...] we apply [...] (feedback term plus feedforward term)
- If we design [...] such that [...] is Hurwitz then this control law drives [...] exponentially quickly
- However, the zero dynamics may not stay stable!
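A minimal sketch of the feedback-plus-feedforward tracking law, assuming a hypothetical unit pendulum theta'' = -sin(theta) + u with output y = theta (relative degree two, no zero dynamics). The plant, the gains k1 and k2, and the reference y_d(t) = sin(t) are illustrative assumptions. The gains are chosen so that the error dynamics e'' + k2 e' + k1 e = 0 are Hurwitz.

```python
import math


def simulate_tracking(k1=4.0, k2=4.0, dt=1e-3, t_final=10.0):
    """Track y_d(t) = sin(t) with a feedback linearizing controller.

    Hypothetical plant: theta'' = -sin(theta) + u, output y = theta.
    Returns the tracking error y - y_d at the final time.
    """
    theta, omega, t = 1.0, 0.0, 0.0  # initial error of 1 rad
    for _ in range(int(round(t_final / dt))):
        yd, yd_dot, yd_ddot = math.sin(t), math.cos(t), -math.sin(t)
        # Virtual input: feedforward term plus linear feedback on the error.
        v = yd_ddot - k1 * (theta - yd) - k2 * (omega - yd_dot)
        # Linearizing controller: cancel the nonlinearity, then inject v.
        u = math.sin(theta) + v
        theta_ddot = -math.sin(theta) + u
        theta, omega = theta + dt * omega, omega + dt * theta_ddot
        t += dt
    return theta - math.sin(t)


print("final tracking error:", simulate_tracking())
```

With an exact model the error decays exponentially; the small residual comes from the Euler discretization of the reference.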

Model Mismatch
- Suppose we have an approximate dynamics model: [...]
- Why not just learn the forward dynamics? The learned term that must be inverted may be singular!

Directly Learning the Linearizing Controller
- We know the linearizing controllers are of the form [...]
- There is a “gap” between the two controllers: [...]
- To overcome the gap we approximate [...]
Reference: “Feedback linearization for uncertain systems via RL” [WFMAPST] (2020)

Penalize Deviations from Desired Linear Behavior
- We want to find a set of learned parameters such that [...]
- Thus, we define the point-wise loss [...]
- We then define the optimization problem [...] (taken over a distribution of states and a distribution of virtual inputs)

Solutions to the Problem
- Theorem [1]: Assume that the learned controller is of the form [...], where [...] and [...] are linearly independent sets of features. Then the optimization problem is strongly convex.
- Corollary: Further assume that the learned controller matches the exact linearizing controller for some feasible parameter [...]. Then [...] is the unique optimizer for [...].
- Remark: There are many known bases which can recover any continuous function up to a desired accuracy (e.g. radial basis functions).

Discrete-Time Approximations with Reinforcement Learning
- In practice, we use a discretized version of the reward as a running cost in an RL problem: [...]
- Uses a finite-difference approximation to [...]
- Gaussian noise added for exploration, enables use of policy gradient algorithms
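A minimal sketch of this idea on a hypothetical scalar plant, not the setup used in the talk. Assumptions: the true dynamics are x' = -2 sin(x) + 2u with y = x, the learned controller is u = theta[0]*sin(x) + theta[1]*v (linear in its parameters), and the update is a score-function policy gradient step with the noise-free cost at the same sample as a baseline. That baseline is a simplification for variance reduction. The learner only sees finite-difference estimates of the output derivative, never the model.

```python
import math
import random


def plant_step(x, u, dt):
    """One Euler step of the hypothetical 'true' plant x' = -2 sin(x) + 2 u."""
    return x + dt * (-2.0 * math.sin(x) + 2.0 * u)


def running_cost(x, v, u, dt):
    """Squared deviation of the finite-difference output derivative from v."""
    y_dot = (plant_step(x, u, dt) - x) / dt  # finite-difference estimate (y = x)
    return (y_dot - v) ** 2


def train(steps=20000, lr=0.005, sigma=0.1, dt=0.01, seed=0):
    """Policy gradient on the linearization loss; returns learned parameters."""
    rng = random.Random(seed)
    theta = [0.0, 0.0]
    for _ in range(steps):
        x = rng.uniform(-math.pi, math.pi)   # sampled state
        v = rng.uniform(-1.0, 1.0)           # sampled virtual input
        feats = (math.sin(x), v)
        u_mean = theta[0] * feats[0] + theta[1] * feats[1]
        w = rng.gauss(0.0, 1.0)
        u = u_mean + sigma * w               # Gaussian exploration noise
        # Cost relative to a baseline (noise-free action at the same sample).
        advantage = running_cost(x, v, u, dt) - running_cost(x, v, u_mean, dt)
        for i in range(2):
            # Gradient of log N(u; u_mean, sigma^2) w.r.t. theta[i] is (w / sigma) * feats[i].
            theta[i] -= lr * advantage * (w / sigma) * feats[i]
    return theta


theta = train()
print("learned parameters:", theta)
```

For this toy plant the exact linearizing controller is u = sin(x) + 0.5*v, so the learned parameters should settle near (1.0, 0.5), up to noise from the stochastic updates.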

12D Quadrotor Model
- Nominal dynamics model: [...]
- Choose outputs: [...]
- After feedback linearization: [...]

Improvement After 1 Hour of Data
Reference: “Proximal Policy Optimization Algorithms” [Schulman et al.] (2017)

Effects of Model Accuracy

7-DOF Baxter Arm: After 1 Hour of Data

Learning a Stable Walking Gait in 20 Minutes
- Feedback linearization is commonly used to design stable walking gaits for bipedal robots
- Outputs are carefully designed so that the zero dynamics generate a stable walking gait
References: “Improving I-O Linearizing Controllers for Bipedal Robots Via RL” [CWAWTSS] (2020); “Continuous Control With Deep Reinforcement Learning” [Lillicrap et al.] (2015)

Control Lyapunov Functions

Generalized ‘Energy’ Functions
- Consider the plant [...]
- We say that the positive definite function [...] is a control Lyapunov function (CLF) for the system if [...] (the bound is a user-specified energy dissipation rate)

Learning Min-norm Stabilizing Controllers
- Given a control Lyapunov function [...], the associated min-norm controller for the plant is given by [...]
- To learn the min-norm controller we want to solve: [...]
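A minimal sketch of a model-based min-norm controller, assuming a hypothetical scalar plant x' = f(x) + g(x)u with f(x) = x - x^3, g(x) = 1, the CLF V(x) = x^2/2, and the dissipation requirement V' <= -c V. For a single affine constraint the min-norm problem has a well-known closed form: apply no control when the constraint already holds, otherwise apply the smallest input that meets it with equality. The plant, V, c, and the function names are illustrative assumptions.

```python
def f(x):
    return x - x ** 3  # hypothetical drift: unstable near the origin


def g(x):
    return 1.0  # hypothetical input gain


def min_norm_control(x, c=1.0):
    """Smallest |u| such that LfV + LgV*u <= -c*V for V(x) = x^2 / 2."""
    V = 0.5 * x * x
    LfV = x * f(x)   # dV/dx * f(x)
    LgV = x * g(x)   # dV/dx * g(x)
    a = LfV + c * V  # constraint violation when u = 0
    if a <= 0.0 or LgV == 0.0:
        return 0.0   # constraint already satisfied: do nothing
    return -a / LgV  # scalar case of u = -a * LgV / (LgV * LgV)


def simulate(x0=0.5, dt=1e-3, t_final=10.0):
    x = x0
    for _ in range(int(round(t_final / dt))):
        x += dt * (f(x) + g(x) * min_norm_control(x))
    return x


print("u at x=3.0:", min_norm_control(3.0))  # drift already dissipates energy
print("u at x=0.5:", min_norm_control(0.5))
print("x(10) from x(0)=0.5:", simulate())
```

The controller is lazy by design: far from the origin the cubic term already dissipates energy, so the input is zero there.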

Penalizing the Constraint
- To remove the constraint we add a penalty term to the cost: [...] (with a scaling parameter and a penalty function)
- If the controller is linear in its parameters, then under the additional assumption that [...] is strongly convex, [...]
Reference: “Learning Min-norm Stabilizing Control Laws for Systems with Unknown Dynamics” [WCASS] (CDC 2020, To Appear)

Learning the ‘Forward’ Terms
- Other approaches estimate the terms in the constraint [1][2]: [...]
- Then incorporate into QP: [...]
- Advantages of our approach:
  - Faster update rates for learned controller
  - Learned controller always ‘feasible’
  - Does not require implicit ‘inversion’ of learned terms
References: [Choi et al.] (2020), [Taylor et al.] (2019)

Double Pendulum: 4 Minutes of Data

Learning a Stable Walking Controller With 20 Seconds of Data
(Panels: Nominal Controller, Learned Controller)
Reference: “Soft Actor-Critic: Off-Policy Maximum Entropy Deep RL with a Stochastic Actor” [Haarnoja et al.] (2018)

Specific Architectures
- Control Barrier Functions [Ames et al.] (2019)
- Time Varying CLFs [Kim et al.] (2019)
- Geometric Controllers on [...] [Lee et al.] (2010)

Trade-offs With ‘Model-Based’ RL
- Mb-RL: learn a neural network dynamics model from scratch, use for online planning or training controllers offline with model-free RL [Nagabandi et al.] (2018)
- Main advantage of Mb-RL: can be used when ‘ideal’ control architecture is not known
- Advantages of our approach: fine-grained control over system behavior

Key Takeaways
- Connecting local and global geometric structure allows us to efficiently overcome model uncertainty
- Learning a forward dynamics model may be incompatible with geometric control

Relevant Papers
- “Feedback Linearization for Uncertain Systems via Reinforcement Learning” [WFMAPST] (ICRA 2020)
- “Improving Input-Output Linearizing Controllers for Bipedal Robots Via Reinforcement Learning” [CWAWTSS] (L4DC 2020)
- “Learning Min-norm Stabilizing Control Laws for Systems with Unknown Dynamics” [WCASS] (IEEE CDC 2020, Dec. 2020)
- “Learning Feedback Linearizing Controllers with Reinforcement Learning” [WFPMST] (IJRR, In Prep)
- “Directly Learning Safe Controllers with Control Barrier Functions” (TBD)
- “Learning Time-based Stabilizing Controllers for Quadrupedal Locomotion” (TBD)

Current Work / Extensions
- Can we use model-free policy optimization to overcome model mismatch in high dimensions for specific control architectures?
- Can we use geometric control to systematically design rewards which are ‘easy’ to optimize over, and achieve the desired objective?
(Diagram labels: Control Architectures: Feedback Linearization, CLFs, CBFs, Other; Combining Learning and Adaptive Control; Choice of Learning Algorithm)

Part 2 Outline
- Goal: show that we can safely learn a linearizing controller online using standard RL algorithms
- Provide probabilistic tracking bounds for the overall learning system
  - Simple policy gradient algorithms
  - More sophisticated algorithms (Future Work)
- Comparison with ‘model-based’ adaptive control

Analysis and Design Steps
- Step 1: Use our loss function from before to design an ‘ideal’ CT update rule
- Step 2: Model DT model-free policy gradient algorithms as noisy discretization of the CT process
- Step 3: Provide probabilistic safety guarantees for the overall learning system
(Figure: high-probability tracking tube)

Modeling Learning as CT Process
- Recall the normal form: [...]
- Goal: track a desired trajectory while improving estimated parameters
- Apply estimated controller [...]
- Assumption: Controller is linear in its parameters: [...]
- Assumption: There exists a unique set of parameters [...]

Modeling Online Learning as CT Process
- Goal: track [...] while improving estimated parameters [...] and using the estimated controller [...]
- Assumption 1: Controller is linear in parameters: [...]
- Assumption 2: There exists a unique [...] s.t. [...]
- Define: [...] (least-squares loss from before)
- We apply the ‘ideal’ update [...]
- Under a persistency of excitation condition we show [...] exponentially quickly

Modeling Online Learning as CT Process
- Recall the normal form: [...]
- Goal: track a desired trajectory while improving estimated parameters
- Apply estimated controller [...]
- Tracking error dynamics: [...]
- CT reward function: [...]
- Ideal CT update rule: [...]

Adaptive Control Approach
- Goal: track a desired trajectory while improving estimated parameters
- Apply estimated tracking controller: [...]
- Tracking error dynamics: [...]
- CT reward: [...]

Persistency of Excitation
- We say that [...] is persistently exciting if [...] for some [...]
- Under this condition we have [...] exponentially quickly as [...]
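The condition on this slide was lost in extraction. A minimal sketch, assuming the usual definition: a regressor phi(t) is persistently exciting if the windowed Gram matrix, the integral of phi(s) phi(s)^T over [t, t+T], is bounded below by alpha*I for some T, alpha > 0 and all t. The two-dimensional regressors and helper names below are hypothetical, and checking a handful of window start times is only a numerical spot check, not a proof.

```python
import math


def gram(phi, t0, T, n=2000):
    """Midpoint-rule entries (a, b, d) of the 2x2 Gram matrix [[a, b], [b, d]]."""
    dt = T / n
    a = b = d = 0.0
    for i in range(n):
        p1, p2 = phi(t0 + (i + 0.5) * dt)
        a += p1 * p1 * dt
        b += p1 * p2 * dt
        d += p2 * p2 * dt
    return a, b, d


def min_eig(a, b, d):
    """Smallest eigenvalue of the symmetric matrix [[a, b], [b, d]]."""
    return 0.5 * (a + d) - math.sqrt((0.5 * (a - d)) ** 2 + b * b)


def excitation_level(phi, T, starts):
    """Worst-case smallest Gram eigenvalue over the given window start times."""
    return min(min_eig(*gram(phi, t0, T)) for t0 in starts)


def rich(t):
    return (math.sin(t), math.cos(t))        # spans both directions over time


def poor(t):
    return (math.sin(t), 2.0 * math.sin(t))  # always points along (1, 2)


starts = [0.0, 0.7, 1.9, 3.3]
print("rich regressor:", excitation_level(rich, 2 * math.pi, starts))
print("poor regressor:", excitation_level(poor, 2 * math.pi, starts))
```

The first regressor gives a strictly positive level, while the second gives a level of essentially zero because one parameter direction is never excited.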

Analyzing DT RL Algorithms
- On the interval [...] we apply the noisy control [...]
- and apply noisy parameter updates of the form [...] (annotated terms: sampling period, learning rate, noisy estimate of [...])

Implementable DT Stochastic Approximations
- Main idea: model standard policy gradient updates as (noisy) discretization of the ideal parameter update
- To explore the dynamics, we apply the control [...]
- This leads to a discrete time process of the form [...] (annotated terms: learning rate, estimate for gradient of [...])
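A minimal sketch of this modeling step, assuming a hypothetical least-squares loss whose ideal continuous-time update is the gradient flow theta' = -rate * phi(t) phi(t)^T (theta - theta_star). The regressor phi(t) = (sin t, cos t), the target theta_star, and the additive Gaussian gradient noise are illustrative assumptions, not the estimator analyzed in the talk. The discrete-time process is an Euler step of the flow with a noisy gradient.

```python
import math
import random


def run_updates(theta_star=(1.0, -0.5), rate=2.0, dt=0.01,
                noise_std=0.2, t_final=50.0, seed=0):
    """Noisy Euler discretization of a least-squares gradient flow.

    Returns the final parameter error norm ||theta - theta_star||.
    """
    rng = random.Random(seed)
    theta = [0.0, 0.0]
    t = 0.0
    for _ in range(int(round(t_final / dt))):
        phi = (math.sin(t), math.cos(t))  # persistently exciting regressor
        residual = sum(p * (th - ts) for p, th, ts in zip(phi, theta, theta_star))
        for i in range(2):
            # Exact gradient component plus zero-mean estimation noise.
            grad_i = phi[i] * residual + noise_std * rng.gauss(0.0, 1.0)
            theta[i] -= dt * rate * grad_i  # sampling period times learning rate
        t += dt
    return math.hypot(theta[0] - theta_star[0], theta[1] - theta_star[1])


print("final error, noisy updates:     ", run_updates())
print("final error, noise-free updates:", run_updates(noise_std=0.0))
```

Without noise the error decays to numerical zero; with noise it settles into a small ball whose size shrinks with the step size, which is the behavior a high-probability tracking bound has to capture.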

‘Vanilla’ Policy Gradient
- As a first step in analysis, we consider the simple policy gradient estimator: [...]

Convergence of ‘Vanilla Policy Gradient’
- Theorem: For each [...] put [...]. Further assume the PE condition holds. Then there exists [...] such that [...], and with probability [...]

Double Pendulum
(Panels: Tracking With Learning, Tracking Without Learning)

Step-size Selection
- Many convergence results from the ML literature require: [...]
- In a forthcoming article, we will show that the learning ‘converges’ if we take [...]

Trade-offs with Model-Based Adaptive Control
- Advantages:
  - Can deal with non-parametric uncertainty
  - More freedom in choosing function approximator
- Disadvantages:
  - Generally slower
  - Loss of deterministic guarantees

L1 Adaptive Control
- Model unknown nonlinearities as a disturbance to be identified: [...]
- Estimate [...] with [...]; estimate [...] with [...]
- Control size of tracking error by using fast adaptation for [...]

(Near) Future Work: More Sophisticated Algorithms
- Reinforcement learning: baselining, advantage estimation, data reuse/off-policy methods
- Adaptive control: large batches/hybrid updates, filtering theory
- Reward clipping, gradient rescaling
- Bound effects of reconstruction error

Relevant Papers
- “Adaptive Control for Linearizable Systems Using On-Policy Reinforcement Learning” [WMFPTS] (CDC 2020, To Appear)
- “Reinforcement Learning for the Adaptive Control of Linearizable Systems” [WSMFTS] (Transactions on Automatic Control, In Prep)
- “Data-Efficient Off-Policy Reinforcement Learning for Nonlinear Adaptive Control” [WSMFTS] (TBD)

Where do these techniques fit in?
- Can we use geometric control to partially reduce the complexity of learning more difficult tasks?
- Can we combine our approach with techniques such as meta-learning? [Finn et al.] (2017)
- Can we automatically synthesize rewards for families of tasks?

Understanding Geometric ‘Templates’
- So far: use geometric structures as ‘templates’ for learning
- Can we formalize what makes a local reward signal ‘compatible’ with the global structure of the problem?
- Can we quantify the difficulty of an RL problem in terms of how much global information the reward contains?
- Does reinforcement learning implicitly take advantage of the structures we’ve identified?
- Can we apply general structural results from geometric control?

What makes a system difficult to control?
- Control theory has many colloquial ways to describe what makes a system difficult to control
- Can we use sample complexity to make these notions rigorous? [1][2]
- Can we use ideas from geometric control to separate out different ‘complexity classes’ of problems? For example, minimum-phase versus non-minimum-phase?
[1] Dean, Mania, Matni, Recht, Tu (2018)
[2] Fazel, Ge, Kakade, Mesbahi (2018)

Non-Minimum Phase Tracking Control
- Recall the normal form: [...]
- When zero dynamics are NMP we cannot ‘forget’ them
- Example: steering a bike (“counter-steering”)
- Can we learn these behaviors?
- Can we quantify what makes it difficult to learn these behaviors?
- How much preview (look-ahead window) do we need to learn?
- Can we learn safely?
Reference: [Devasia et al.] (1999)

Questions?
