Generalized Probabilistic LR Parsing of Natural Language

The parsing algorithm optimizes the posterior probability and outputs a scene representation in a "parsing graph", in a spirit similar to parsing sentences in speech and natural language. The algorithm constructs the parsing graph and re-configures it dynamically using a set of reversible Markov chain jumps. This computational framework

Model List shows the list of parsing models and allows a user with sufficient permission to edit them. Add New Model allows creation of a new parsing model. Setup allows modification of the license and email alerts. The file parsing history shows details on parsing. The list may be sorted by each column. 3-4. Email Setup

the parsing anticipating network (yellow) which takes the preceding parsing results $S_{t-4:t-1}$ as input and predicts future scene parsing. By providing pixel-level class information (i.e. $S_{t-1}$), the parsing anticipating network benefits the flow anticipating network to enable the latter to semantically distinguish different pixels

operations like information extraction, etc. Multiple parsing techniques have been presented until now. Some of them are unable to resolve the ambiguity that arises in text corpora. This paper compares different models from two parsing strategies: statistical parsing and dependency parsing.

Concretely, we simulate jabberwocky parsing by adding noise to the representation of words in the input and observe how parsing performance varies. We test two types of noise: one in which words are replaced with an out-of-vocabulary word without a lexical representation, and a second in which words are replaced with others (with
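The first noise type, replacing words with an out-of-vocabulary token, might be sketched as below. This is a minimal illustration only: the function name `replace_with_oov`, the `<unk>` token, and the `rate` and `seed` parameters are assumptions made for the example and do not come from the excerpt. Only the first noise type is shown.

```python
import random
from typing import List


def replace_with_oov(words: List[str], rate: float,
                     oov_token: str = "<unk>", seed: int = 0) -> List[str]:
    """Return a copy of `words` in which each word is independently
    replaced by `oov_token` with probability `rate`.

    All names here are hypothetical; the excerpt does not say how the
    noise was actually implemented.
    """
    rng = random.Random(seed)  # local generator, so results are repeatable
    return [oov_token if rng.random() < rate else w for w in words]


if __name__ == "__main__":
    sentence = "the slithy toves did gyre".split()
    # Sweep the noise rate and inspect the corrupted input each time.
    for rate in (0.0, 0.5, 1.0):
        print(rate, replace_with_oov(sentence, rate))
```

Sweeping `rate` from 0 to 1 and re-running a parser on each corrupted input would give the performance-versus-noise curve the excerpt describes.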

deterministic polynomial-time algorithms. However, as argued next, we can gain a lot if we are willing to take a somewhat non-traditional step and allow probabilistic verification procedures. In this primer, we shall survey three types of probabilistic proof systems, called interactive proofs, zero-knowledge proofs, and probabilistic checkable .

non-Bayesian approach, called Additive Regularization of Topic Models. ARTM is free of redundant probabilistic assumptions and provides a simple inference for many combined and multi-objective topic models. Keywords: Probabilistic topic modeling · Regularization of ill-posed inverse problems · Stochastic matrix factorization · Probabilistic .

A Model for Uncertainties: data is probabilistic; queries are formulated in a standard language; answers are annotated with probabilities. This talk: Probabilistic Databases. Probabilistic databases: Long History: Cavallo & Pitarelli, 1987; Barbara, Garcia-Molina, Porter, 1992; Lakshmanan, Leone, Ross & Subrahmanian, 1997

COMPARISON OF PARSING TECHNIQUES FOR THE SYNTACTIC PATTERN RECOGNITION OF SIMPLE SHAPES. T. Bellone, E. Borgogno, G. Comoglio. Parsing is then the syntax analysis: this is an analogy between the hierarchical (treelike) structure of patterns and the syntax of language.

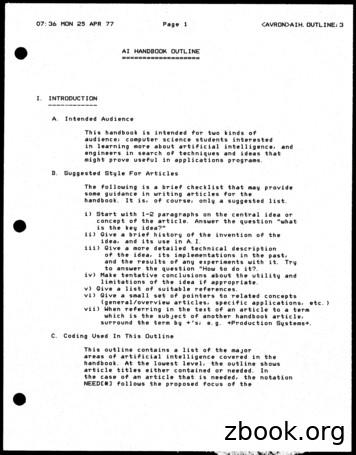

Main ideas (parsing, representation) comparison of different techniques. mention ELIZA, PARRY. Include Baseball, Sad Sam, SIR and Student articles here, see Winograd's Five Lectures, Simmon's CACM articles. B. Representation of Meaning (see section VII — HIP) C. Syntax and Parsing Techniques 1. overviews a. formal grammars b. parsing .

carve off next. ‘Partial parsing’ is a cover term for a range of different techniques for recovering some but not all of the information contained in a traditional syntactic analysis. Partial parsing techniques, like tagging techniques, aim for reliability and robustness in the face of the vagaries of natural text, by sacrificing

ture settings in comparison with other transition-based AMR parsers, which shows promising future applications to AMR parsing using more refined features and advanced modeling techniques. Also, our cache transition system is general and can be applied for parsing to other graph structures by ad-

extraction that illustrates the use of ESG and PAS. These main sections are followed by sections on use in Watson, evaluation, related work, and conclusion and future work. SG parsing The SG parsing system is divided into a large language-universal shell and language-specific grammars for English

Minimalist Program (MP). Computation from a parsing perspective imposes special constraints. For example, in left-to-right parsing, the assembly of phrase structure must proceed through elementary tree composition, rather than using the generative operations MERGE and MOVE d

tored parsing model, and I develop for the new grammar formalism a scoring model to resolve parsing ambiguities. I demonstrate the flexibility of the new model by implement- . phrase structure tree, but phrase

then resume parsing. In LR parsing:

- Scan down the stack until a state s with a goto on a particular nonterminal A is found
- Discard zero or more input symbols until a symbol a is found that can follow A
- Stack the state GOTO(s, A) and resume normal parsing
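The three recovery steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the implementation from the source slides: the function name `panic_mode_recover`, the encoding of the GOTO table as a dictionary keyed by `(state, nonterminal)`, and the `follow` dictionary of FOLLOW sets are all hypothetical choices made for the example.

```python
from typing import Dict, List, Optional, Set, Tuple


def panic_mode_recover(
    stack: List[int],
    tokens: List[str],
    pos: int,
    goto: Dict[Tuple[int, str], int],
    follow: Dict[str, Set[str]],
    nonterminal: str,
) -> Optional[Tuple[List[int], int]]:
    """Panic-mode recovery for an LR parser (illustrative sketch).

    Returns the repaired state stack and the input position at which
    normal parsing can resume, or None if recovery is impossible.
    """
    stack = list(stack)  # work on a copy; the caller's stack is untouched

    # Step 1: scan down the stack until a state s has a goto on A.
    while stack and (stack[-1], nonterminal) not in goto:
        stack.pop()
    if not stack:
        return None
    s = stack[-1]

    # Step 2: discard zero or more input symbols until one can follow A.
    while pos < len(tokens) and tokens[pos] not in follow[nonterminal]:
        pos += 1
    if pos >= len(tokens):
        return None

    # Step 3: stack GOTO(s, A); the caller resumes normal parsing at `pos`.
    stack.append(goto[(s, nonterminal)])
    return stack, pos


if __name__ == "__main__":
    goto_table = {(0, "stmt"): 5}      # hypothetical GOTO entries
    follow_sets = {"stmt": {";"}}      # hypothetical FOLLOW(stmt)
    result = panic_mode_recover(
        [0, 2, 7], ["x", "+", "+", ";", "y"], 1,
        goto_table, follow_sets, "stmt",
    )
    print(result)
```

In this sketch the nonterminal A is supplied by the caller. A real parser would typically try a fixed set of major nonterminals, such as statements or blocks, as recovery targets.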

Address Standardization and Parsing API User’s Guide. NetAddress v5.1 for .NET. Introduction: NetAddress for .NET allows you to quickly and easily build address validation, standardization and parsing into your custom applicati

[Fragment of an LR parsing table; some cells hold multiple actions, e.g. "re6,sh6" and "re7,sh6".] Figure 2.2. LR parsing table with multiple entries. Computational Linguistics, Volume 13, Numbers 1-2, January-June 1987. Masaru Tomita, An Efficient Augmented-Context-Free Parsing Algorithm

Parsing Features of Hebrew Verbs. Parsing for a Hebrew verb always specifies the following:

- Root: רבד
- Stem: Piel
- Conjugation: Perfect

Parsing includes the following, if present:

- Person, Gender, and Number: 2ms (presence depends upon the conjugation)
- Prefixed words (e.g., conjunction ו, interrogative)

This app was . Bible Reading and Bible Study with the Olive Tree Bible App from Olive Tree Bible Software on your iPhone, iPad, Android, Mac, and Windows. Greek verb parsing tool . parsing information like the Perseus. I am looking for an online dictionary for Ancient Greek. I am mostly interested in Koine, .

is able to cover nearly all sentences contained in the Penn Treebank (Marcus et al., 1993) using a small number of unconnected memory elements. But this bounded-memory parsing comes at a price. The HHMM parser obtains good coverage within human-like memory bounds only by pursuing an 'optionally arc-eager' parsing strategy,

approach and the XML format it is very easy to support other import or export formats varying across applications. 3 On Parsing Evaluation. Parsing evaluation is a very topical problem in the NLP field. A common way to estimate a parser's quality is to compare its output (usually in the form of syntactic trees) to gold standard data available

the fashion non-initiate, also have many occurrences - leggings (545), vest (955), cape (137), jumper (758), wedges (518), and romper (164), for example. 3. Clothing parsing. In this section, we describe our general technical approach to clothing parsing, including formal definitions of the problem and our proposed model. 3.1. Problem .

based on a scene parsing approach applied to faces. Warrell and Prince argued that the scene parsing approach is advantageous because it is general enough to handle unconstrained face images, where the shape and appearance of features vary widely and relatively rare semantic label classes exist, such as moustaches and hats.

NLP Course - Parsing - S. Aït-Mokhtar (Naver Labs Europe). Grammatical function ≠ syntactic category. Same syntactic category: proper noun (PROPN). Distinct grammatical functions: subject (nsubj) and modifier (nmod).

huge variation in the sketch space (a), when resized to a very small scale (b), they share strong correlations respectively. Based on the proposed shapeness estimation, we present a three-stage cascade framework for offline sketch parsing, including 1) shapeness estimation, 2) shape recognition, and 3) sketch parsing using domain knowledge.

parsing systems with good performance include the systems developed by Collins [13], Stanford parser [14], and Charniak et al. [15], etc. However, there are several challenges faced by the conventional machine learning based approaches for syntactic parsing. First, current parsers usually use a large number of features including both .

context-free grammar defined. A very efficient parsing algorithm was introduced by Jay Earley (1970). This algorithm is a general context-free parser. It is based on the top-down parsing method. It deals with any context-free and ε-free rule format without requiring conversions to Chomsky form, as is often assumed. Unlike LR(k) parsing,

Quasi-Poisson models; Negative-binomial models. 5 Excess zeros: Zero-inflated models; Hurdle models; Example. 6 Wrapup. Generalized linear models. We have used generalized linear models (glm()) in two contexts so far: Loglinear models: the outcome variable is the vector of frequencies y in a table

Keywords: Global-local, Polynomial enrichment, Stable generalized FEM, Generalized FEM, Nonlinear Analysis. 1 Introduction. The Generalized/eXtended Finite Element Method (GFEM) [1, 2] emerged from the difficulties of the FEM to solve cracking problems due to the need for a high degree of mesh refinem

generalized cell complex to refer to a sequential composite of pushouts of “generalized cells,” which are constructed as groupoid-indexed coends, rather than mere coproducts, of basic cells. The dual notion is a generalized

Overview of Generalized Nonlinear Models in R. Linear and generalized linear models. Generalized linear models. Problems with linear models in many applications:

- range of y is restricted (e.g., y is a count, or is binary, or is a duration)
- effects are not additive
- va

Generalized functions will allow us to handle p.d.e.s with such singular source terms. In fact, the most famous generalized function was discovered in physics by Dirac before the analysts cottoned on, and generalized functions are often known as distributions, as a nod to the charge distribution example which inspired them.

free grammar. Based on the Earley parser, an activity parsing and prediction algorithm is proposed. Overall, grammar-based methods have shown effectiveness on tasks that have compositional structures. However, the above grammar-based algorithms (except [32]) ta

Probabilistic Context‐Free Grammars Raphael Hoffmann 590AI, Winter 2009. Outline PCFGs: Inference and Learning Parsing English Discriminative CFGs Grammar Induction.

Probabilistic modeling of NLP: Document clustering, Topic modeling, Language modeling, Part-of-speech induction, Parsing and grammar induction, Word segmentation, Word alignment . K training log-likelihood test log-likelihood 1 -364 3 In this example, four mixtures of Gaussians were gen

Probability and Computing: Randomized Algorithms and Probabilistic Analysis. Michael Mitzenmacher, Eli Upfal. Probability and Computing. Randomization and probabilistic techniques play an important role in modern com .

vides a language for expressing probabilistic polynomial-time protocol steps, a specification method based on a compositional form of equivalence, and a logical basis for reasoning about equivalence. The process calculus is a variant of CCS, with bounded replication and probabilistic polynomial-time expressions allowed in messages and boolean tests.

language for expressing probabilistic polynomial-time protocol steps, a specification method based on a compositional form of equivalence, and a logical basis for reasoning about equivalence. The process calculus is a variant of CCS, with bounded replication and probabilistic polynomial-time expressions allowed in messages and boolean tests.

senting within the model resource bounded, probabilistic computations as well as probabilistic relations between systems and system components. Such models include Probabilistic Polynomial-Time Process Calculus (PPC) [13–15], Reactive Simulatability (RSIM) [16–18], Universally Composable (UC) Security [19],