
IEEE TRANSACTIONS ON MEDICAL IMAGING, VOL. 22, NO. 3, MARCH 2003

Regularization in Tomographic Reconstruction Using Thresholding Estimators

Jérôme Kalifa*, Andrew Laine, and Peter D. Esser

Abstract—In tomographic medical devices such as single photon emission computed tomography or positron emission tomography cameras, image reconstruction is an unstable inverse problem, due to the presence of additive noise. A new family of regularization methods for reconstruction, based on a thresholding procedure in wavelet and wavelet packet (WP) decompositions, is studied. This approach is based on the fact that these decompositions provide a near-diagonalization of the inverse Radon transform and of prior information in medical images. A WP decomposition is adaptively chosen for the specific image to be restored. Corresponding algorithms have been developed for both two-dimensional and full three-dimensional reconstruction. These procedures are fast, noniterative, and flexible. Numerical results suggest that they outperform filtered back-projection and iterative procedures such as ordered-subset-expectation-maximization.

Index Terms—Dyadic wavelet transform, PET, SPECT, tomographic reconstruction, wavelet packets.

I. INTRODUCTION

We are interested in the problem of tomographic reconstruction of images from transmission data, which we call tomographic projections or sinograms.
Although the work presented here has a wide range of applications for various tomographic devices, we will focus on medical images acquired with single photon emission computed tomography (SPECT) and positron emission tomography (PET) cameras.

A slice of an object observed by a tomographic device is represented by a two-dimensional (2-D) discrete image $f[n_1, n_2]$. An estimation of $f$ must be computed with a tomographic reconstruction procedure from sinograms produced by a tomographic device, denoted $Y$, and defined as

$$Y = \mathcal{R}f + W \qquad (1)$$

where $Y$ is an observed image, $W$ is an additive noise, and $\mathcal{R}$ is the discrete Radon transform, which models the tomographic projection process. The discrete Radon transform is derived from its continuous version $\mathcal{R}$, which is equivalent to the X-ray transform in two dimensions and is defined as [1]

$$\mathcal{R}f(\theta, t) = \int_{\mathbb{R}^2} f(x)\,\delta(x_1\cos\theta + x_2\sin\theta - t)\,dx \qquad (2)$$

where $x = (x_1, x_2)$, $\delta$ is the Dirac mass, $\theta \in [0, \pi)$, and $t \in \mathbb{R}$. There are several different ways to define the discrete Radon transform based on the continuous Radon transform [2]. Typically, a line integral along $x_1\cos\theta + x_2\sin\theta = t$ is approximated by a summation of the pixel values inside a strip around this line.

Manuscript received October 24, 2001; revised November 22, 2002. This work was supported in part by Siemens Medical Systems and the Whitaker Foundation. Asterisk indicates corresponding author.
*J. Kalifa is with the Department of Biomedical Engineering, Columbia University, New York, NY 10027 USA.
A. Laine is with the Department of Biomedical Engineering, Columbia University, New York, NY 10027 USA.
P. D. Esser is with the Department of Radiology, Columbia-Presbyterian Medical Center, New York, NY 10027 USA.
Digital Object Identifier 10.1109/TMI.2003.809691

When three-dimensional (3-D) data is processed, we treat it as a series of tomographic projections of translated 2-D slices of the observed object. When necessary, the tomographic projections are transformed via rebinning techniques in order to obtain tomographic projections of 2-D slices: this approach is in general not necessary for SPECT images, but is increasingly common in 3-D PET image acquisition [3].
Thus, the 3-D dataset is written as

$$Y_z = \mathcal{R}f_z + W_z \qquad (3)$$

where $z$ indexes the slices. The noise $W$ is usually modeled as a Gaussian white noise, which is independent of $\mathcal{R}f$, or as Poisson noise, whose intensity at each pixel depends on the intensity of $\mathcal{R}f$.

A tomographic reconstruction procedure incorporates the following steps.

1) Filtered back-projection: The basis for tomographic reconstruction is the identity, in the continuous case,

$$f = \frac{1}{2\pi}\,\mathcal{R}^*(\mathcal{R}f \star h) \qquad (4)$$

where $\star$ denotes a convolution in the variable $t$, $h$ is the one-dimensional (1-D) ramp filter whose Fourier transform satisfies $\hat h(\omega) = |\omega|$ (with the convention $\hat g(\omega) = \int g(t)\,e^{-i\omega t}\,dt$), and the back-projection operator $\mathcal{R}^*$ is the adjoint of $\mathcal{R}$:

$$\mathcal{R}^* g(x) = \int_0^{\pi} g(\theta,\, x_1\cos\theta + x_2\sin\theta)\,d\theta.$$

The filtered back-projection (FBP) algorithm is the application of a discrete operator $\mathcal{R}^+$, which is the discretization of the operator $g \mapsto \frac{1}{2\pi}\mathcal{R}^*(g \star h)$. It can be directly computed with a radial interpolation and a deconvolution by a 1-D filter which is the discretized version of $h$. The application of this filter amplifies the high-frequency components of the tomographic projections $\mathcal{R}f$ along the variable $t$.

2) Regularization: The deconvolution is needed because the Radon transform is a smoothing transform. Consequently, back-projecting in the presence of additive noise is an ill-posed inverse problem: numerically speaking, a direct computation of $\mathcal{R}^+Y$ is contaminated by a large additive noise $\mathcal{R}^+W$, which means that a regularization has to be incorporated in the reconstruction procedure.
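As an illustration of the filtering step of FBP, the following minimal NumPy sketch applies a discretized ramp filter $|\omega|$ along $t$ to each row of a sinogram, optionally multiplied by a low-pass window as in regularized FBP. It is a sketch under stated assumptions, not the implementation used in this work: the sinogram layout (one row per angle), the circular FFT convolution without zero-padding, and the helper names `ramp_filter` and `hann` are hypothetical choices, and the back-projection $\mathcal{R}^*$ itself is omitted.

```python
import numpy as np

def ramp_filter(sinogram, lowpass=None):
    """Filter every projection of a sinogram with the discretized ramp |omega|.

    sinogram: array of shape (n_angles, n_t); row k samples R f(theta_k, t).
    lowpass:  optional callable mapping |omega| (cycles/sample, 0 to 0.5) to
              attenuation factors, as in regularized FBP; None = plain ramp.
    Circular convolution via the FFT is used (no zero-padding) for brevity.
    """
    n_t = sinogram.shape[1]
    abs_freq = np.abs(np.fft.fftfreq(n_t))   # |omega| for each FFT bin
    response = abs_freq.copy()
    if lowpass is not None:
        response = response * lowpass(abs_freq)
    spectrum = np.fft.fft(sinogram, axis=1)  # 1-D FFT along t only
    return np.real(np.fft.ifft(spectrum * response, axis=1))

def hann(abs_freq):
    """Hann low-pass window: 1 at DC, 0 at the Nyquist frequency 0.5."""
    return 0.5 * (1.0 + np.cos(2.0 * np.pi * abs_freq))

# Usage: a constant row is removed; a cosine at 0.125 cycles/sample is scaled by 0.125.
t = np.arange(64)
sino = np.vstack([np.ones(64), np.cos(2 * np.pi * 8 * t / 64)])
plain = ramp_filter(sino)
regularized = ramp_filter(sino, lowpass=hann)
```

The gain grows linearly with frequency, which is exactly why high-frequency noise in $W$ dominates $\mathcal{R}^+W$; the optional window trades that amplification against resolution. A practical implementation would also zero-pad each projection to avoid wrap-around.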

Current approaches for regularization in tomographic reconstruction can be classified into two families:

1) Regularized FBP (RFBP) is a linear filtering technique in the Fourier space, in which the Fourier transform $\hat h$ of the filter is replaced by a filter $\hat h\,\hat\phi$, where $\hat\phi$ is a low-pass filter which attenuates the amplification of high frequencies. RFBP suffers from performance limitations due to the fact that the sinusoids of the Fourier basis are not adapted to represent spatially inhomogeneous data as found in medical images. This has been proven by Donoho [4], who has shown the suboptimality of RFBP to recover piecewise regular signals, such as medical images.

2) Iterative statistical model-based techniques are designed to implement expectation-maximization (EM) and maximum a posteriori (MAP) estimators [5], [6]. In some cases, these approaches can provide an improvement over RFBP, but these estimators suffer from the following drawbacks:
• Computation time. Almost all the corresponding algorithms are too computer-intensive for clinical applications, with the exception of ordered-subset-expectation-maximization (OS-EM) [7], which is an accelerated implementation of an EM estimator. In MAP methods, useful priors usually lead to local maxima, but the computational cost of relaxation methods remains prohibitive.
• Theoretical understanding and justification. EM estimation lacks a theoretical foundation to understand and characterize the estimation error. The theoretical properties of MAP estimators have been more thoroughly studied and are better understood, yet no optimality for a realistic model has been established.
• Convergence. EM estimators are ill-conditioned, in the sense that the corresponding iterative algorithms have to be stopped after a limited number of iterations. Beyond this critical number, the noise may be magnified, and EM and OS-EM converge to a non-maximum-likelihood solution.
The number of iterations must be chosen by the user.

In this paper, a new family of estimation procedures is studied to address these limitations. These techniques are based on a thresholding procedure in a time-frequency decomposition, namely a wavelet or wavelet packet (WP) transform. Section II introduces thresholding estimators in time-frequency decompositions and their application to tomographic reconstruction. Section III explains how the best WP transform is chosen among the variety of possible wavelet and WP representations, using a statistical estimation of the final error. Section IV describes the corresponding fast noniterative tomographic reconstruction algorithm to recover 2-D images. Section V describes how the reconstruction algorithm can be adapted to 3-D data to take advantage of the spatial correlations of the data in the transaxial direction ($z$ axis). Section VI presents sample numerical results on SPECT and PET data. These numerical results are then compared with the results obtained with state-of-the-art procedures currently used in existing medical devices, namely RFBP and OS-EM.

Wavelets have been previously introduced in tomography by a large number of researchers. The most popular application of wavelets in tomography is local reconstruction [8]–[15]. Delaney and Bresler [16] as well as Blanc-Féraud et al. [17] used wavelet transforms to obtain accelerated implementations of a standard FBP. Bhatia, Karl, and Willsky [18], [19] combine wavelets with a MAP model to derive sparse formulations of the problem. Other authors have used wavelet methods to implement a postfiltering of an image after it was reconstructed by a standard algorithm [20]. Sahiner and Yagle [21] use wavelet transforms to derive constraints on an iterative reconstruction algorithm.
Finally, the wavelet-vaguelette decomposition (WVD) [4], [22]–[24], which is related to the work presented here, will be discussed in this paper.

A. Notation

Uppercase letters are used to represent signals which are the results of statistical processes.

II. THRESHOLDING ESTIMATORS

The operator $\mathcal{R}^+$ is considered as an approximate discrete inverse Radon transform operator. Let $\tilde f = \mathcal{R}^+\mathcal{R}f$. The difference image $f - \tilde f$ is the radial interpolation error, and is in general very low compared with the estimation error due to the presence of noise. In this paper, our focus is not on interpolation techniques, but on regularization: the image $\tilde f$ is considered to be our reference (ideal) image. Spline-based interpolation techniques are currently the most popular for tomographic reconstruction [25], [26].

The estimation problem in (1) is also equivalent to the denoising problem

$$X = \tilde f + Z \qquad (5)$$

where $X = \mathcal{R}^+Y$ and $Z = \mathcal{R}^+W$. If the noise $Z$ were Gaussian white, Donoho and Johnstone have established [27] that a thresholding estimator in a properly selected vector family $\mathcal{B} = \{g_m\}_m$, typically a wavelet basis, would be optimal to recover spatially inhomogeneous data as found in tomographic medical images. A thresholding estimator of $\tilde f$ in $\mathcal{B}$ is defined as

$$\tilde F = \sum_m \rho_m(\langle X, g_m\rangle)\,g_m \qquad (6)$$

where $\rho_m$ is a thresholding operator. Typical simple thresholding rules include hard thresholding

$$\rho_m(x) = \begin{cases} x & \text{if } |x| > T_m \\ 0 & \text{if } |x| \le T_m \end{cases} \qquad (7)$$

and soft thresholding

$$\rho_m(x) = \begin{cases} x - T_m & \text{if } x \ge T_m \\ x + T_m & \text{if } x \le -T_m \\ 0 & \text{if } |x| < T_m. \end{cases} \qquad (8)$$

The threshold $T_m$ is chosen to be proportional to the standard deviation $\sigma_m$ of the transform coefficient $\langle Z, g_m\rangle$ of the back-projected noise, which is a random variable.
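A minimal NumPy sketch of the hard (7) and soft (8) thresholding rules and of the estimator (6) follows. It assumes, for simplicity, that $\mathcal{B}$ is an orthonormal basis of $\mathbb{R}^N$ stored as the rows of a matrix and that $T_m = \lambda\sigma_m$; the function names and the default $\lambda = 2$ are hypothetical, not taken from this paper.

```python
import numpy as np

def hard_threshold(x, T):
    """Hard thresholding (7): keep x where |x| > T, set it to 0 elsewhere."""
    x = np.asarray(x, dtype=float)
    return np.where(np.abs(x) > T, x, 0.0)

def soft_threshold(x, T):
    """Soft thresholding (8): shrink |x| by T, and set it to 0 where |x| <= T."""
    x = np.asarray(x, dtype=float)
    return np.sign(x) * np.maximum(np.abs(x) - T, 0.0)

def threshold_estimate(X, basis, sigma, lam=2.0, rule=soft_threshold):
    """Thresholding estimator (6) in an orthonormal basis.

    X:     back-projected observation, 1-D array of length N
    basis: (N, N) array whose rows are the orthonormal vectors g_m
    sigma: noise standard deviation(s) sigma_m, scalar or length-N array
    lam:   threshold factor, T_m = lam * sigma_m (hypothetical default)
    """
    coeffs = basis @ X                              # <X, g_m> for every m
    kept = rule(coeffs, lam * np.asarray(sigma, dtype=float))
    return basis.T @ kept                           # sum_m rho_m(<X, g_m>) g_m

# Usage with the identity basis: T_m = 2 * 0.5 = 1 for every coefficient.
X = np.array([3.0, -0.5, -2.5])
estimate = threshold_estimate(X, np.eye(3), sigma=0.5)   # values 2.0, 0.0, -1.5
```

Because the basis is orthonormal, analysis is `basis @ X` and synthesis is `basis.T @ kept`; a wavelet or WP transform replaces these dense products with fast filter banks but plays the same role.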

A. Wavelet-Vaguelette Decomposition

In our situation, the choice of the decomposition $\mathcal{B}$ does not only depend on the prior information on the object $f$, but also on the back-projected noise $Z$, whose behavior is very specific due to the fact that it has been distorted by back-projection and deconvolution processes. The assumption underlying thresholding estimators is that each coefficient in the decomposition $\mathcal{B}$ can be estimated independently without a loss of performance. As a consequence, such estimators are efficient if the coefficients of the noise and of the object to be recovered are indeed nearly independent in $\mathcal{B}$. This means that $\mathcal{B}$ must provide a near-diagonalization of the noise $Z$ and of the prior information in the image $\tilde f$.

The image $\tilde f$ is a spatially inhomogeneous, piecewise regular signal, which is compactly represented in a wavelet decomposition. When the noise $W$ is Gaussian white, the noise $Z$ remains Gaussian because $\mathcal{R}^+$ is linear. To obtain a diagonal representation of the noise $Z$, one must find a decomposition in which the covariance of $Z$, which is proportional to $\mathcal{R}^+\mathcal{R}^{+*}$ when $W$ is white, is nearly diagonal. Since the inverse Radon transform is a Calderón–Zygmund operator [28], it is also nearly diagonal in a wavelet basis.

These two properties of wavelet bases led Donoho [4] to suggest the use of thresholding estimators in wavelet bases for several linear inverse problems, including the inversion of the Radon transform. Such an estimator is given by (6), where the basis $\mathcal{B}$ is an orthogonal or a bi-orthogonal wavelet basis.
Donoho established the minimax optimality of this approach, called a WVD, and showed its superiority with respect to other approaches such as FBP for the recovery of piecewise regular signals.

However, the WVD as studied by Donoho was developed for a continuous model of the back-projection operator, and assumes that the additive noise $W$ is always Gaussian white. Moreover, the asymptotic optimality results establish the performance of a WVD estimator for high-resolution data, which is not the case for PET and SPECT medical images. This means that, unfortunately, despite numerical implementations and refinements by other researchers [22]–[24], the theoretical interest of the WVD is not matched by a significant gain of performance when compared with other techniques such as RFBP, when applied to real clinical PET and SPECT data. The purpose of this paper is to build estimators which share the same theoretical properties as the WVD, but also provide an important additional flexibility and adaptivity which are essential to improve the numerical performance and image quality of the resulting algorithms.

The minimax optimality properties of the wavelet-vaguelette decomposition can only be established when the additive noise $W$ is a Gaussian white noise. When $W$ is a Poisson noise, the coefficients of its decomposition in a wavelet transform are not independent, and the minimax optimality properties cannot be verified. In practice, however, the strategy of finding a decomposition in which $\mathcal{R}^+$ is nearly diagonal remains valid, and guarantees that the numerical values of the transform coefficients of the filtered back-projected noise $Z$ will be nearly independent, even if $W$ is a Poisson noise. Section III-C explains how the algorithm is adapted depending on the Gaussian or Poisson nature of $W$.

Fig. 1. This figure illustrates the 2-D discrete Fourier domain for positive frequencies. (a) Segmentation induced by a wavelet transform. The grey areas correspond to the wavelet coefficients which are always put to zero by the thresholding operator because these coefficients have been contaminated by the numerical explosion of the back-projected noise at high frequencies. (b) Segmentation induced by a particular WP transform. The highest frequencies, in which the information is completely dominated by the back-projected noise, can be isolated more accurately, and some information at intermediate frequencies is recovered by the thresholding operator.

B. Wavelet Packets

A major problem of the WVD comes from the relatively poor resolution in frequency of the wavelet transform. Fig. 1(a) illustrates the partitioning of the 2-D discrete Fourier domain induced by an orthogonal wavelet basis. At the finest scale of the wavelet transform, which corresponds to frequencies higher than $\pi/2$ in the horizontal and vertical directions, all the wavelet coefficients are contaminated by the numerical explosion of the back-projected noise $Z$. These coefficients are put to zero by the thresholding operator, because the threshold values $T_m$ depend on the standard deviation $\sigma_m$ of the back-projected noise, which is very large at the highest frequencies. Alternatively, if the threshold $T_m$ is chosen at a lower value, the noise remaining in the reconstructed image is too strong.

Kalifa and Mallat [29] have generalized Donoho's approach to adapt it to other types of decompositions, including WP bases. WP bases are decompositions which can provide a compact representation of an observed image $\tilde f$, as well as a more accurate segmentation of the frequency domain than a wavelet basis, to improve the near-diagonalization of the noise $Z$. It is shown in [29] that the thresholding estimation risk $r = E\{\|\tilde f - \tilde F\|^2\}$ is of the same order, up to a logarithmic factor, as the decision risk

$$r_d(\tilde f, \mathcal{B}) = \sum_m \min\left(\sigma_m^2,\, |\langle \tilde f, g_m\rangle|^2\right) \qquad (9)$$

To minimize (9), we need to concentrate the energy of $\tilde f$ over few vectors $g_m$ which produce coefficients $|\langle \tilde f, g_m\rangle|$ larger than $\sigma_m$, and, among the remaining vectors, concentrate the energy over few large coefficients that are above the noise level.

Fig. 1(b) gives an example: a more accurate segmentation of the Fourier domain as compared with a wavelet transform enables isolation of the highest frequencies, in which each coefficient $|\langle \tilde f, g_m\rangle|$ of the information is below the standard deviation $\sigma_m$ of the coefficients of the back-projected noise. Because this WP decomposition, as opposed to a Fourier transform, provides a compact representation of information, thresholding allows the recovery of most of the information in the rest of the Fourier domain.

The choice of the best time-frequency decomposition in which the thresholding estimation is computed is a matter of compromise between the representation of the back-projected noise $Z$ and the representation of the data $\tilde f$ to be recovered. There is no single time-frequency decomposition (such as a Fourier basis, a wavelet basis, or a specific WP basis) which fits all applications of SPECT and PET imaging. However, a WP basis can be adaptively chosen from a dictionary of different WP bases. This enables us to optimize the choice of the WP transform for a specific type of observed image and for the specific nature of the back-projected noise $Z$. This additional adaptivity brings a significant improvement of numerical performance with respect to a wavelet-vaguelette estimator.

III. CHOICE OF WAVELET PACKET DECOMPOSITION

A WP dictionary is a rapidly constructible set of distinct and numerous orthogonal bases $\{\mathcal{B}^\gamma\}_\gamma$. It is possible, within this dictionary, to search for a "best" basis $\mathcal{B}^\alpha$ for a specific problem, according to a criterion chosen in advance. This criterion is usually a cost function which is minimal in the best basis.
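The bottom-up search over a WP tree with an additive cost can be sketched as follows. This is a simplified 1-D illustration, not the 2-D implementation of [30] or of this paper: it assumes an orthonormal Haar filter bank, a fixed maximum depth, and a cost supplied as a Python function. The helper `sure_cost` follows the SURE form of Section III-B under the extra assumption that every coefficient has the same noise standard deviation $\sigma$ (true for white noise in an orthonormal basis, but not for the back-projected noise $Z$), and the $\ell^1$ cost in the usage lines is only a simple stand-in for demonstration. All names are hypothetical.

```python
import numpy as np

def haar_split(x):
    """One orthonormal Haar filter-bank step: returns (lowpass, highpass) halves."""
    even, odd = x[0::2], x[1::2]
    return (even + odd) / np.sqrt(2.0), (even - odd) / np.sqrt(2.0)

def best_basis(x, cost, max_depth):
    """Bottom-up best-basis search in a 1-D Haar wavelet-packet tree.

    x must have a length divisible by 2**max_depth. Nodes are labeled
    (depth, index), with children 2*index and 2*index + 1 (tree order,
    not frequency order). Returns (minimal cost, list of selected nodes).
    """
    def search(coeffs, depth, index):
        own = cost(coeffs)
        if depth == max_depth:
            return own, [(depth, index)]
        low, high = haar_split(coeffs)
        c_low, n_low = search(low, depth + 1, 2 * index)
        c_high, n_high = search(high, depth + 1, 2 * index + 1)
        if c_low + c_high < own:       # additive cost: children beat parent
            return c_low + c_high, n_low + n_high
        return own, [(depth, index)]   # otherwise keep the parent node
    return search(np.asarray(x, dtype=float), 0, 0)

def sure_cost(coeffs, sigma, T):
    """SURE-type additive cost for soft thresholding at T, equal noise std sigma."""
    a = np.abs(coeffs)
    return float(np.sum(np.where(a <= T, a**2 - sigma**2, sigma**2 + T**2)))

# Usage: an oscillating signal is compacted by splitting its high-pass branch.
x = np.array([1.0, -1.0] * 4)
l1 = lambda c: float(np.sum(np.abs(c)))
cost, nodes = best_basis(x, l1, max_depth=3)
# cost ~ 2*sqrt(2); nodes == [(1, 0), (3, 4), (3, 5), (2, 3)]
```

Because the cost is additive, each node only compares its own cost with the sum of its children's best costs, so the whole tree is searched in a single bottom-up pass.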
This best basis is computed using the fast best basis algorithm of Coifman and Wickerhauser [30], with $O(N\log N)$ operations for an image of $N$ samples.

Regularization in tomography is an estimation problem, and the best basis $\mathcal{B}^\alpha$ for estimating $\tilde f$ is obtained empirically by minimizing an estimation of the final estimation error (risk) $E\{\|\tilde f - \tilde F\|^2\}$.

A. Use of Phantom Images

Phantom images are synthetic images modeling observed organs or anatomical structures, without any noise or artifacts. A phantom image $p$ provides a reasonable representation of how the image of the observed object should appear. When phantom images of the observed organ are available, they can be used for the computation of the best basis, assuming that the phantom image is a mathematical model of the image to be recovered.

If the thresholding operator is a hard thresholding, (6) becomes

$$\tilde F = \sum_m a_m\,\langle X, g_m\rangle\,g_m \qquad (10)$$

where $a_m$ is either zero or one. When $a_m = 1$, the quadratic estimation error on the corresponding coordinate is equal to the variance $\sigma_m^2$ of the coordinate $\langle Z, g_m\rangle$ of the back-projected noise $Z$. When $a_m = 0$, the quadratic estimation error is the energy $|\langle \tilde f, g_m\rangle|^2$ of the coordinate of $\tilde f$. The optimal choice of the values of $a_m$ depends on the signal $\tilde f$, which is unknown in practice; however, the phantom image $p$ can be used as a model for $\tilde f$, in which case the cost function for a given WP basis $\mathcal{B}$ is

$$C(\mathcal{B}) = \sum_m \min\left(\sigma_m^2,\, |\langle p, g_m\rangle|^2\right)$$

which can be computed in practice with a numerical model of the noise $Z$ (see below). The best basis algorithm is used to find the WP basis $\mathcal{B}^\alpha$ such that $C(\mathcal{B}^\alpha)$ is minimal.

B. Use of the Stein Unbiased Risk Estimator (SURE)

The Stein Unbiased Risk Estimator [31] is an estimator of the risk when $\rho_m$ is a soft thresholding operator. For a WP basis $\mathcal{B}$, it is given by

$$\tilde r(\mathcal{B}) = \sum_m \Phi(\langle X, g_m\rangle) \qquad (11)$$

with

$$\Phi(x) = \begin{cases} x^2 - \sigma_m^2 & \text{if } |x| \le T_m \\ \sigma_m^2 + T_m^2 & \text{if } |x| > T_m \end{cases} \qquad (12)$$

where $\sigma_m$ is the standard deviation of the random variable $\langle Z, g_m\rangle$. The empirical best basis $\mathcal{B}^\alpha$ for estimating $\tilde f$ is obtained by minimizing the estimated risk:

$$\tilde r(\mathcal{B}^\alpha) = \min_\gamma \tilde r(\mathcal{B}^\gamma). \qquad (13)$$

The estimated risk is calculated in (11) as an additive cost function over the noisy coefficients.

The quadratic estimation error can also be replaced with other measures of error at the same computational cost. For example, the $L^1$ error $\|\tilde f - \tilde F\|_1$ is sometimes considered in the image processing community as a better measure to assess the perceptual quality of reconstructed images. For the method presented in Section III-A, we have experimented with both the $L^1$ and $L^2$ estimation errors, with similar results. We will use the $L^2$ estimation error in this presentation, since its theoretical properties are easier to manipulate and because the quadratic estimation error is used to compute the PSNRs of reconstructed images.

Two alternatives are proposed to compute the choice of the WP decomposition. In both cases, the resulting best basis is designed to discriminate the noise $Z$ and the information in the signal $\tilde f$. Hence, a thresholding can remove most of the noise without removing information. The SURE-based approach to compute the best basis is in general the most efficient to implement, because it can be difficult to obtain phantoms whose properties, such as dynamics as well as spatial and spectral behaviors, are close to the images to be reconstructed.

C. Model of the Noise

The cost functions used to compute the best basis algorithm depend on the back-projected noise $Z$. To generate a model of
the noise $Z$, it is necessary to first generate a model of the additive noise $W$ observed in the sinograms. The model of the noise $Z$ is obtained by back-projecting the model of the noise $W$. The goal here is not to find an accurate estimation of the realization of the noise $W$ on the available data, but to evaluate its amplitude as well as its spatial and spectral behavior. Depending on the type of images, the noise $W$ is assumed to be either Gaussian white noise or Poisson noise.

• When $W$ is assumed to be a white Gaussian noise, the problem is to estimate its standard deviation $\sigma$. Donoho and Johnstone [27] showed that an accurate estimator can be calculated from the median of the finest scale wavelet coefficients. Once the standard deviation has been estimated, a numerical model of $W$ is computed using a white noise random generator.
• When $W$ is assumed to be a Poisson noise, the sinograms $Y$ are roughly denoised using the Poisson intensity estimation method by Fryzlewicz and Nason [32]. The resulting denoised sinograms $\tilde Y$ cannot be back-projected to produce tomographic images of good quality. However, the difference $Y - \tilde Y$ between the original and the denoised sinograms is a good estimation of the Poisson noise $W$.

IV. RECONSTRUCTION ALGORITHM

The tomographic reconstruction algorithm is carried out by the following steps.

1) FBP without regularization of the tomographic projections $Y$ to obtain the back-projected image $X = \mathcal{R}^+Y$.
2) (Optional) Computation of the best WP basis $\mathcal{B}^\alpha$ optimized for a specific image to be restored, using one of the two methods presented in Section III. The best basis can be recomputed for each image, or can be computed once and stored in advance, to save computation time. However, the computation of the best basis algorithm is very fast.
3) WP transform of the back-projected image $X$ in the best basis $\mathcal{B}^\alpha$ to obtain the WP coefficients $\langle X, g_m\rangle$.
4) Thresholding of the WP coefficients.
5) Inverse WP transformation of the thresholded coefficients to obtain the estimate image $\tilde F$.

The thresholding operator is preferably a soft thresholding, with a threshold value $T_m = \lambda\sigma_m$ proportional to the standard deviation $\sigma_m$ of the noise coefficients. The factor $\lambda$ is typically chosen between 1.5 and 3. It can either be a constant or depend on the WP vector $g_m$: in this case, it is smaller when the support of the Fourier transform of $g_m$ is concentrated in lower frequencies. This ensures that the remaining noise on the reconstructed image has a nearly flat power spectrum: the coefficients of the noise which correspond to higher frequencies are more attenuated, and the remaining noise practically behaves like a white Gaussian noise. This is useful when combined with a supplemental thresholding in another decomposition, as explained in Section V. Finally, note that the soft thresholding, which attenuates the intensity of the remaining noise over the whole image, guarantees that the reconstructed data will be sufficiently regular and free of strong artifacts.

The WP transform and its inverse are computed with fast filter bank algorithms of complexity $O(N\log N)$ for signals of $N$ samples [33]. Numerical results are improved if the WP transform and its inverse are undecimated, i.e., translation-invariant, in which case the filter bank algorithm is equivalent to the "à trous" algorithm [33].

V. EXTENSION TO 3-D RECONSTRUCTION

So far, the WP reconstruction has been presented for 2-D reconstruction of slices. We now consider (3), where we have 3-D data in the form of a series of tomographic projections of translated 2-D slices of an observed object. It is useful to take advantage of the correlations of the signal in the transaxial direction ($z$ axis) to obtain a better discrimination between information and noise.
In this case, a regularization is computed on the whole 3-D data, but the back-projections are still computed slice by slice. The FBP operator $\mathcal{R}^+$ is still a 2-D operator; assuming that the power spectrum of the additive noise $W$ is constant in every direction, the power spectrum of the filtered back-projected noise $Z$ will remain constant in the transaxial direction and will not depend on the position on the $z$ axis, unlike along the $x$ and $y$ axes. As a consequence, there is no need to use a decomposition with a good resolution in the Fourier domain along the $z$ axis. The best decomposition must only provide a compact representation of spatially inhomogeneous data, which means that a wavelet decomposition is the most appropriate. The best results are obtained with a combination of a slice-by-slice 2-D regularization in a WP decomposition, using the algorithm of Section IV, and a supplemental fully 3-D regularization on the whole 3-D volume, using a second thresholding estimator in a 3-D dyadic wavelet decomposition: for each slice $z$, an estimation $\tilde F_z$ is first computed with a thresholding of the WP coefficients. The error $\tilde f_z - \tilde F_z$ can be considered as a residual noise. This noise has a power spectrum which is nearly flat at high frequencies, and it is nearly diagonalized in a wavelet decomposition.

To take full advantage of the 3-D information in the data, we want to apply a 3-D dyadic wavelet transform on the volume, where the wavelets can be adaptively oriented perpendicular to the singularities of the signal. This directional selectivity enables us to maximize the correlation between the vectors of the wavelet family and the information of the signal. The efficiency of noise removal is thus greatly improved. It should be mentioned that other types of transforms are currently being designed to provide geometrical adaptivity for denoising and inverse problems; see in particular [34] and [35].

A 3-D dyadic wavelet transform is computed with a family of wavelets which are the discretized translations and dilations of
three wavelets $\psi^1$, $\psi^2$, and $\psi^3$ that are the partial derivatives of a smoothing function $\theta$:

$$\psi^1 = \frac{\partial\theta}{\partial x_1}, \quad \psi^2 = \frac{\partial\theta}{\partial x_2}, \quad \psi^3 = \frac{\partial\theta}{\partial x_3}.$$

For a given $j$, $\psi^1_{2^j}$, $\psi^2_{2^j}$, and $\psi^3_{2^j}$ have been equally dilated and are translated in the same position, but they have respectively a horizontal, vertical, and transaxial direction; let us denote

$$\psi^k_{2^j}(x) = \frac{1}{2^{3j/2}}\,\psi^k\!\left(\frac{x}{2^j}\right) \quad \text{for } k = 1, 2, 3 \text{ and } x \in \mathbb{R}^3. \qquad (14)$$

For a volume image $f$, the wavelet transform of $f$ at a scale $2^j$ has three components which can be written as frame inner products

$$\mathcal{W}^k f(u, 2^j) = \langle f(x),\, \psi^k_{2^j}(x - u)\rangle, \quad k = 1, 2, 3. \qquad (15)$$

Because $\psi^1$, $\psi^2$, and $\psi^3$ are partial derivatives of $\theta$, these three components are proportional to the coordinates of the gradient vector of $f$ smoothed by a dilated version of $\theta$. From these coordinates, one can compute the angle of the gradient vector, which indicates the direction in which the partial derivative of the smoothed $f$ has the largest amplitude. The amplitude of this maximum partial derivative is equal to the modulus of the gradient vector and is, therefore, proportional to the wavelet modulus

$$Mf(u, 2^j) = \sqrt{\sum_{k=1}^{3} |\mathcal{W}^k f(u, 2^j)|^2}. \qquad (16)$$

We do not threshold each wavelet transform component independently. Instead, we threshold the modulus $Mf(u, 2^j)$. This is equivalent to selecting first a direction in which the partial derivative is maximum at each scale $2^j$, and thresholding the amplitude of the partial derivative in this direction. This can be viewed as an adaptive choice of the wavelet direction in order to best correlate the signal. The coefficients of the dyadic wavelet transform are then computed back from the thresholded modulus and the angle of the gradient vector. The dyadic wavelet transform is implemented with a fast filter bank "à trous" algorithm [33].

The 3-D tomographic reconstruction algorithm is decomposed into the following steps.

1) For all $z$, computation of the regularized 2-D image $\tilde F_z$ using the algorithm described in Section IV.
2) Three-dimensional dyadic wavelet decomposition of the volume $\{\tilde F_z\}_z$ to obtain 3-D dyadic wavelet coefficients.
3) Computation of the modulus coefficients of the 3-D dyadic wavelet coefficients, following (16).
4) Thresholding of the modulus coefficients.
5) Computation of the denoised 3-D dyadic wavelet coefficients from the thresholded modulus coefficients.
6) Inverse 3-D dyadic wavelet transform from the denoised 3-D dyadic wavelet coefficients to obtain the regularized volume.

The thresholding operator on the modulus coefficients is a hard thresholding, since a supplemental soft thresholding would add an unnecessary smoothing on the reconstructed data.

VI. NUMERICAL RESULTS

Numerical results are provided for synthetic phantom images to demonstrate the metrical performance, in terms of peak signal-to-noise ratio (PSNR), of the WP-based reconstruction with respect to RFBP and WVD. Numerical results on real clinical SPECT and PET data are also provided to demonstrate the perceptual performance of the WP reconstruction. Examples include bone, brain, and Jaszczak phantom images, which have very different dynamics and properties.

Fig. 2 compares an RFBP reconstruction, a WVD reconstruction, and a WP reconstruction of a synthetic 256 × 256 Shepp–Logan phantom, starting from 192 projections, as in a standard PET device. Since an ideal reference image is available, this example enables us to compare the metrical performance directly. The filter used for RFBP has been optimized to obtain the best possible PSNR. Experiments have been conducted with different shapes of filters, including cosine, Hamming, Hann, and Shepp–Logan filters. The best filter in this example is a Hann filter. The WVD algorithm includes a translation-invariant decomposition [23], and its thresholding strategy has been carefully optimized to provide the highest possible PSNR, using scale-dependent thresholds. In all the experiments, the same filter bank has been used for the WVD and for the WP algorithm, namely symmlets with four vanishing moments, and the WP tree has been selected using the SURE-based procedure of Section III-B. As mentioned in Section IV, for both WP and WVD, the threshold values $T_m = \lambda\sigma_m$ are proportional to the estimated standard deviation $\sigma_m$ of the noise coefficients, where $\lambda$ is scale-dependent for WVD and tree node-depen

Regularization in Tomographic Reconstruction Using Thresholding Estimators Jérôme Kalifa*, Andrew Laine, and Peter D. Esser Abstract— In tomographicmedical devicessuch as single photon emission computed tomography or positron emission tomography cameras, image reconstruction is an unstable inverse problem, due to the presence of additive noise.

Tomographic reconstruction of shock layer flows . tomographic reconstruction of supersonic flows faces two challenges. Firstly, techniques used in the past, such as the direct Fourier method (DFM) (Gottlieb and Gustafsson in On the direct Fourier method for computer . are only capable of using image data which .

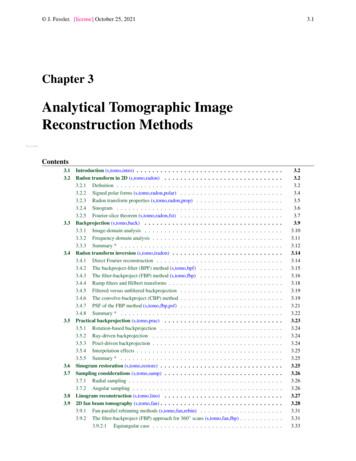

statistical reconstruction methods. This chapter1 reviews classical analytical tomographic reconstruction methods. (Other names are Fourier reconstruction methods and direct reconstruction methods, because these methods are noniterative.) Entire books have been devoted to this subject [2-6], whereas this chapter highlights only a few results.

Tomographic Reconstruction 3D Image Processing Torsten Möller . History Reconstruction — basic idea Radon transform Fourier-Slice theorem (Parallel-beam) filtered backprojection Fan-beam filtered backprojection . reconstruction more direct: 39

MULTIGRID METHODS FOR TOMOGRAPHIC RECONSTRUCTION T.J. Monks 1. INTRODUCTION In many inversion problems, the random nature of the observed data has a very significant impact on the accuracy of the reconstruction. In these situations, reconstruction teclmiques that are based on the known statistical properties of the data, are particularly useful.

ble, tomographic reconstructionsof 3D fields canbe realizedwithout TagedPspatial sweeping of the illumination fields and thus without associ-ated loss of time. Examples of volumetric tomography techniques in combusting flows include tomographic PIV for volumetric velocime-try [78,79], tomographic X-ray imaging for fuel mass distributions

mismatch and delivering reconstructed tomographic datasets just few seconds after the data have been acquired, enabling fast parameter and image quality evaluation as well as efficient post-processing of TBs of tomographic data. With this new tool, also able to accept a stream of data directly from a detector, few selected tomographic slices are

along the path. A conventional x-ray image is a two-dimensional image perpendicular to the direction of the travelling rays. The tomographic image is an image reproduced in the plane where the rays travel through. In 1972, Hounsfield invented the x-ray computed tomographic scanner [1, 2] and shared the Nobel Prize with Cormack [3] in 1979.

enFakultätaufAntragvon Prof. Dr. ChristophBruder Prof. Dr. DieterJaksch Basel,den16. Oktober2012, Prof. Dr .