Approximate Surface Reconstruction and Registration for RGB-D SLAM - Uni-bonn.de

European Conference on Mobile Robotics (ECMR), Lincoln, UK, September 2015

Approximate Surface Reconstruction and Registration for RGB-D SLAM

Dirk Holz and Sven Behnke

All authors are with the Autonomous Intelligent Systems Group, Computer Science Institute VI, University of Bonn, 53113 Bonn, Germany (holz@ais.uni-bonn.de, behnke@cs.uni-bonn.de).

Abstract: RGB-D cameras have attracted much attention in the fields of robotics and computer vision, especially for object modeling and environment mapping. A key problem in all these applications is the registration of sequences of RGB-D images. In this paper, we present an efficient yet reliable approach to align pairs and sequences of RGB-D images that makes use of local surface information. We extend previous work on 3D mapping with micro aerial vehicles to sequences of RGB-D images. The resulting alignment is based on a robust surface-to-surface error metric and uses multiple surface-to-surface patch matches between pairs of RGB-D images. Quantitative evaluations show that our approach is competitive with state-of-the-art approaches.

Fig. 1. Typical result of aligning a sequence of RGB-D images. Left: approximate surface reconstruction (unfiltered) of the first cloud. Right: the points of all aligned point clouds.

I. INTRODUCTION

Consumer color and depth cameras (RGB-D cameras) have huge potential for improving the perception capabilities of robots and automated vision systems in general. They acquire color (RGB) and depth (D) images, both at high frame rates, e.g., 30 Hz. Intrinsic and extrinsic calibration of the two image sources yields colored 3D point clouds (see Fig. 1). Due to their comparably low cost, low weight, and small form factor, RGB-D cameras have attracted much attention in the fields of robotics and computer vision, especially for object modeling and environment mapping. Most applications have in common that they require RGB-D images to be taken from multiple viewpoints and that the acquired images be reliably registered such that overlapping regions in the images match as well as possible. In the literature, this problem is usually referred to as Simultaneous Localization and Mapping (SLAM): building a map of the environment and localizing the information-acquiring sensor(s) therein so as to consistently update and extend the map.

In recent years, many different approaches to visual odometry [1]–[3], SLAM [4]–[9], and dense mapping [10]–[13] have been proposed, some of which are specifically tailored to RGB-D cameras. These methods are either based on features matched and tracked over sequences of images, or they operate directly on the (semi-)dense color and depth images. Most approaches select a set of keyframes and optimize the resulting pose graph in order to obtain a globally consistent trajectory and map. State-of-the-art methods achieve globally consistent trajectories with low pose estimation errors at high frame rates. We include some of them in a comparative evaluation.

In this paper, we state and address the problem of RGB-D SLAM in terms of multi-view 3D registration based on point correspondences between frames that encode a surface-to-surface error metric. The approach is based on our previous work [14] on 3D laser scan registration and mapping with micro aerial vehicles. To compensate for the non-uniform point densities within and between individual scan lines of the fast rotating scanner, we approximated the underlying surface and used a generalized error metric [15] to obtain robust registrations and accurate 3D maps of the sensed environmental structures, such as buildings.
In this paper, we extend the approach to sequences of RGB-D images and make the following contributions:
1) To reduce drift during the initial tracking of the camera, we register a newly acquired image against a local window of frames instead of only the last (key) frame.
2) We integrate our previous work on range image segmentation [16] for efficiently computing local features, such as surface normals, on an approximate mesh representation, and for edge-aware filtering of the underlying points and the computed features to compensate for noise, especially in the depth images.
3) To cope with the larger amount of data in RGB-D images in our multi-edge alignment approach, we efficiently sample both the points in the images and the found correspondences.

As a result, our approach of using multiple edges between views that encode surface-to-surface constraints can be applied to RGB-D video. Moreover, its performance is competitive with other state-of-the-art approaches. In fact, the proposed local window multi-edge alignment has great potential to contribute to other SLAM and object modeling pipelines. We present results of a thorough comparative experimental evaluation that support these claims.

Final version submitted to ECMR

II. RELATED WORK

Approaches to SLAM using monocular cameras, RGB-D cameras, and stereo cameras can in general be split into two categories: feature-based methods that compute and track distinct repeatable keypoints and associate them using feature descriptors, and direct methods that densely register the acquired data.

For monocular cameras, a hybrid approach is the semi-dense visual odometry method proposed by Engel et al. [3]. It first computes inverse depth maps, which are then used to align subsequent frames. A similar approach is followed by Forster et al. [7]. Engel et al. [9] extend their approach to build globally consistent maps even of large-scale environments.

Scherer and Zell [4] present an RGB-D SLAM approach that is efficient enough to be computed onboard an autonomous micro aerial vehicle. It is based on tracking FAST keypoints and on the fast hierarchical graph optimization of Grisetti et al. [17]. The FAST corner detector is also used by Huang et al. [2] in their visual odometry method FOVIS.

A popular approach developed specifically for RGB-D images is RGB-D SLAM [8], [18]. It uses the color image to extract and match visual keypoints and descriptors (SURF, SIFT, and ORB). The alignment relies on the 3D coordinates of the keypoints obtained from the depth image. A similar approach is followed by Henry et al. [5] with FAST keypoints.

One of the first successful demonstrations of dense registration and mapping was presented by Newcombe et al. and coined KinectFusion [10]. KinectFusion uses signed distance functions in a grid-based environment representation and ICP-based registration [19] to align newly acquired depth images. All components are implemented on a GPU and allow (near) real-time operation. Assuming that camera movements between frames are small, this incremental registration can reliably align the data and update the environment model. Because it registers new depth images against the environment model rather than against a single frame only, KinectFusion considerably reduces the drift that usually arises in pairwise registration.
In many cases, incremental registration can achieve globally consistent environment maps without the need for loop closure detection and global optimization, e.g., as shown in previous work on 2D laser-based mapping [20]. The drawback of incremental registration is that errors made while updating the environment representation cannot be corrected later. In this paper, we address the alignment of captured frames in terms of multi-view registration and do not build a particular environment representation.

Steinbruecker et al. [13] also use signed distance functions for dense mapping but organize the map in an octree structure. Stückler and Behnke [11] proposed a surfel-based registration method for constructing multi-resolution surfel maps (MRSMAPs) that are also represented in an octree. Kerl et al. [6] follow a different visual SLAM approach (DVO-SLAM) by minimizing the photometric and the depth error over all pixels. We include RGB-D SLAM [18], MRSMAP [11], DVO-SLAM [6], and an open-source implementation of KinectFusion [10] in a comparative experimental evaluation.

Recently, Maier et al. [21] presented an efficient approach to RGB-D object modeling. They split the camera trajectory into chunks of equal size and first optimize the alignments within the chunks before globally aligning the chunks to each other. Since the camera is moved around the object to be modeled, these splits along the trajectory yield spatially coherent partitions. We achieve a similar behavior by using local windows in the initial alignment of newly acquired frames. Moreover, the local windows include earlier frames in case of loop closures. The local alignment can then compensate for the accumulated drift or trigger a global optimization of the trajectory if conflicts are found. Our local alignment approach is inspired by the double window approach in the SLAM framework of Strasdat et al. [22].

In multi-view scan matching, poses are determined simultaneously by aligning all scans.
In the 2D domain, a popular approach is the one by Lu and Milios (LUM) [23]. Borrmann et al. [24] extend this approach to six degrees of freedom for the alignment of 3D scans and present methods to efficiently deal with the resulting nonlinearities [24]. The resulting approach first applies the ICP algorithm to align consecutive point clouds and then builds a graph based on the determined connectivity of view poses, similar to our approach. Both the determined transformations and the sets of point correspondences are represented in the edges. From both, a measurement vector and its covariance matrix are computed, which are then fed as one block into a large linear system for optimization. In contrast, in our approach, every correspondence pair forms a block in the final non-linear error function. Furthermore, LUM uses a point-to-point error metric as in the original ICP algorithm. Instead, we approximate the surface and use a probabilistic surface-based error metric.

Similar to our multi-edge alignment step are the approaches of Zlot and Bosse [25] and Ruhnke et al. [26]. For mapping mines with a continuously spinning laser scanner, Zlot and Bosse use non-rigid surfel registration and graph optimization to aggregate point clouds and build consistent maps. Ruhnke et al. also use raw point matches as constraints in the graph and apply a surfel-based error metric to iteratively refine both the sensor poses and the positions of the points. Their approach can build highly accurate object models but requires a rough initial alignment of the dense RGB-D data. Moreover, since it optimizes the position of every point in the resulting object model, the approach is computationally expensive. In contrast, we aim at both initially aligning the acquired point clouds and building globally consistent environment models while reducing the complexity of the involved processing steps, e.g., by using only descriptive subsets of the dense RGB-D data and local windows.
The idea behind this paper is to apply our pipeline for 3D mapping with MAVs [14] to dense RGB-D data, making the adaptations needed to render it both applicable and feasible, and to evaluate how the resulting system compares to state-of-the-art RGB-D SLAM approaches.

III. METHOD

Our approach is split into three stages. In the first stage, we approximate the underlying surface of each frame in the form of a quad mesh. The mesh serves three purposes: it allows 1) computing features such as surface normals directly on the mesh, 2) extracting neighborhoods from the mesh topology, and 3) caching values such as computed distances and normal deviations in its edges. Referring to Fig. 2, the extracted mesh is then smoothed and used for feature extraction. In the second stage, both the mesh and the computed features are fed into the local alignment to keep track of the camera pose. If loop closures are detected, the trajectory estimated so far is globally optimized in the third stage.
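To make the mesh construction of Sec. III-A and its 1-ring neighborhoods concrete, the following NumPy sketch builds quads from an organized point cloud and derives neighborhoods from the resulting topology. It is a minimal sketch under stated assumptions, not the authors' implementation: the function names are hypothetical, only the four sides of each quad are tested, the occlusion and length test follows Eqs. (1)-(3) as stated in Sec. III-A, the length tolerance is a fixed value rather than the distance-dependent $\bar{d}(z)$ of Eq. (4), and the default tolerances (15 degrees, 5 cm) are illustrative.

```python
import numpy as np


def edge_valid(p_i, p_j, viewpoint, cos_tol, d_tol):
    """Occlusion and length test for one mesh edge, modeled on Eqs. (1)-(3).

    cos_tol plays the role of cos(theta_bar) and d_tol the role of d_bar.
    """
    ray = p_i - viewpoint                # line of sight towards p_i
    edge = p_i - p_j                     # candidate mesh edge
    d_sq = float(edge @ edge)            # squared edge length, Eq. (3)
    if d_sq == 0.0:
        return False                     # degenerate edge: treat as invalid
    cos_theta = float(ray @ edge) / (np.linalg.norm(ray) * np.sqrt(d_sq))  # Eq. (2)
    # Reject edges nearly parallel to the line of sight (occlusion) and long edges.
    return abs(cos_theta) <= cos_tol and d_sq <= d_tol ** 2


def build_quad_mesh(points, valid, cos_tol=np.cos(np.deg2rad(15.0)), d_tol=0.05):
    """Build quads from an organized cloud in a single pass.

    points: (H, W, 3) array; valid: (H, W) boolean mask of usable measurements.
    Returns a list of quads, each a list of four flat vertex indices u * W + v.
    """
    height, width = valid.shape
    viewpoint = np.zeros(3)              # camera origin, v = 0
    quads = []
    for u in range(height - 1):
        for v in range(width - 1):
            corners = [(u, v), (u, v + 1), (u + 1, v + 1), (u + 1, v)]
            if not all(valid[c] for c in corners):
                continue                 # missing or invalid measurement
            sides_ok = all(
                edge_valid(points[corners[k]], points[corners[(k + 1) % 4]],
                           viewpoint, cos_tol, d_tol)
                for k in range(4)
            )
            if sides_ok:
                quads.append([cu * width + cv for cu, cv in corners])
    return quads


def one_ring_neighborhoods(quads, num_vertices):
    """1-ring neighborhood of every vertex: all vertices sharing a quad side."""
    neighbors = [set() for _ in range(num_vertices)]
    for quad in quads:
        for k in range(4):
            i, j = quad[k], quad[(k + 1) % 4]
            neighbors[i].add(j)
            neighbors[j].add(i)
    return neighbors


if __name__ == "__main__":
    # Flat 3 x 3 grid at 1 m depth with 1 cm spacing.
    cols, rows = np.meshgrid(np.arange(3), np.arange(3))
    cloud = np.stack([0.01 * cols, 0.01 * rows, np.ones((3, 3))], axis=-1)
    quads = build_quad_mesh(cloud, np.ones((3, 3), dtype=bool))
    print(len(quads), sorted(one_ring_neighborhoods(quads, 9)[4]))  # prints: 4 [1, 3, 5, 7]
```

The plain loops keep the single-pass traversal readable; for full 640 × 480 images a vectorized version over whole rows would be the natural next step.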

[Fig. 2 diagram. Per-Frame Reconstruction and Feature Estimation: sensor raw data, preprocessing, point cloud $P_t$, approximate reconstruction, mesh $M_t$, multilateral filtering (smoothed mesh), sensor model with parameters $(\theta, d)$, feature estimation yielding points $(\mathbf{p}_{i,t}, \mathbf{n}_{i,t})$ and covariances $C_{i,t}$. Local Window Alignment: window estimation, subgraph, correspondence estimation, edges, local optimization, iterating until convergence (with decreasing thresholds). Global Alignment: correspondence estimation, edges, global optimization, iterating until convergence.]
Fig. 2. System overview and data flow. For a newly acquired point cloud, we first compute an approximate reconstruction of the underlying surface. Using the mesh topology, we compute approximate local surfaces and apply a multilateral filter to smooth both points and normals. We then compute local covariances and feed the point clouds as well as the computed features into the local alignment. Global optimization then yields globally consistent trajectories.

A. Approximate Surface Reconstruction

Surface reconstructions are compact representations of the underlying sensed environmental structures and are handy in a variety of pre-processing tasks such as computing point neighborhoods, local surface normals, or smoothed (and upsampled or downsampled) representations [16]. To compute an approximate surface reconstruction, we traverse an organized point cloud $P$ once and build a simple quad mesh by connecting every point $\mathbf{p} = P(u, v)$ (in the $u$-th row and the $v$-th column) to its neighbors $P(u, v+1)$, $P(u+1, v+1)$, and $P(u+1, v)$ in the next row and column. We only add a new quad to the mesh if $P(u, v)$ and its three neighbors are valid measurements and if none of the connecting edges between the points is occluded. The first check accounts for possibly missing or invalid measurements in the organized structure. For the latter occlusion check, we examine whether one of the connecting edges falls into a common line of sight with the viewpoint $\mathbf{v} = \mathbf{0}$.
If so, one of the underlying surfaces occludes the other and the edge is not valid:

$$\text{valid} \iff \left(\lvert\cos\theta_{i,j}\rvert \le \cos\bar{\theta}\right) \wedge \left(d_{i,j} \le \bar{d}^{\,2}\right), \tag{1}$$

with

$$\cos\theta_{i,j} = \frac{(\mathbf{p}_i - \mathbf{v}) \cdot (\mathbf{p}_i - \mathbf{p}_j)}{\lVert \mathbf{p}_i - \mathbf{v} \rVert \, \lVert \mathbf{p}_i - \mathbf{p}_j \rVert} \tag{2}$$

and

$$d_{i,j} = \lVert \mathbf{p}_i - \mathbf{p}_j \rVert^2, \tag{3}$$

where $\bar{\theta}$ and $\bar{d}$ denote the maximum angular and length tolerances, respectively. The latter accounts for sensor noise, i.e., tolerable depth discontinuities such as quantization effects in the depth images. We use a simple isotropic noise model for Microsoft Kinect cameras that we developed for range image segmentation [16]. It depends on the measured distance $z$:

$$\bar{d}(z) = n \cdot 2\,\sigma(z), \tag{4}$$

with

$$\sigma(z) = 0.00263\,z^2 - 0.00519\,z + 0.00755, \tag{5}$$

where $n$ is the subsampling factor applied, i.e., only every $n$-th row and column is used for constructing the quad mesh. If both the distance and the occlusion checks pass, we add a new quad; otherwise, holes arise. After construction, we simplify the resulting mesh by removing unused vertices.

B. Multilateral Filtering

Naturally, sensor measurements are affected by noise. Depth images in particular suffer from distance-dependent noise and quantization effects. To compensate for local noise in depth measurements, we apply a filter that smooths both the points and their normals while preserving edges in the sensed geometric structures. The formulation of our filter is motivated by the concept of multilateral filtering [27] and measures the similarity of points w.r.t. their position, surface orientation, and appearance. We filter both a point $\mathbf{p}_i$ and its normal $\mathbf{n}_i$ over its 1-ring neighborhood $N_i$, i.e., all points that are directly connected to $\mathbf{p}_i$ by an edge in the mesh:

$$\tilde{\mathbf{p}}_i = \frac{\sum_{j \in N_i} w_{ij}\,\mathbf{p}_j}{\sum_{j \in N_i} w_{ij}}, \qquad \tilde{\mathbf{n}}_i = \frac{\sum_{j \in N_i} w_{ij}\,\mathbf{n}_j}{\sum_{j \in N_i} w_{ij}}, \tag{6}$$

with

$$w_{ij} = \underbrace{e^{-\alpha \lVert \mathbf{p}_i - \mathbf{p}_j \rVert}}_{\text{distance term}} \; \underbrace{e^{-\beta \lVert \mathbf{n}_i - \mathbf{n}_j \rVert_1}}_{\text{normal term}} \; \underbrace{e^{-\gamma \lvert I_i - I_j \rvert / c_I}}_{\text{intensity term}}. \tag{7}$$

The normalization constant $c_I$ scales the intensity differences to lie in the interval [0, 1]. The weights $\alpha$, $\beta$, and $\gamma$ can be used to adjust the behavior of the filter. Weighting the distance, surface normal, and color deviation terms equally ($\alpha = \beta = \gamma = 1$) already achieves considerable smoothing while preserving edges and corners. Depending on the desired smoothing level, we extend the point neighborhood to include the neighbors of neighbors and ring neighborhoods farther away from the point.

C. Approximate Normal and Covariance Estimates

To estimate the local surface normal and covariance matrix of a point, we directly extract its local neighborhood from the mesh topology instead of searching for neighbors. We compute the normal $\mathbf{n}_i$ of a point $\mathbf{p}_i$ directly on the mesh as the weighted average of the plane normals of the $N_T$ faces surrounding $\mathbf{p}_i$ (extracted from the topology):

$$\mathbf{n}_i = \frac{\sum_{j=0}^{N_T} (\mathbf{p}_{j,a} - \mathbf{p}_{j,b}) \times (\mathbf{p}_{j,a} - \mathbf{p}_{j,c})}{\left\lVert \sum_{j=0}^{N_T} (\mathbf{p}_{j,a} - \mathbf{p}_{j,b}) \times (\mathbf{p}_{j,a} - \mathbf{p}_{j,c}) \right\rVert}, \tag{8}$$

with face vertices $\mathbf{p}_{j,a}$, $\mathbf{p}_{j,b}$, and $\mathbf{p}_{j,c}$. We then compute the local covariance matrix $\Sigma_i$ as in [15]:

$$\Sigma_i = \mathbf{R}_{\mathbf{n}_i} \begin{pmatrix} \epsilon & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix} \mathbf{R}_{\mathbf{n}_i}^{\top}, \tag{9}$$

with a rotation matrix $\mathbf{R}_{\mathbf{n}_i}$ chosen so that $\epsilon$ reflects the uncertainty along the approximated local surface normal $\mathbf{n}_i$. The intuition behind this is that we assume the point to lie on the approximated surface while not knowing where on the surface it lies. The lower the uncertainty $\epsilon$, the more local planarity we assume around measured points. Consequently, with a low value ($\epsilon = 10^{-3}$), the registration error to be minimized (introduced in the following) converges to a plane-to-plane error metric.

D. Surface-to-Surface Alignment

To align two organized point clouds $A$ and $B$ (and their approximated surfaces), we search for closest neighbors in $B$ for points $\mathbf{a}_i \in A$ and iteratively minimize the distances between the found matches. Instead of minimizing the point-to-point distances $\mathbf{d}_{ij}^{(T)} = \mathbf{b}_j - \mathbf{T}\mathbf{a}_i$ of the set of found correspondences $C$ to determine the transformation $\mathbf{T}$, as in the original Iterative Closest Point algorithm [19], we use the generalized error metric introduced by Segal et al. [15]. It generalizes over the different available error metrics (point-to-point, point-to-plane, plane-to-plane) and thus takes into account information about the underlying surface. Instead of minimizing the distances $\mathbf{d}_{ij}^{(T)}$ between corresponding points $\mathbf{a}_i$ and $\mathbf{b}_j$, it models their distribution

$$\mathbf{d}_{ij}^{(T)} \sim \mathcal{N}\left(\hat{\mathbf{b}}_j - \mathbf{T}\hat{\mathbf{a}}_i,\; \Sigma_j^B + \mathbf{R}\,\Sigma_i^A\,\mathbf{R}^{\top}\right), \tag{10}$$

where $\mathbf{R}$ is the rotation matrix of $\mathbf{T}$, under the assumption that the points in $A$ and in $B$ are themselves drawn from independent normal distributions, i.e., $\mathbf{a}_i \sim \mathcal{N}(\hat{\mathbf{a}}_i, \Sigma_i^A)$ and $\mathbf{b}_j \sim \mathcal{N}(\hat{\mathbf{b}}_j, \Sigma_j^B)$. Given the correspondences $ij \in C$, the optimal transformation $\mathbf{T}^\star$ best aligning $A$ to $B$ can then be found using maximum likelihood estimation (MLE):

$$\mathbf{T}^\star = \arg\max_{\mathbf{T}} \prod_{ij \in C} p\left(\mathbf{d}_{ij}^{(T)}\right) = \arg\max_{\mathbf{T}} \sum_{ij \in C} \log p\left(\mathbf{d}_{ij}^{(T)}\right) \approx \arg\min_{\mathbf{T}} \underbrace{\sum_{ij \in C} {\mathbf{d}_{ij}^{(T)}}^{\top} \left(\Sigma_j^B + \mathbf{R}\,\Sigma_i^A\,\mathbf{R}^{\top}\right)^{-1} \mathbf{d}_{ij}^{(T)}}_{\text{simplified likelihood } L(\mathbf{T})}. \tag{11}$$

The effect of minimizing (11) is that corresponding points are not directly dragged onto one another; instead, the underlying surfaces represented by the covariance matrices $\Sigma_i^A$ and $\Sigma_j^B$ are aligned.

In previous work [14], we used this approach for 3D SLAM with a lightweight, continuously rotating 3D laser scanner carried by a micro aerial vehicle. To compensate for small inaccuracies in pairwise registration, we used multiple edges between neighboring poses in the final trajectory optimization, where every single edge encoded a surface-to-surface correspondence using the error metric in (11). In this paper, we no longer distinguish between pairwise initial alignment and subsequent global optimization. Instead, both the initial alignment in the local windows and the global trajectory optimization in case of loop closures are formulated in exactly the same multi-edge graph optimization approach.

[Fig. 3 diagram: vertices $v^0$, $v^{i-1}$, and $v^i$, with the transformations between them drawn as dashed lines.]
Fig. 3. Example of connecting a frame at vertex $v^i$ to the first frame $v^0$ and the last frame $v^{i-1}$. Instead of using a single edge encoding the transformations (dashed lines), we use one edge per point correspondence. Instead of repeatable features, we use raw points and iteratively refine the matching.

E. Multi-Edge Graph Optimization

In a graph $G(V, E)$, neighboring poses in the trajectory form the vertices $v^i \in V$, and spatial constraints (transformations) between two vertices $v^i$ and $v^j$ are represented by edges $e_{ij} \in E$. Instead of adding only a single edge between two vertices that encodes a transformation and the corresponding covariance matrix, we search for corresponding points between the respective point clouds and add multiple edges, one for each found correspondence (see Fig.
3). Each edge in the graph encodes two entities: a local contribution to the measurement error $\mathbf{e}$ and an information matrix $\mathbf{H}$, which represents the uncertainty of the measurement error. The information matrix is defined as the inverse of the covariance matrix, i.e., it is symmetric and positive semi-definite. For the error measurement between two vertices $v^i$ and $v^j$ and the correspondence pair $(\mathbf{p}_{i,m}, \mathbf{p}_{j,n})$, we use the point-to-point difference vector and approximate its information matrix using the generalized error metric (11):

$$\mathbf{e}_{ij,mn}\left({}^{ij}\mathbf{T}\right) = \mathbf{p}_{j,n} - {}^{ij}\mathbf{T}\,\mathbf{p}_{i,m}, \tag{12}$$

$$\mathbf{H}_{ij,mn}\left({}^{ij}\mathbf{T}\right) = \left(\Sigma_n^{P_j} + {}^{ij}\mathbf{R}\,\Sigma_m^{P_i}\,{}^{ij}\mathbf{R}^{\top}\right)^{-1}. \tag{13}$$

As a result, every edge contributes its approximate surface-to-surface error term to the system information matrix, automatically giving lower influence to incompatible or false correspondences and quickly leading to alignment even in case of larger initial displacements.

For the actual optimization, we follow an iterative procedure: 1) we estimate correspondence pairs in $P_j$ for all (or a subset of) points $\mathbf{p}_{i,m} \in P_i$ for every two vertices $(v^i, v^j)$ that are to be connected, and 2) we optimize the resulting linearized system for a maximum of ten inner iterations. We repeat these two steps for a maximum of ten outer iterations. For a fast initial coarse alignment in early outer iterations and an accurate refinement in later ones, we use a linearly decreasing distance threshold for correspondence pairs, starting with 2 m in the first iteration and going down to two times the expected local noise (4). In every outer iteration, the graph is optimized using dense Cholesky decomposition and Levenberg-Marquardt within the g2o framework [28]. For both inner and outer iterations, we stop when the system has converged. Convergence in graph optimization (inner iterations) can be detected based on the changes in both the view poses and the system error, as well as on the damping factor applied by Levenberg-Marquardt.
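The per-correspondence edge terms of Eqs. (12)-(13), the plane-like covariance of Eq. (9), and the simplified likelihood of Eq. (11) can be sketched in NumPy as follows. This is an illustrative sketch only, not the g2o-based implementation described above. The helper names are hypothetical, transformations are assumed to be 4×4 homogeneous matrices mapping frame i into frame j, and the final threshold of 2 cm in `distance_threshold` is an assumed stand-in for two times the expected local noise from Eq. (4).

```python
import numpy as np


def plane_covariance(normal, eps=1e-3):
    """Covariance of Eq. (9): variance eps along the normal, 1 within the surface.

    For any rotation R whose first column is the unit normal n,
    R diag(eps, 1, 1) R^T equals eps * n n^T + (I - n n^T).
    """
    n = normal / np.linalg.norm(normal)
    outer = np.outer(n, n)
    return eps * outer + (np.eye(3) - outer)


def edge_terms(T_ij, p_im, p_jn, cov_im, cov_jn):
    """Residual (Eq. (12)) and information matrix (Eq. (13)) of one correspondence edge.

    T_ij: 4x4 homogeneous transform mapping points of frame i into frame j.
    """
    R, t = T_ij[:3, :3], T_ij[:3, 3]
    error = p_jn - (R @ p_im + t)                            # Eq. (12)
    information = np.linalg.inv(cov_jn + R @ cov_im @ R.T)   # Eq. (13)
    return error, information


def surface_to_surface_cost(T_ij, pairs, eps=1e-3):
    """Simplified likelihood L(T) of Eq. (11), summed over all correspondence edges.

    pairs: iterable of (p_im, n_im, p_jn, n_jn) with points and their normals.
    """
    total = 0.0
    for p_im, n_im, p_jn, n_jn in pairs:
        e, H = edge_terms(T_ij, p_im, p_jn,
                          plane_covariance(n_im, eps), plane_covariance(n_jn, eps))
        total += float(e @ H @ e)
    return total


def distance_threshold(outer_iter, num_outer, d_start=2.0, d_end=0.02):
    """Correspondence distance threshold, decreasing linearly from d_start to d_end."""
    if num_outer <= 1:
        return d_end
    alpha = outer_iter / (num_outer - 1)
    return (1.0 - alpha) * d_start + alpha * d_end
```

For two points on the plane z = 1 m with normal (0, 0, 1) and the identity transform, a 10 cm offset within the plane costs about 0.005, whereas the same offset along the normal costs about 5. Offsets within the surface are cheap and offsets along the normal are expensive, which is the surface-to-surface behavior described in Sec. III-D.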
For detecting convergence of the overall graph refinement in the outer iterations, we check whether the view pose connectivity and the correspondences between connected view poses have changed. When no more changes are found and the inner optimization has converged, we stop optimizing the trajectory.

F. Local Window Alignment

To obtain a rough initial pose estimate for a newly acquired frame, standard SLAM procedures would first register the new frame against the last (key) frame and then search for possible loop closures for a subsequent global optimization. Instead, we determine a local window of neighboring poses and simultaneously align the new frame against all frames acquired at poses within the local window. Referring to Fig. 4, for a pose at $v^i$ we search for the closest poses (i.e., camera origins) in 3D. The found candidates are checked for a similar viewing direction by means of the angle between the camera z axes. This rough initial check suffices since the subsequent alignment accurately deals with overlapping and non-overlapping measurement volumes. We define the local window to contain 1) the last acquired frame and the last acquired keyframe, as well as 2) all poses within a radius $r$ around the camera origin w.r.t. the current pose estimate that have a similar orientation. To obtain constant-time initial alignments, we additionally use an upper limit of $w$ for the number of neighboring poses in the local window and sample the matches between neighboring frames to keep both the number of vertices and the number of edges in the constructed subgraph constant.

[Fig. 4 diagram: camera trajectory with vertices $v^0$, $v^{i-1}$, and $v^i$, showing the local window, the extended local window, and the global alignment.]
Fig. 4. Alignment in local windows as opposed to pairwise alignments. Aligning a frame (x-axis red, y-axis green, and z-axis blue) to multiple other frames tends to be more stable and to drift less. In this example of a camera moving along a circular trajectory, the local window around the vertex $v^i$ includes the last frame (at $v^{i-1}$) and the starting pose $v^0$ upon loop closure.

Once the local window is determined, the newly acquired frame is aligned to all frames in the local window by estimating only the pose of the frame being aligned; the other poses are fixed during optimization. In contrast to pairwise registration, this one-to-many alignment is more stable and tends to drift less (see the experimental evaluation in Sec. IV).
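The window selection rule of Sec. III-F can be written down as a small function. This is a hedged sketch: the `Pose` record, the function name, and the default radius (0.5 m), angle limit (30 degrees), and window size (5) are hypothetical choices, since the text does not fix values for $r$ or $w$ here. Following the description, similar orientation is tested through the angle between the camera z axes, and the closest candidates are added first until the upper limit $w$ is reached.

```python
import numpy as np
from dataclasses import dataclass


@dataclass
class Pose:
    index: int               # frame index along the trajectory
    position: np.ndarray     # camera origin in the world frame, shape (3,)
    z_axis: np.ndarray       # unit viewing direction (camera z axis), shape (3,)


def local_window(current, history, last_keyframe_index, radius=0.5,
                 max_angle_deg=30.0, max_size=5):
    """Return the frame indices forming the local window of `current`.

    history: earlier poses ordered by index (the last entry is the last frame).
    """
    window = []
    # 1) Always include the last acquired frame and the last keyframe.
    if history:
        window.append(history[-1].index)
    if last_keyframe_index is not None and last_keyframe_index not in window:
        window.append(last_keyframe_index)
    # 2) Camera origins within `radius` that look in a similar direction.
    cos_limit = np.cos(np.deg2rad(max_angle_deg))
    candidates = []
    for pose in history:
        dist = np.linalg.norm(pose.position - current.position)
        similar = float(pose.z_axis @ current.z_axis) >= cos_limit
        if dist <= radius and similar and pose.index not in window:
            candidates.append((dist, pose.index))
    candidates.sort()        # closest camera origins first
    for _, idx in candidates:
        if len(window) >= max_size:
            break            # upper limit w keeps the subgraph size constant
        window.append(idx)
    return window
```

Because old frames enter the window purely by proximity and orientation, a revisited place shows up here as an index far from the current one, which is the cue that Sec. III-G uses for loop closure detection.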
The one-to-many alignment also allows for efficiently detecting loop closures and possible inconsistencies in the trajectory estimate, as described next.

G. Loop Closure Detection and Global Optimization

Our primary means of detecting loop closures is to inspect the poses found in the local window. If a similar pose is found that was acquired long ago (in terms of time and frame index), a loop closure is detected. Since our local initial alignment is quite stable without considerable drift, loop closures can be easily detected as long as the camera trajectory is confined to a single room. In larger environments, drift in the local alignments accumulates and more sophisticated means for recognizing previously visited places are needed [29].

Once the local window contains an earlier pose along the trajectory, we compare the transformations obtained from the alignment in the local window with those in the global graph built so far. In case of conflicts, e.g., larger jumps in the estimated pose or no convergence in the optimization, we trigger an alignment in an extended local window. This extended window includes the 1-ring neighborhood of the local window. For this alignment, all poses in the local window are optimized, and only the poses in the extended border (i.e., in the 1-ring neighborhood) are fixed. If the extended local window contains earlier poses or shows conflicts after local alignment, global trajectory optimization is triggered.

The global optimization of the trajectory follows the same principle as the local alignment. To save processing time, however, the global graph does not contain all poses but only a limited set of keyframes.

H. Keyframe Selection

Several strategies exist to decide whether or not to add a new keyframe, e.g., adding every n-th frame, applying rotational and translational thresholds, or applying thresholds on registration error variances or on the number of matched features.
We apply a rotational and a translational threshold as fixed upper limits in order to avoid large distances between keyframes even if the alignments in between are good. In addition, we use a measure based on matching quality and uncertainty along the dimensions of the transformation. After the local alignment of a newly acquired frame, we inspect the determined transformation $\mathbf{T}$ to the last keyframe and compute an estimate of its uncertainty. We use the approximation by Censi [30] to compute the covariance matrix:

$$\Sigma_{\mathbf{T}} \approx \left(\frac{\partial^2 L}{\partial \mathbf{x}^2}\right)^{-1} \frac{\partial^2 L}{\partial \mathbf{c}\,\partial \mathbf{x}}\, \Sigma(\mathbf{c}) \left(\frac{\partial^2 L}{\partial \mathbf{c}\,\partial \mathbf{x}}\right)^{\top} \left(\frac{\partial^2 L}{\partial \mathbf{x}^2}\right)^{-1}, \tag{14}$$

where $L$ is the simplified likelihood function in (11), $\mathbf{c}$ denotes the individual correspondences $C$ found between the two point clouds $P_i$ and $P_j$, and $\Sigma(\mathbf{c})$ is the covariance of the correspondence pairs. Note that in (14), the relative transformation between two view poses is not represented as a homogeneous transformation matrix $\mathbf{T}$ but in a parameterized form $\mathbf{x} = (\mathbf{t}, \mathbf{q})^{\top}$ with translation $\mathbf{t}$ and rotation given by the unit quaternion $\mathbf{q}$. We then follow the approach of Kerl et al. [6] to compute an entropy-based measure

$$H(\mathbf{T}, \Sigma_{\mathbf{T}}) \propto \ln\left(\lvert \Sigma_{\mathbf{T}} \rvert\right), \tag{15}$$

using the determinant of $\Sigma_{\mathbf{T}}$. The entropy of the current transformation (against the last keyframe) is then compared to the stored entropy of the last keyframe (from when it was added). If the ratio between the two entropy measures falls below a predefined threshold, the last (not the currently aligned) frame is added as a keyframe, and the local alignment is repeated.

I. Point Subsampling and Correspondence Filtering

An important aspect of the alignment is determining correspondences between frames. Every such correspondence contributes an edge to both the subgraph and the global graph optimization.
TABLE I. Relative Pose Error (RPE) in Initial Alignments (without global optimization): RMSE of RPE(Δ) in m/s with Δ = 1 s.
Column groups: Pairwise Mesh Registration (n = 1, n = 2, n = 4); Mesh Registration + Filtering with n = 4 (k = 1, k = 2, k = 3, k = 4); Local Alignment + Filtering with n = 4, k = 4 (w = 2, w = 3, w = 4, w = 5).
Rows: desk, long office, . . ., Avg. improvement.
Mesh Registration: the input images are subsampled by using only every n-th row and column, e.g., n = 4 corresponds to a 160 × 120 image.
Filtering: the local neighborhood used by the multilateral filter is sequentially expanded to include the 1- to k-ring neighborhoods.
Local alignment: the window size w determines the number of (closest) vertices used for optimization of the subgraph.

To use only a small number of correspondences without losing much information, we follow a multi-stage strategy: we first subsample query points from the frame to be aligned and then reject found correspondences that are unlikely to contribute to the alignment. We sample query points from two distributions: uniformly over the rows and columns of the image and uniformly in normal space. The intuition behind the latter is that we want to draw samples from all sur
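The two query-point distributions named above, uniform over the image rows and columns and uniform in normal space, can be sketched as follows. The function names, the binning of normals by azimuth and inclination, and the round-robin draw over non-empty bins are assumptions of this sketch, since the description in this copy breaks off before such details. The idea it illustrates is that rare surface orientations are not drowned out by the dominant ones.

```python
import numpy as np


def grid_samples(height, width, step):
    """Uniform samples over image rows and columns: every step-th pixel."""
    rows, cols = np.meshgrid(np.arange(0, height, step), np.arange(0, width, step),
                             indexing="ij")
    return np.stack([rows.ravel(), cols.ravel()], axis=1)


def normal_space_samples(normals, num_samples, bins=4, rng=None):
    """Pick point indices spread evenly over bins of normal directions.

    normals: (N, 3) unit normals. Bins discretize azimuth and inclination
    (a simple, hypothetical binning of the sphere of directions).
    """
    rng = np.random.default_rng() if rng is None else rng
    azimuth = np.arctan2(normals[:, 1], normals[:, 0])            # in [-pi, pi]
    inclination = np.arccos(np.clip(normals[:, 2], -1.0, 1.0))     # in [0, pi]
    a_bin = np.minimum(((azimuth + np.pi) / (2 * np.pi) * bins).astype(int), bins - 1)
    i_bin = np.minimum((inclination / np.pi * bins).astype(int), bins - 1)
    keys = a_bin * bins + i_bin
    # Shuffle the members of each non-empty bin.
    buckets = [list(rng.permutation(np.flatnonzero(keys == k))) for k in np.unique(keys)]
    chosen = []
    # Round-robin over bins so that rare orientations are sampled as often as common ones.
    while len(chosen) < num_samples and any(buckets):
        for bucket in buckets:
            if bucket and len(chosen) < num_samples:
                chosen.append(int(bucket.pop()))
    return np.array(chosen, dtype=int)
```

For a 640 × 480 image with a step of 4, `grid_samples` yields 19200 query pixels. If 98 of 100 normals face the camera and 2 face sideways, a four-point normal-space sample already contains both sideways points.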

holz@ais.uni-bonn.de, behnke@cs.uni-bonn.de Fig. 1. Typical result of aligning a sequence of RGB-D images. Left: approximate surface reconstruction (unfiltered) of the first cloud. Right: the points of all aligned point clouds. work [14] for 3D laser scan registration and mapping with micro aerial vehicles. In order to compensate for the non .

Issue #2 for image reconstruction: Incomplete data For “exact” 3D image reconstruction using analytic reconstruction methods, pressure measurements must be acquired on a 2D surface that encloses the object. There remains an important need for robust reconstruction algorithms that work with limited data sets.

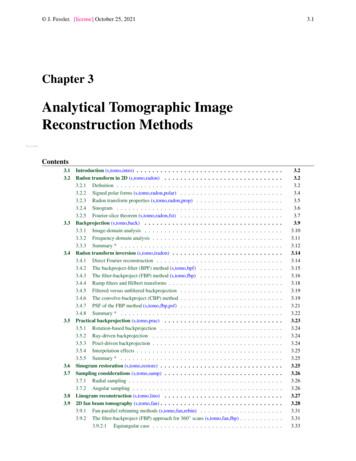

statistical reconstruction methods. This chapter1 reviews classical analytical tomographic reconstruction methods. (Other names are Fourier reconstruction methods and direct reconstruction methods, because these methods are noniterative.) Entire books have been devoted to this subject [2-6], whereas this chapter highlights only a few results.

Step-by-Step Guide to Registration Step 1: Prepare for Registration Make sure you meet the eligibility requirements for enrolling. Check the Registration Timeline to ensure registration is open. Note the following: Registration and Payments All registration and payments must be done online using the steps below. Plan Ahead:

approximate string joins. More formally, we wish to ad-dress the following problem: Problem 1 (Approximate String Joins) Given tables 1 and # 3with string attributes 1 ,an integer ), retrieve all pairs of ecords 1 3 such that edit distance(1 0) 3) . Our techniques for approximate string processing in databases share a principlecommon .

is a query string. Approximate selections are special cases of the similarity join operation. While several predicates are introduced and benchmarked in [5], the extension of ap-proximate selection to approximate joins is not considered. Furthermore, the effect of threshold values on accuracy for approximate joins is also not considered. 3 .

Zeagle Ranger BCD from Boston Scuba. Approximate value 750. * Hollis 200 LX Regulator. Approximate value 650. * 2016 Raffle! 5 per ticket, 3 for 10 The amazing prizes for the 2016 Raffle include: 1-Week Trip to Saba/St. Kitts with Explorer Ventures - Approximate value 2,000. * 4-Night Stay at Maluku Divers, in Indonesia - Approximate value .

Comments on Lab 1 24 Sampling part of Lab 1 24 Reconstruction part of Lab 1 25 Lowpass reconstruction filters 26 DT lowpass reconstruction filters 29 Reading: EE 224 handouts 2, 16, 18, 19, and lctftsummary (review); § 1.2.1, § 2.2.2, § 4.3, and § 7.1–§ 7.3 in the textbook1. 1 A. V. Oppenheim and A. S. Willsky. Signals & Systems .

eral thousands of genes, but only for a few hundred tissue samples. The classical statistical methods are often simply not applicable in these \high-dimensional" situations. The course is divided into 4 chapters (of unequal size). Our rst chapter will start by introducing ridge regression, a simple generalisation of ordinary least squares. Our study of this will lead us to some beautiful .