Creating Deep Learning Based Speech Products In Record Time

Samer Hijazi, CTO, BabbleLabs Inc.

The New World of Speech Technology
Live two-way audio/video communications; audio/video sharing and distribution; speaker identity; consumer browsing, shopping, and support; professional transcription and forensics; full language dialog and capture; speech-based device control. 8B speakers.

The Opportunity
22B microphones by 2020; 7B phones, radios, and TVs delivering voice; YouTube uploads: 13B minutes per year; 200T minutes per year of device interaction; 1Q words per year in voice calls.
Speech market growth: 38.3% (Statista 2018: speech recognition technology market, 2016-2014).

AI Meets Speech
More sophisticated models, more data, more training.
Massive data corpus: 10s of 1,000 hr of speech, 10s of 1,000 hr of noise, 10s of 1,000 room impulse responses (RIRs). Never train on the same data twice.
Massive compute: 88 TFLOPS per engineer.
Applications: speech enhancement, speech recognition, speech UI dialog.
[Figure: audio examples labeled 0 dB and 21 dB]
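"Never train on the same data twice" is usually achieved by synthesizing each training pair on the fly from separate speech, noise, and RIR corpora. The sketch below is a minimal illustration under our own assumptions, not BabbleLabs' pipeline: the function names are hypothetical, the SNR is drawn uniformly from -5 to 15 dB (the typical enhancement target range quoted on the noise-level slide), and the training target is taken to be the dry clean speech.

```python
import math
import random

def convolve(x, h):
    """Full linear convolution of two sample lists (direct form)."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y

def power(x):
    """Mean power of a signal."""
    return sum(v * v for v in x) / len(x)

def mix_at_snr(speech, noise, snr_db):
    """Scale `noise` so 10*log10(P_speech / P_scaled_noise) == snr_db, then add.
    Assumes `noise` is at least as long as `speech` and not all zeros."""
    n = noise[:len(speech)]
    scale = math.sqrt(power(speech) / (power(n) * 10 ** (snr_db / 10)))
    return [s + scale * v for s, v in zip(speech, n)]

def random_training_example(speech_corpus, noise_corpus, rir_corpus, rng,
                            snr_range=(-5.0, 15.0)):
    """Draw a fresh (noisy, clean) pair: random utterance, noise clip,
    noise offset, RIR, and SNR. Hypothetical helper for illustration."""
    clean = rng.choice(speech_corpus)
    noise = rng.choice(noise_corpus)
    rir = rng.choice(rir_corpus)
    reverberant = convolve(clean, rir)[:len(clean)]   # add room reverberation
    start = rng.randrange(len(noise) - len(clean) + 1)
    segment = noise[start:start + len(clean)]
    noisy = mix_at_snr(reverberant, segment, rng.uniform(*snr_range))
    return noisy, clean
```

Because the utterance, noise clip, noise offset, RIR, and SNR are all drawn independently, the number of distinct mixtures grows multiplicatively with corpus size, so in practice a given pair is essentially never repeated.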

Technology, Product, Customers, End Users
Technology: unique data sets and training, built on compute, data, and algorithms.
Product: deep learning speech software (speech enhancement), delivered as platform-optimized solutions.
Customers: technology license.
End user: consumer audio/video sharer recording in the real world.

Clear Speech Everywhere
In production for real-world video sharing, production, streaming, and audio.
Common product delivered across platforms. Primary products: on-device license and Cloud API. For visibility and demonstration: web UI and Android & iPhone apps.
Foundation to product release in 28 weeks!

What Is Speech Enhancement?

Human – Human Interface Challenges

Human – Machine Interface Challenges

BabbleLabs' Answer to These Challenges: Clear Cloud™
[Figure: noisy vs. enhanced]

Outline
A bit about noisy speech
Traditional speech enhancement
Deep neural network approaches
Closing thoughts

Acoustic Impairments Model
Ambient/stationary noise, reverberation, non-stationary noise, competing talkers.
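One common way to write these impairments as a single-microphone signal model, assuming they combine additively and independently (the notation here is ours, not the slide's): $s$ is the target talker, $h$ the room impulse response from that talker to the microphone (reverberation), $s_k$ and $h_k$ the $K$ competing talkers and their impulse responses, and $n_{\mathrm{stat}}$, $n_{\mathrm{nonstat}}$ the ambient/stationary and non-stationary noise.

$$
y(t) = (h * s)(t) + \sum_{k=1}^{K} (h_k * s_k)(t) + n_{\mathrm{stat}}(t) + n_{\mathrm{nonstat}}(t)
$$

Each remedy listed on the next slide targets a different term: dereverberation undoes $h$, source separation removes the $s_k$ terms, and speech enhancement suppresses the noise terms.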

Solutions to Acoustic Impairments
Speech enhancement, source separation, beamforming, dereverberation.

Classes of Speech Sounds (ARPABET Phonetic Symbols)
Vowel: iy, ih, eh, ey, ae, aa, aw, ay, ah, ao, oy, ow, uh, uw, ux, er, ax, ix, axr, axh
Semivowel: l, r, w, y, hh, hv, el
Affricate: jh, ch
Stop: b, d, g, p, t, k, dx, q
Nasal: m, n, ng, em, en, eng, nx
Fricative: s, sh, z, zh, f, th, v, dh
Example: "problems" = p r aa bcl b l ax m z
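The table above can be encoded as a lookup that labels each phone in a transcription with its class. This is a small illustrative sketch: the names are hypothetical, and the handling of TIMIT-style closure symbols such as `bcl` (which the table does not list) is our own assumption.

```python
# Phone classes from the ARPABET table (TIMIT-style symbols).
PHONE_CLASSES = {
    "vowel": "iy ih eh ey ae aa aw ay ah ao oy ow uh uw ux er ax ix axr axh".split(),
    "semivowel": "l r w y hh hv el".split(),
    "affricate": "jh ch".split(),
    "stop": "b d g p t k dx q".split(),
    "nasal": "m n ng em en eng nx".split(),
    "fricative": "s sh z zh f th v dh".split(),
}
PHONE_TO_CLASS = {p: c for c, phones in PHONE_CLASSES.items() for p in phones}

def classify(transcription):
    """Map a space-separated phone string to sound-class labels.

    Symbols ending in 'cl' (stop closures such as 'bcl') are not in the
    table; labeling them 'closure' is an assumption of this sketch.
    """
    labels = []
    for phone in transcription.split():
        if phone in PHONE_TO_CLASS:
            labels.append(PHONE_TO_CLASS[phone])
        elif phone.endswith("cl"):
            labels.append("closure")
        else:
            labels.append("unknown")
    return labels
```

For the slide's example, `classify("p r aa bcl b l ax m z")` labels the word "problems" as stop, semivowel, vowel, closure, stop, semivowel, vowel, nasal, fricative.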

Speech Spectrum
[Figure: speech spectrum showing the fundamental frequency F0 (e.g., 150 Hz), its harmonics, and formants F1, F2, F3]
Fundamental frequency F0: females/children 200 to 400 Hz; males 60 to 150 Hz.
Formant F1: associated with the size of the mouth opening; F1 rises as the opening widens (e.g., AA at 580 Hz).
Formant F2: associated with changes in the oral cavity such as tongue position and lip activity.
Formant F3: associated with front vs. back constriction in the oral cavity.
Harmonics: up to 20 kHz. Hearing range: 20 Hz to 20 kHz.
Typical audio sampling rates (kHz): 8 (telephony), 11.025, 22.05 (MP3s), 32 (cassette), 44.1 (CD), 48 (DVD).
Sine-wave speech: formants are estimated and used to synthesize speech. Examples generated using Dan Ellis's software.
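Sine-wave speech replaces the harmonic structure with one sinusoid per formant track. The sketch below shows only the synthesis step, assuming the formant frequencies and amplitudes have already been estimated per frame (the estimation, which is the hard part, is not shown, and this is not Dan Ellis's code). The function name and all track values are hypothetical.

```python
import math

def sine_wave_speech(formant_tracks, amplitude_tracks, fs, hop):
    """Synthesize sine-wave speech: one sinusoid per formant track.

    formant_tracks[k][m]   : frequency (Hz) of formant k in frame m
    amplitude_tracks[k][m] : linear amplitude of formant k in frame m
    Each frame lasts `hop` samples; frequency and amplitude are held constant
    within a frame, and phase is accumulated so each sinusoid stays continuous.
    """
    n_frames = len(formant_tracks[0])
    out = [0.0] * (n_frames * hop)
    for freqs, amps in zip(formant_tracks, amplitude_tracks):
        phase = 0.0
        for m in range(n_frames):
            step = 2 * math.pi * freqs[m] / fs   # phase increment per sample
            for i in range(hop):
                out[m * hop + i] += amps[m] * math.sin(phase)
                phase += step
    return out

# Illustrative steady vowel: F1 = 580 Hz as on the slide; F2/F3 values are made up.
fs, hop = 16000, 160   # 10 ms frames at 16 kHz
signal = sine_wave_speech([[580] * 50, [1000] * 50, [2500] * 50],
                          [[0.5] * 50, [0.3] * 50, [0.2] * 50], fs, hop)
```

All formant tracks must stay below the Nyquist frequency fs/2, which is why 8 kHz telephony audio retains content only up to 4 kHz.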

Noise and Speech Levels
Classroom, hospital, home, store: speech SPL 60 to 70 dB; noise SPL 50 to 55 dB; SNR 5 to 20 dB.
Trains, airplanes: speech SPL 60 to 70 dB; noise SPL 70 to 75 dB; SNR -15 to 0 dB.
Restaurants: speech SPL 60 to 70 dB; noise SPL 59 to 80 dB; SNR -20 to 11 dB.
SPL: sound pressure level relative to the threshold of human hearing, 20 µPa (roughly a mosquito flying 3 m away); 1 Pa = 1 N/m², i.e., force per square meter.
Typical target range for speech enhancement: -5 to 15 dB SNR.
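The dB figures above follow from two standard relations: SPL = 20·log10(p_rms / 20 µPa), and the SNR in dB is the difference of the speech and noise levels. A minimal sketch (function names are ours):

```python
import math

P_REF = 20e-6  # reference pressure: 20 micro-pascals (threshold of hearing)

def spl_db(p_rms):
    """Sound pressure level in dB SPL for an RMS pressure in pascals."""
    return 20 * math.log10(p_rms / P_REF)

def snr_db(speech_spl, noise_spl):
    """SNR in dB is simply the difference of the two levels in dB."""
    return speech_spl - noise_spl

print(spl_db(0.02))      # 0.02 Pa is about 60 dB SPL, the low end of speech
print(snr_db(60, 80))    # loud restaurant: 60 dB speech vs. 80 dB noise
```

The restaurant worst case (-20 dB) falls well below the -5 dB lower end of the typical enhancement target range.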

Sirens
Strong, structured, frequency-modulated tones and overtones.

Wind Noise
Strong low-frequency bursts; stationary, broad spectrum.

Crowd
Broad, non-stationary spectrum in the speech range.

Evaluating Performance of Speech Enhancers
Quality measures assess how a speaker produces an utterance. Is the utterance "natural", "raspy", "hoarse", or "scratchy"? Does it sound good or bad?
Intelligibility measures assess what a speaker said. What did you understand? What is the word error rate?
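Word error rate, the intelligibility question above, is conventionally the word-level edit distance between a reference transcript and a recognizer's (or listener's) hypothesis, divided by the number of reference words. A minimal sketch, assuming whitespace tokenization and a non-empty reference:

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / reference word count,
    computed with a word-level Levenshtein (edit-distance) alignment."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev holds the previous row of the dynamic-programming table:
    # prev[j] = edits needed to turn the reference prefix into hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,          # deletion
                          curr[j - 1] + 1,      # insertion
                          prev[j - 1] + cost)   # substitution or match
        prev = curr
    return prev[-1] / len(ref)
```

For example, `word_error_rate("the cat sat", "the bat sat")` counts one substitution out of three reference words.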

Subjective Measures of Quality
ITU-T P.835 standard for speech enhancement quality assessment. Listeners rate signal distortion (SIG), background distortion (BAK), and overall quality (OVL); OVL is based on the mean opinion score (MOS) rating scale.
Rating: SIG / BAK / OVL
5: Very natural, no degradation / Not noticeable / Excellent: imperceptible
4: Fairly natural, little degradation / Somewhat noticeable / Good: just perceptible, but not annoying
3: Somewhat natural, somewhat degraded / Noticeable but not intrusive / Fair: perceptible and slightly annoying
2: Fairly unnatural, fairly degraded / Fairly conspicuous, somewhat intrusive / Poor: annoying, but not objectionable
1: Very unnatural, very degraded / Very conspicuous, very intrusive / Bad: very annoying and objectionable
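Scores on each P.835 scale are reported as a mean over listeners and utterances. The sketch below averages 1-to-5 ratings per scale and attaches a 95% confidence half-width using a normal approximation. The data layout and function name are assumptions; with few listeners, a Student-t interval would be more appropriate.

```python
import math
from statistics import mean, stdev

SCALES = ("SIG", "BAK", "OVL")

def p835_scores(ratings):
    """Average listener ratings per P.835 scale.

    ratings: list of dicts like {"SIG": 4, "BAK": 3, "OVL": 4}, one per
    listener/utterance, each rating on the 1-5 scale.
    Returns {scale: (mean, 95% CI half-width)} (normal approximation).
    """
    result = {}
    for scale in SCALES:
        values = [r[scale] for r in ratings]
        if any(not 1 <= v <= 5 for v in values):
            raise ValueError(f"{scale} ratings must lie in 1..5")
        if len(values) > 1:
            half_width = 1.96 * stdev(values) / math.sqrt(len(values))
        else:
            half_width = float("nan")   # undefined for a single rating
        result[scale] = (mean(values), half_width)
    return result
```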

Objective Measures of Quality and Intelligibility
Quality: segmental SNR (SNRseg); frequency-weighted segmental SNR (fwSNRseg); weighted spectral slope (WSS); log-likelihood ratio (LLR); Itakura-Saito (IS); cepstral distance (CEP); Hearing Aid Speech Quality Index (HASQI); Perceptual Evaluation of Video Quality (PEVQ); Perceptual Evaluation of Audio Quality (PEAQ); Perceptual Evaluation of Speech Quality (PESQ); Perceptual Objective Listening Quality Analysis (POLQA); composite metrics.
Intelligibility: Normalized Covariance Metric (NCM); Speech Intelligibility Index (SII); High-energy Glimpse Proportion metric; Coherence and Speech Intelligibility Index (CSII); Quasi-stationary Speech Transmission Index (QSTI); Short-Time Objective Intelligibility measure (STOI); Extended STOI measure (ESTOI); Hearing-Aid Speech Perception Index (HASPI); K-Nearest Neighbor Mutual Information Intelligibility Measure (MIKNN); Speech Intelligibility prediction based on a Mutual Information lower bound (SIMI); Speech Intelligibility in Bits (SIIB); Speech-based Envelope Power Spectrum Model with Short-Time correlation (sEPSM); automatic speech recognition (ASR).
The effectiveness of a metric is evaluated by measuring the correlation of its predictions with subjective test data.
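Segmental SNR, the first quality metric listed, averages per-frame SNRs instead of computing one global SNR, so quiet passages count as much as loud ones. In the sketch below, frame SNRs are clamped to [-10, 35] dB, the limits commonly used in the literature (e.g., Loizou's implementation). The 256-sample frame and 128-sample hop are arbitrary assumptions, and the inputs must be at least one frame long.

```python
import math

def segmental_snr(clean, enhanced, frame_len=256, hop=128,
                  floor_db=-10.0, ceil_db=35.0, eps=1e-10):
    """Mean of per-frame SNRs (dB), each clamped to [floor_db, ceil_db].

    Frame SNR = 10*log10(sum(clean^2) / sum((clean - enhanced)^2)).
    Assumes time-aligned signals of at least `frame_len` samples.
    """
    n = min(len(clean), len(enhanced))
    frame_snrs = []
    for start in range(0, n - frame_len + 1, hop):
        x = clean[start:start + frame_len]
        e = [a - b for a, b in zip(x, enhanced[start:start + frame_len])]
        signal = sum(v * v for v in x)
        error = sum(v * v for v in e)
        snr = 10 * math.log10((signal + eps) / (error + eps))
        frame_snrs.append(min(max(snr, floor_db), ceil_db))
    return sum(frame_snrs) / len(frame_snrs)
```

The clamping matters: without it, silent frames (very negative SNR) or perfectly reconstructed frames (unbounded SNR) would dominate the average.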

Speech Intelligibility in Bits (SIIB)
Measures the amount of information shared between speaker and listener. Linguistic models of "clean" speech communication put the typical information rate at 50 to 100 bps.
[Figure: mutual information between the "text" message and clean speech, and between clean and noisy speech]
From: S. Van Kuyk, W. B. Kleijn, and R. C. Hendriks, "An instrumental intelligibility metric based on information theory," IEEE Signal Processing Letters, 2018.
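The intuition behind an information-based metric shows up in the textbook Gaussian case: if clean speech X is Gaussian and the noisy signal is Y = X + N with independent Gaussian noise N, then I(X;Y) = ½·log2(1 + SNR) bits per sample, or equivalently −½·log2(1 − ρ²) in terms of the correlation ρ between X and Y. This toy sketch is not SIIB, which estimates mutual information between clean and noisy speech features and reports bits per second; it only shows why shared information falls as SNR drops.

```python
import math

def gaussian_mi_bits(snr_linear):
    """I(X;Y) in bits per sample for Y = X + N, with X and N independent
    Gaussians and snr_linear = var(X) / var(N)."""
    return 0.5 * math.log2(1.0 + snr_linear)

def mi_from_correlation(rho):
    """Same quantity for jointly Gaussian X, Y with correlation coefficient rho."""
    return -0.5 * math.log2(1.0 - rho * rho)
```

The two forms agree because, for Y = X + N, ρ² = SNR / (1 + SNR). At 0 dB (SNR = 1) the channel carries 0.5 bit per sample, and it approaches zero as the SNR falls.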

Traditional Methods of Speech Enhancement
Most commonly employ a short-time Fourier transform (STFT)-based analysis-modification-synthesis framework.
Apply a frequency-dependent noise suppression function.
Base noise suppression on estimates of speech and noise statistics.
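A Wiener-style gain is one common example of a frequency-dependent suppression function driven by speech and noise statistics. This sketch is our choice of illustration, not a method from the slides. It estimates the a priori SNR per bin crudely, as ξ = max(|R|² − |D|², 0) / |D|², and applies G = ξ / (1 + ξ). Practical systems typically smooth ξ over time, for example with a decision-directed estimator. Noise power estimates must be positive.

```python
def wiener_gain(noisy_power, noise_power, gain_floor=0.0):
    """Per-bin Wiener-style suppression gain for one STFT frame.

    noisy_power[k] : |R(k)|^2 of the noisy frame
    noise_power[k] : estimated |D(k)|^2 (must be > 0)
    gain_floor     : lower bound on the gain, limiting over-suppression
    """
    gains = []
    for r2, d2 in zip(noisy_power, noise_power):
        xi = max(r2 - d2, 0.0) / d2          # crude a priori SNR estimate
        gains.append(max(xi / (1.0 + xi), gain_floor))
    return gains
```

The gain is multiplied onto each STFT bin of the noisy frame before inverse transform and overlap-add: bins dominated by noise are attenuated toward the floor, while bins with high local SNR pass nearly unchanged.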

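Traditional Methods: Spectral Subtraction
Noisy speech model (noisy speech = clean speech + noise):
$$R(\omega) = S(\omega) + D(\omega)$$
Noise magnitude estimate, measured during periods of speech inactivity using a voice activity detector (VAD):
$$|\hat{D}(\omega)|^2 = E\{|R(\omega)|^2\} = E\{|D(\omega)|^2\} \quad \text{when } S(\omega) = 0$$
Noisy speech magnitude:
$$|R(\omega)|^2 = |S(\omega)|^2 + |D(\omega)|^2 + 2\,\mathrm{Re}\{S(\omega)\,D^*(\omega)\}$$
The cross term is ignored: because clean speech and noise are uncorrelated, its expected value is zero.
Clean speech magnitude estimate:
$$|\hat{S}(\omega)|^2 = |R(\omega)|^2 - |\hat{D}(\omega)|^2$$
Clean speech is synthesized from the noisy phase $\phi_r(\omega)$ and the magnitude estimate:
$$\hat{S}(\omega) = |\hat{S}(\omega)|\,\exp\big(j\,\phi_r(\omega)\big)$$
The difference between the noisy and clean phase is not perceptible for SNRs above about 8 dB.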
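The spectral subtraction equations above map directly to a per-frame routine. The minimal sketch below works on one frame of complex STFT bins (framing, windowing, and overlap-add are assumed to happen elsewhere). It averages |R|² over VAD-flagged speech-free frames to estimate the noise, subtracts that estimate, floors negative results, and reuses the noisy phase. The function names and the `floor` parameter are our own.

```python
import cmath

def estimate_noise_power(frames, is_speech):
    """|D_hat(w)|^2: average |R(w)|^2 over frames the VAD marked speech-free."""
    quiet = [f for f, speech in zip(frames, is_speech) if not speech]
    return [sum(abs(f[k]) ** 2 for f in quiet) / len(quiet)
            for k in range(len(quiet[0]))]

def spectral_subtract_frame(noisy_spectrum, noise_power, floor=0.0):
    """Power spectral subtraction for one STFT frame.

    noisy_spectrum : complex bins R(w)
    noise_power    : |D_hat(w)|^2 per bin
    floor          : minimum |S_hat|^2 as a fraction of |R|^2 (avoids negatives)
    Returns S_hat(w) = |S_hat(w)| * exp(j * phase(R(w))).
    """
    out = []
    for r, d2 in zip(noisy_spectrum, noise_power):
        r2 = abs(r) ** 2
        s2 = max(r2 - d2, floor * r2)                 # |R|^2 - |D_hat|^2, floored
        out.append(cmath.rect(s2 ** 0.5, cmath.phase(r)))  # keep the noisy phase
    return out
```

The `floor` term handles bins where the noise estimate exceeds the noisy power. Setting such bins to zero is what produces the "musical noise" artifacts typical of spectral subtraction; a small positive floor softens them.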

Spectral Subtraction: Spectrograms

Spectral Subtraction: Waveforms

Deep Neural Networks for Speech Enhancement
[Figure: DNN approaches: direct, indirect, conventional emulation]
Mirsamadi, Seyedmahdad, and Ivan Tashev. "Causal Speech Enhancement Combining Data-Driven Learning and Suppression Rule Estimation." INTERSPEECH, 2016.

Common Ideal Target Masks
H. Erdogan, J. R. Hershey, S. Watanabe, and J. Le Roux, "Phase-sensitive and recognition-boosted speech separation using deep recurrent neural networks," in Proc. Int. Conf. Acoust., Speech, Signal Process. (ICASSP), 2015, pp. 708–712.
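Ideal masks are computed from the clean speech S and noise N that a training mixture Y = S + N was built from, and the DNN learns to predict them from Y alone. The sketch below computes three widely used masks per STFT bin: the ideal binary mask (local SNR above a criterion `lc_db`), one common ratio-mask definition (|S|²/(|S|²+|N|²))^β with β = 0.5, and the phase-sensitive mask |S|/|Y|·cos(θ_S − θ_Y), which equals Re(S/Y). The exact definitions shown on the original slide are not recoverable. The function name and defaults are assumptions, and bins with zero noise or zero mixture are not handled.

```python
import math

def ideal_masks(S, N, lc_db=0.0, beta=0.5):
    """Per-bin ideal target masks from clean-speech (S) and noise (N) STFT bins.

    IBM : 1 if local SNR (dB) > lc_db else 0
    IRM : (|S|^2 / (|S|^2 + |N|^2)) ** beta
    PSM : |S|/|Y| * cos(theta_S - theta_Y) = Re(S / Y), with Y = S + N
    """
    ibm, irm, psm = [], [], []
    for s, n in zip(S, N):
        y = s + n
        ps, pn = abs(s) ** 2, abs(n) ** 2
        ibm.append(1.0 if 10 * math.log10(ps / pn) > lc_db else 0.0)
        irm.append((ps / (ps + pn)) ** beta)
        psm.append((s / y).real)
    return ibm, irm, psm
```

Unlike the IBM and IRM, the PSM accounts for the phase mismatch between S and Y. It can also exceed 1 or go negative, so it is usually truncated to a range such as [0, 1] before it is used as a training target.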

Georgia Tech System
DNN input features: 7 noisy speech frames plus 1 noise-only frame, each a concatenated log spectrum and mel cepstrum, with the global mean removed and normalized by the global variance.
DNN output: speech and noise log spectra.
Postprocessing: derive the IRM from the speech and noise spectrum estimates, then mix the DNN output with the bias and the noisy magnitude according to the IRM.
Synthesis: enhanced speech is resynthesized using the noisy phase.
Xu, Yong, et al. "A regression approach to speech enhancement based on deep neural networks." IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP) 23.1 (2015): 7-19.
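The input layout described above (7 context frames plus a noise-only frame, globally normalized) can be sketched as follows. This is a hedged illustration under our own assumptions, not the cited system's code. The noise frame is taken as the mean of the first `noise_frames` frames (assumed speech-free), edge frames are repeated at utterance boundaries, and "normalized by the global variance" is read as dividing by the global standard deviation. In practice the mean and standard deviation are computed once on the training set and reused at test time.

```python
from statistics import mean, pstdev

def stack_context(frames, left=3, right=3, noise_frames=6):
    """Build noise-aware DNN inputs: frames t-3..t+3 (edge-padded) followed by
    one noise estimate, the mean of the first `noise_frames` frames."""
    dim = len(frames[0])
    first = frames[:noise_frames]
    noise_est = [sum(f[k] for f in first) / len(first) for k in range(dim)]
    inputs = []
    for t in range(len(frames)):
        vec = []
        for offset in range(-left, right + 1):
            idx = min(max(t + offset, 0), len(frames) - 1)  # repeat edge frames
            vec.extend(frames[idx])
        vec.extend(noise_est)
        inputs.append(vec)
    return inputs

def global_normalize(vectors, eps=1e-8):
    """Subtract the per-dimension global mean and divide by the global std. dev."""
    dims = range(len(vectors[0]))
    mu = [mean([v[k] for v in vectors]) for k in dims]
    sd = [pstdev([v[k] for v in vectors]) for k in dims]
    return [[(v[k] - mu[k]) / (sd[k] + eps) for k in dims] for v in vectors]
```

With D features per frame, each input vector has 7·D + D = 8·D dimensions.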

Spectral Subtractive vs. BabbleLabs DNN

Spectral Subtractive vs. BabbleLabs DNN
Metric: Noisy / Subtractive / BabbleLabs DNN
SNR 1655693
SIIB Gauss [bps]

BabbleLabs Production
[Figure: processing pipeline from noisy magnitude & bias through speech resynthesis (using the noisy phase) to enhanced speech]
90% of the code is in the blue boxes; 90% of the compute is in the orange box.
Prototyping is done in blocking format, while deployment is in streaming format.
Using MATLAB and GPU Coder, we were able to convert the reference code to deployment code in 6 man-weeks.
We are currently porting the DNN using other open source tools, and exploring a migration to GPU Coder to unify the flow if possible.
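The move from blocking to streaming format mostly comes down to carrying state across chunk boundaries so that arbitrary chunking reproduces the whole-signal result. The sketch below shows this for a simple causal FIR filter. The filter is a hypothetical stand-in for one pipeline stage, not BabbleLabs code. An STFT/DNN stage needs the same treatment for its overlapping analysis frames.

```python
class StreamingFIR:
    """Causal FIR filter that keeps its delay-line state between calls, so that
    feeding audio in arbitrary chunks matches one blocking call on the whole signal."""

    def __init__(self, taps):
        self.taps = list(taps)
        self.history = [0.0] * (len(self.taps) - 1)  # last len(taps)-1 input samples

    def process(self, chunk):
        buf = self.history + list(chunk)
        out = []
        for n in range(len(self.history), len(buf)):
            out.append(sum(h * buf[n - k] for k, h in enumerate(self.taps)))
        if self.history:                              # keep the newest samples as state
            self.history = buf[-len(self.history):]
        return out

def blocking_fir(taps, signal):
    """Prototype-style reference: filter the whole signal at once (zero initial state)."""
    return [sum(h * signal[n - k] for k, h in enumerate(taps) if n - k >= 0)
            for n in range(len(signal))]
```

Checking that the streamed output equals the blocking reference sample for sample, across randomly chosen chunk sizes, is a cheap regression test when converting prototype code to deployment code.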

References
Loizou, Philipos C. Speech Enhancement: Theory and Practice. CRC Press, 2007.
Van Kuyk, Steven, W. Bastiaan Kleijn, and Richard C. Hendriks. "An evaluation of intrusive instrumental intelligibility metrics." arXiv preprint arXiv:1708.06027 (2017).
Mirsamadi, Seyedmahdad, and Ivan Tashev. "Causal Speech Enhancement Combining Data-Driven Learning and Suppression Rule Estimation." INTERSPEECH, 2016.
Xu, Yong, et al. "A regression approach to speech enhancement based on deep neural networks." IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP) 23.1 (2015): 7-19.
Erdogan, Hakan, John R. Hershey, Shinji Watanabe, and Jonathan Le Roux. "Phase-sensitive and recognition-boosted speech separation using deep recurrent neural networks." Proc. Int. Conf. Acoust., Speech, Signal Process. (ICASSP), 2015, pp. 708–712.
From ment/ https://looking-to-listen.github.io/

Speak your mind

Speech Enhancement Speech Recognition Speech UI Dialog 10s of 1000 hr speech 10s of 1,000 hr noise 10s of 1000 RIR NEVER TRAIN ON THE SAME DATA TWICE Massive . Spectral Subtraction: Waveforms. Deep Neural Networks for Speech Enhancement Direct Indirect Conventional Emulation Mirsamadi, Seyedmahdad, and Ivan Tashev. "Causal Speech

Deep Learning: Top 7 Ways to Get Started with MATLAB Deep Learning with MATLAB: Quick-Start Videos Start Deep Learning Faster Using Transfer Learning Transfer Learning Using AlexNet Introduction to Convolutional Neural Networks Create a Simple Deep Learning Network for Classification Deep Learning for Computer Vision with MATLAB

2.3 Deep Reinforcement Learning: Deep Q-Network 7 that the output computed is consistent with the training labels in the training set for a given image. [1] 2.3 Deep Reinforcement Learning: Deep Q-Network Deep Reinforcement Learning are implementations of Reinforcement Learning methods that use Deep Neural Networks to calculate the optimal policy.

Speech enhancement based on deep neural network s SE-DNN: background DNN baseline and enhancement Noise-universal SE-DNN Zaragoza, 27/05/14 3 Speech Enhancement Enhancing Speech enhancement aims at improving the intelligibility and/or overall perceptual quality of degraded speech signals using audio signal processing techniques

speech 1 Part 2 – Speech Therapy Speech Therapy Page updated: August 2020 This section contains information about speech therapy services and program coverage (California Code of Regulations [CCR], Title 22, Section 51309). For additional help, refer to the speech therapy billing example section in the appropriate Part 2 manual. Program Coverage

speech or audio processing system that accomplishes a simple or even a complex task—e.g., pitch detection, voiced-unvoiced detection, speech/silence classification, speech synthesis, speech recognition, speaker recognition, helium speech restoration, speech coding, MP3 audio coding, etc. Every student is also required to make a 10-minute

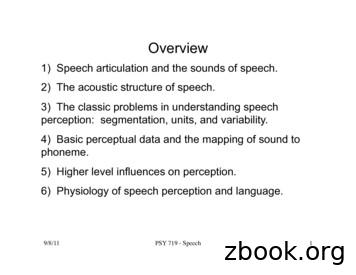

9/8/11! PSY 719 - Speech! 1! Overview 1) Speech articulation and the sounds of speech. 2) The acoustic structure of speech. 3) The classic problems in understanding speech perception: segmentation, units, and variability. 4) Basic perceptual data and the mapping of sound to phoneme. 5) Higher level influences on perception.

1 11/16/11 1 Speech Perception Chapter 13 Review session Thursday 11/17 5:30-6:30pm S249 11/16/11 2 Outline Speech stimulus / Acoustic signal Relationship between stimulus & perception Stimulus dimensions of speech perception Cognitive dimensions of speech perception Speech perception & the brain 11/16/11 3 Speech stimulus

accounting requirements for preparation of consolidated financial statements. IFRS 10 deals with the principles that should be applied to a business combination (including the elimination of intragroup transactions, consolidation procedures, etc.) from the date of acquisition until date of loss of control. OBJECTIVES/OUTCOMES After you have studied this learning unit, you should be able to .