Computer Architecture: A Constructive Approach

Computer Architecture: A Constructive ApproachUsing Executable and Synthesizable SpecificationsArvind 1 , Rishiyur S. Nikhil 2 ,Joel S. Emer 3 , and Murali Vijayaraghavan 11MIT2Bluespec, Inc.3Intel and MITwith contributions fromProf. Li-Shiuan Peh, Abhinav Agarwal, Elliott Fleming,Sang Woo Jun, Asif Khan, Myron King (MIT);Prof. Derek Chiou (University of Texas, Austin);and Prof. Jihong Kim (Seoul National University)c 2012-2013 Arvind, R.S.Nikhil, J.Emer and M.VijayaraghavanRevision: December 31, 2012

AcknowledgementsWe would like to thank the staff and students of various recent offerings of this course atMIT, Seoul National University and Technion for their feedback and support.

Contents1 Introduction1-12 Combinational circuits2-12.1A simple “ripple-carry” adder . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2-12.1.1A 2-bit Ripple-Carry Adder . . . . . . . . . . . . . . . . . . . . . . . . . . . .2-32.2Static Elaboration and Static Values . . . . . . . . . . . . . . . . . . . . . . . . . . .2-62.3Integer types, conversion, extension and truncation . . . . . . . . . . . . . . . . . . .2-72.4Arithmetic-Logic Units (ALUs) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2-92.4.1Shift operations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2-92.4.2Enumerated types for expressing ALU opcodes . . . . . . . . . . . . . . . . . 2-122.4.3Combinational ALUs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-132.4.4Multiplication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-142.5Summary, and a word about efficient ALUs . . . . . . . . . . . . . . . . . . . . . . . 2-163 Sequential (Stateful) Circuits and Modules3.13-1Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-13.1.1Space and time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-13.1.2D flip-flops . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-23.1.3Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-33.2Sequential loops with registers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-43.3Sequential version of the multiply operator . . . . . . . . . . . . . . . . . . . . . . .3-63.4Modules and Interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-73.4.1Polymorphic multiply module . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-113.5Register files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-123.6Memories and BRAMs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-14i

iiCONTENTS4 Pipelining Complex Combinational Circuits4-14.1Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-14.2Pipeline registers and Inelastic Pipelines . . . . . . . . . . . . . . . . . . . . . . . . .4-24.2.1Inelastic Pipelines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-34.2.2Stalling and Bubbles . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-44.2.3Expressing data validity using the Maybe type . . . . . . . . . . . . . . . . .4-54.3Elastic Pipelines with FIFOs between stages . . . . . . . . . . . . . . . . . . . . . . .4-84.4Final comments on Inelastic and Elastic Pipelines . . . . . . . . . . . . . . . . . . . . 4-105 Introduction to SMIPS: a basic implementation without pipelining5.1Introduction to SMIPS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5.1.15-15-1Instruction Set Architectures, Architecturally Visible State, and Implementation State . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .5-25.1.2SMIPS processor architectural state . . . . . . . . . . . . . . . . . . . . . . .5-25.1.3SMIPS processor instruction formats . . . . . . . . . . . . . . . . . . . . . . .5-35.2Uniform interface for our processor implementations . . . . . . . . . . . . . . . . . .5-45.3A simple single-cycle implementation of SMIPS v1 . . . . . . . . . . . . . . . . . . .5-55.4Expressing our single-cycle CPU with BSV, versus prior methodologies . . . . . . . . 5-135.5Separating the Fetch and Execute actions . . . . . . . . . . . . . . . . . . . . . . . . 5-155.5.1Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-176 SMIPS: Pipelined6.16.26.36-1Hazards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6-16.1.1. . . . . . . . . . . . . . . . . . .6-2Two-stage pipelined SMIPS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6-26.2.1A first step: a single rule, inelastic solution . . . . . . . . . . . . . . . . . . .6-36.2.2A second step: a two-rule, somewhat elastic solution . . . . . . . . . . . . . .6-46.2.3A third step: a two-rule, elastic solution using epochs . . . . . . . . . . . . .6-56.2.4A final step: a two-rule, elastic, fully-decoupled, distributed solution . . . . .6-7Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6-9Modern processors are distributed systems7 Rule Semantics and Event Ordering7-17.1Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .7-17.2Parallelism: semantics of a rule in isolation . . . . . . . . . . . . . . . . . . . . . . .7-17.2.1What actions can be combined (simultaneous) in a rule? . . . . . . . . . . . .7-27.2.2Execution semantics of a single rule . . . . . . . . . . . . . . . . . . . . . . .7-57.3Logical semantics vs. implementation: sequential rule execution . . . . . . . . . . . .7-77.4Concurrent rule execution, and scheduling rules into clocks . . . . . . . . . . . . . .7-7

iii7.4.1Schedules, and compilation of schedules . . . . . . . . . . . . . . . . . . . . .7-97.4.2Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .7-97.4.3Nuances due to conditionals . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-127.5Hardware intuition for concurrent scheduling . . . . . . . . . . . . . . . . . . . . . . 7-127.6Designing performance-critical ciruits using EHRs . . . . . . . . . . . . . . . . . . . 7-147.77.6.1Intra-clock concurrency and semantics . . . . . . . . . . . . . . . . . . . . . . 7-157.6.2EHRs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-167.6.3Implementing the counter with EHRs . . . . . . . . . . . . . . . . . . . . . . 7-17Concurrent FIFOs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-187.7.1Multi-element concurrent FIFOs . . . . . . . . . . . . . . . . . . . . . . . . . 7-187.7.2Semantics of single element concurrent FIFOs . . . . . . . . . . . . . . . . . . 7-207.7.3Implementing single element concurrent FIFOs using EHRs . . . . . . . . . . 7-228 Data Hazards (Read-after-Write Hazards)8-18.1Read-after-write (RAW) Hazards and Scoreboards . . . . . . . . . . . . . . . . . . .8-18.2Concurrency issues in the pipeline with register file and scoreboard . . . . . . . . . .8-68.3Write-after-Write Hazards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .8-78.4Deeper pipelines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .8-78.5Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .8-89 Branch Prediction9-19.1Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-19.2Static Branch Prediction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-29.3Dynamic Branch Prediction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-39.4A first attempt at a better Next-Address Predictor (NAP) . . . . . . . . . . . . . . .9-59.5An improved BTB-based Next-Address Predictor . . . . . . . . . . . . . . . . . . . .9-69.5.1Implementing the Next-Address Predictor . . . . . . . . . . . . . . . . . . . .9-69.6Incorporating the BTB-based predictor in the 2-stage pipeline . . . . . . . . . . . . .9-89.7Direction predictors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-99.8Incorporating multiple predictors into the pipeline . . . . . . . . . . . . . . . . . . . 9-109.8.19.9Extending our BSV pipeline code with multiple predictors . . . . . . . . . . . 9-12Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-1510 Exceptions10-110.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-110.2 Asynchronous Interrupts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-210.2.1 Interrupt Handlers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-210.3 Synchronous Interrupts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-310.3.1 Using synchronous exceptions to handle complex and infrequent instructions10-310.3.2 Incorporating exception handling into our single-cycle processor . . . . . . . . 10-310.4 Incorporating exception handling into our pipelined processor . . . . . . . . . . . . . 10-510.4.1 BSV code for pipeline with exception handling . . . . . . . . . . . . . . . . . 10-8

ivCONTENTS11 Caches11-111.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11-111.2 Cache organizations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11-411.2.1 Replacement policies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11-611.2.2 Blocking and Non-blocking caches . . . . . . . . . . . . . . . . . . . . . . . . 11-611.3 A Blocking Cache Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11-711.4 Integrating caches into the processor pipeline . . . . . . . . . . . . . . . . . . . . . . 11-1011.5 A Non-blocking cache for the Instruction Memory (Read-Only) . . . . . . . . . . . . 11-1111.5.1 Completion Buffers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11-1311.6 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11-1512 Virtual Memory12-112.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12-112.2 Different kinds of addresses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12-212.3 Paged Memory Systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12-212.4 Page Tables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12-412.5 Address translation and protection using TLBs . . . . . . . . . . . . . . . . . . . . . 12-512.6 Variable-sized pages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12-712.7 Handling TLB misses. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12-912.8 Handling Page Faults. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12-1012.9 Recursive Page Faults . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12-1112.10Integating Virtual Memory mechanisms ino the processor pipeline . . . . . . . . . . 12-1212.11Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12-1413 Future Topics13-113.1 Asynchronous Exceptions and Interrupts . . . . . . . . . . . . . . . . . . . . . . . . . 13-113.2 Out-of-order pipelines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13-113.3 Protection and System Issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13-113.4 I and D Cache Coherence . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13-113.5 Multicore and Multicore cache coherence . . . . . . . . . . . . . . . . . . . . . . . . . 13-113.6 Simultaneous Multithreading . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13-213.7 Energy efficiency . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13-213.8 Hardware accelerators . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13-2

vA SMIPS ReferenceSMIPS-1A.1 Basic Architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-2A.2 System Control Coprocessor (CP0) . . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-3A.2.1 Test Communication Registers . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-3A.2.2 Counter/Timer Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-3A.2.3 Exception Processing Registers . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-4A.3 Addressing and Memory Protection . . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-6A.4 Reset, Interrupt, and Exception Processing . . . . . . . . . . . . . . . . . . . . . . . SMIPS-7A.4.1 Reset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-7A.4.2 Interrupts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-7A.4.3 Synchronous Exceptions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-8A.5 Instruction Semantics and Encodings . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-9A.5.1 Instruction Formats . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-9A.5.2 Instruction Categories . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-10A.5.3 SMIPS Variants . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-15A.5.4 Unimplemented Instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-15A.6 Instruction listing for SMIPS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . SMIPS-16BibliographyBIB-1

viCONTENTS

Chapter 1IntroductionThis book is intended as an introductory course in Computer Architecture (or ComputerOrganization, or Computer Engineering) for undergraduate students who have had a basicintroduction to circuits and digital electronics. This book employs a constructive approach,i.e., all concepts are explained with machine descriptions that transparently describe architecture and that can be synthesized to real hardware (for example, they can actually be runon FPGAs). Further, these descriptions will be very modular, enabling experimentationwith alternatives.Computer Architecture has traditionally been taught with schematic diagrams and explanatory text. Diagrams describe general structures, such as ALUs, pipelines, caches,virtual memory mechanisms, and interconnects, or specific examples of historic machines,such as the CDC 6600, Cray-1 (recent machines are usually proprietary, meaning the details of their structure may not be publicly available). These diagrams are accompanied bylectures and texts explaining principles of operation. Small quantitative exercises can bedone by hand, such as measuring cache hit rates for various cache organizations on smallsynthetic instruction streams.In 1991, with the publication of the classic Computer Architecture: A QuantitativeApproach by Hennessy and Patterson [4] (the Fith Edition was published in 2011), the pedagogy changed from such almost anecdotal descriptions to serious scientific, quantitativeevaluation. They firmly established the idea that architectural proposals cannot be evaluated in the abstract, or on toy examples; they must be measured running real programs(applications, operating systems, databases, web servers, etc.) in order properly to evaluatethe engineering trade-offs (cost, performance, power, and so on). Since it has typically notbeen feasible (due to lack of time, funds and skills) for students and researchers actuallyto build the hardware to test an architectural proposal, this evaluation has typically takenthe route of simulation, i.e., writing a program (say in in C or C ) that simulates thearchitecture in question. Most of the papers in leading computer architecture conferencesare supported by data gathered this way.Unfortunately, there are several problems with simulators written in traditional programming languages like C and C . First, it is very hard to write an accurate modelof complex hardware. Computer system hardware is massively parallel (at the level ofregisters, pipeline stages, etc.); the paralleism is very fine-grain (at the level of individualbits and clock cycles); and the parallelism is quite heterogeneous (thousands of dissimilar1-1

1-2Ch 1: Introduction (DRAFT)activities). These features are not easy to express in traditional programming languages,and simulators that try to model these features end up as very complex programs. Further,in a simulator it is too easy, without realizing it, to code actions that are unrealistic orinfeasible in real hardware, such as instantaneous, simultaneous access to a global pieceof state from distributed parts of the architecture. Finally, these simulators are very farremoved from representing any kind of formal specification of an architecture, which wouldbe useful for both manual and automated reasoning about correctness, performance, power,equivalence, and so on. A formal semantics of the interfaces and behavior of architecturalcomponents would also benefit constructive experimentation, where one could more easilysubtract, replace and add architectural components in a model in order to measure theireffectiveness.Of course, to give confidence in hardware feasibility, one could write a simulator in thesynthesizable subset of a Hardware Description Language (HDL) such as Verilog, VHDL,or the RTL level of SystemC. Unfortunately, these are very low level languages comparedto modern programming languages, requiring orders of magnitude more effort to develop,evolve and maintain simulators; they are certainly not good vehicles for experimentation.And, these languages also lack any useful notion of formal semantics.In this book we pursue a recently available alternative. The BSV language is a highlevel, fully synthesizable hardware description language with a strong formal semantic basis [1, 2, 7]. It is very suitable for describing architectures precisely and succinctly, andhas all the conveniences of modern advanced programming languages such as expressiveuser-defined types, strong type checking, polymorphism, object orientation and even higherorder functions during static elaboration.BSV’s behavioral model is based on Guarded Atomic Actions (or atomic transactional“rules”). Computer architectures are full of very subtle issues of concurrency and ordering,such as dealing correctly with data hazards or multiple branch predictors in a processorpipeline, or distributed cache coherence in scalable multiprocessors. BSV’s formal behavioral model is one of the best vehicles with which to study and understand such topics (itseems also to be the computational model of choice in several other languages and tools forformal specification and analysis of complex hardware systems).Modern hardware systems-on-a-chip (SoCs) have so much hardware on a single chip thatit is useful to conceptualize them and analyze them as distributed systems rather than asglobally synchronous systems (the traditional view), i.e., where architectural componentsare loosely coupled and communicate with messages, instead of attempting instantaneousaccess to global state. Again, BSV’s formal semantics are well suited to this flavor of models.The ability to describe hardware module interfaces formally in BSV facilitates creatingreusable architectural components that enables quick experimentation with alternativesstructures, reinforcing the “constructive approach” mentioned in this book’s title.Architectural models written in BSV are fully executable. They can be simulated inthe BluesimTM simulator; they can be synthesized to Verilog and simulated on a Verilogsimulator; and they can be further synthesized to run on FPGAs, as illustrated in Fig. 1.1.This last capability is not only excellent for validating hardware feasibility of the models, butit also enables

Dec 31, 2012 · Computer Architecture: A Constructive Approach Using Executable and Synthesizable Speci cations . This book is intended as an introductory course in Computer Architecture (or Computer Organization, or Computer Engineering) for undergraduate students who have had a basic . (the Fith

What is Computer Architecture? “Computer Architecture is the science and art of selecting and interconnecting hardware components to create computers that meet functional, performance and cost goals.” - WWW Computer Architecture Page An analogy to architecture of File Size: 1MBPage Count: 12Explore further(PDF) Lecture Notes on Computer Architecturewww.researchgate.netComputer Architecture - an overview ScienceDirect Topicswww.sciencedirect.comWhat is Computer Architecture? - Definition from Techopediawww.techopedia.com1. An Introduction to Computer Architecture - Designing .www.oreilly.comWhat is Computer Architecture? - University of Washingtoncourses.cs.washington.eduRecommended to you b

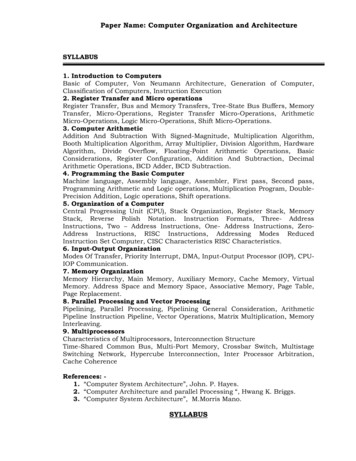

Paper Name: Computer Organization and Architecture SYLLABUS 1. Introduction to Computers Basic of Computer, Von Neumann Architecture, Generation of Computer, . “Computer System Architecture”, John. P. Hayes. 2. “Computer Architecture and parallel Processing “, Hwang K. Briggs. 3. “Computer System Architecture”, M.Morris Mano.

Constructive Computer Architecture Arvind Computer Science & Artificial Intelligence Lab Massachusetts Institute of Technology 6.175: L01 -September 6, 2017

Brief History (Impredicative) Type Theory. 1971 Per Martin-Löf,A theory of Types. (Predicative) Type Theory as Constructive Set Theory. 1979 Per Martin-Löf,Constructive Mathematics and Computer Programming . 1984 Per Martin-Löf,Intuitionistic Type Theory. (Predi

The Basic Law as to the Remedy of a Constructive Trust A constructive trust is an involuntary equitable trust created as a remedy to compel the transfer of property from the person wrongfully holding it to the rightful owner. In re Real Estate Associates Ltd. Partnership Litig., 223 F. Supp. 2d 1109, 1139 (C.D. Cal. 2002). .

to Gandhian constructive programme. He struggle for Puran Swaraj for all irrespective of their caste, class or religion. This meant building up or constructing the nation through nonviolent and truth full means. Constructive programmes formed an integral part of Gandhi’s

constructive and destructive processes. a. Identify surface features caused by constructive processes. Deposition (deltas, sand dunes, etc.) Earthquakes Volcanoes Faults b. Identify and find examples of surface features caused by destructive processes. Erosion (water –rivers and oceans, wind) Weathering Impact of organisms Earthquake Volcano

Advanced Engineering Mathematics 1. First-order ODEs 25 Problems of Section 1.3. The differential equation becomes Advanced Engineering Mathematics 1. First-order ODEs 26 1.4 Exact differential equations Now we want to consider a DE as That is, M(x,y)dx N(x,y)dy 0. The solving principle can be