Tutorial Workshop: Speech Corpus Production and Validation

Tutorial Workshop "Speech Corpus Production and Validation"
LREC 2004, Lisbon
Monday, 24th May 2004
Time: 14:00 to 19:00

Schedule
14:00 - 14:15  Welcome, Introduction of Speakers and Tutorial Overview
14:15 - 14:45  Schiel: Speech Corpus Specification
14:45 - 15:45  Draxler: Speech Data Collection in Practice
15:45 - 16:15  coffee break
16:15 - 16:45  Schiel: Speech Corpus Annotation
16:45 - 17:45  Draxler: Speech Annotation in Practice
17:45 - 18:00  short break
18:00 - 18:45  van den Heuvel: Validation and Distribution of Speech Corpora
18:45 - 19:00  Discussion and Conclusion

Workshop Organisers

Christoph Draxler, BAS Bavarian Archive for Speech Signals
Ludwig-Maximilian-University Munich, Germany
draxler@bas.uni-muenchen.de

Henk van den Heuvel, SPEX Speech Processing Expertise Centre
Centre for Language and Speech Technology, Radboud University Nijmegen
henk@spex.nl

Florian Schiel, Bavarian Archive for Speech Signals
Ludwig-Maximilian-University Munich, Germany
schiel@bas.uni-muenchen.de

Table of Contents
- Speech Corpus Specification
- Speech Data Collection in Practice
- Recording Script DTD
- SpeechRecorder Sample XML Recording Script
- Speech Corpus Annotation
- Speech Annotation in Practice
- Validation and Distribution of Speech Corpora
- Template Validation Report SALA II
- Methodology for a Quick Quality Check of SLR and Phonetic Lexicons
- A Bug Report Service for ELRA
- SLR Validation: Current Trends and Developments

Author Index
- Baum, Micha
- Draxler, Christoph
- Heuvel, Henk van den
- Iskra, Dorota
- Sanders, Eric
- Schiel, Florian
- Vriendt, Folkert de

Speech Corpus Specification
Florian Schiel
- First step of corpus production
- Defines all properties of the desired speech corpus
- Basis for cost estimate
- Basis for production schedule

Before getting started: Some general rules
- All specifications should be fixed in a version-numbered document called 'Specification X.Y'
- If you produce data as a contractor for a client, let all versions be signed by your client.
- Allocate considerable time for the specification phase (10-25% of total project time); observe Hofstadter's Law
- Use the check list on page 65 of the book
- Specify tolerance measures whenever applicable
- If not sure about feasibility, ask experts (LDC, ELDA, SPEX, BAS)

Overview: Important Parts of a Speech Corpus Specification
- Speakers: number, profiles, distribution
- Spoken content
- Speaking style
- Recording procedure
- Annotation
- Meta data, documentation
- Technical specifications
- Final release: corpus structure, media, release plan

In the following slides everything we deem to be absolutely necessary for a speech corpus specification will be underlined. Examples will appear in italic.

LREC 2004 Workshop: Speech Corpus Production and Validation

Speaker number, profiles, distribution
- Distribution of sex: m : f 50 : 50 (5% tolerance)
- Distribution of age: 16-25 : 20%, 26-50 : 60%, >50 : 20% (5% tolerance)
- L0 (mother tongue): German, max 3% non-native speakers
- Dialects: distribution over classified dialects, e.g. North German 50%, South German 50% (5% tolerance)
- Education / Proficiency / Profession
  - School, College, University
  - Novice, Computer user, Expert
- Others: pathologies, foreign accent, speech rate, jewelry etc.

Spoken Content
Specify the spoken content by one of:
- Vocabulary: 14 commands spoken 10 times by 5000 speakers
- Domain: weather, fairy tales, last night's TV program
  Use a closed vocabulary!
- Task: travel planning, program the VCR, find a route on a map
- Phonological distribution: distribution of phonemes, syllables, morphs
or a combination (recommended): 14 commands spoken 10 times, plus a 1 minute monologue about the weather

Eliciting Speaking Styles
Recommendation: use more than one speaking style!
Select from the following basic speaking styles:
- Read speech: prompt sheets, prompting from screen
  Hints:
  - avoid words with ambiguous spellings (acronyms, numbers!)
  - avoid foreign names
  - define how punctuation is to be treated
  - avoid tongue twisters
  - for dictation tasks: define exact rules
  - avoid inappropriate, offensive language

Eliciting Speaking Styles (continued)
- Answering speech: questions on prompt sheets or screen, acoustic prompting
  Hints:
  - speakers very likely deviate from the intended closed vocabulary:
    "Have you been to the cinema today?" (intended: 'No.') "Of course not!"
  - avoid questions that are funny or intimate:
    "Are you male?" (intended: 'Yes/No.') "[laugh] What a revolting idea!"
  - questions should clearly indicate the length of the expected response:
    Bad: "What did you have for breakfast?"
    Good: "What is your phone number?"
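The tolerance figures given for the speaker distribution above can be checked mechanically once speaker profiles are collected. The following is a minimal sketch, not part of the original slides. It assumes that "5% tolerance" means an absolute deviation of five percentage points per category, and the function name `check_distribution` is hypothetical.

```python
from collections import Counter


def check_distribution(values, target, tolerance=0.05):
    """Compare observed category shares with the shares in the specification.

    values:    one category label per recruited speaker, e.g. "m" or "f"
    target:    dict mapping category -> desired share (fractions summing to 1)
    tolerance: allowed absolute deviation per category (assumption: 0.05
               stands for the "5% tolerance" of the specification)
    Returns a dict mapping category -> (observed share, within tolerance?).
    """
    values = list(values)
    if not values:
        raise ValueError("no speakers given")
    counts = Counter(values)
    total = len(values)
    report = {}
    for category, wanted in target.items():
        observed = counts.get(category, 0) / total
        # small epsilon so that a deviation of exactly 5 points still passes
        report[category] = (observed, abs(observed - wanted) <= tolerance + 1e-9)
    return report


# Hypothetical recruitment status: 53 male and 47 female speakers.
speakers_sex = ["m"] * 53 + ["f"] * 47
sex_report = check_distribution(speakers_sex, {"m": 0.5, "f": 0.5})
for category, (share, ok) in sex_report.items():
    print(f"{category}: {share:.0%} {'ok' if ok else 'OUT OF TOLERANCE'}")
```

The same function can be reused for age bands or dialect regions by passing the corresponding labels and target shares.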

Eliciting Speaking Styles (continued)
- Command / Control speech: prompt sheets, prompting from screen, Wizard-of-Oz
  Hints:
  - read commands are not equal to real commands (prosody)
  - real command speech can only be obtained with convincing Wizard-of-Oz settings or a real life system
- Descriptive speech: show a picture or movie and ask for a description
  Hints:
  - more spontaneous than read or answered speech
  - easy way to get speech with restricted vocabulary

Eliciting Speaking Styles (continued)
- Non-prompted speech: guided conversation, role models, task solving, Wizard-of-Oz
  Hints:
  - very similar to spontaneous speech
  - restricted vocabulary
  - requires speakers that can act convincingly
- Spontaneous speech: stealth recording of a conversation
  Hints:
  - legally problematic
  - technical quality often compromised
- Emotional speech: two possibilities, acted or real

Recording Procedure
Specifies the recording situation (not the technical specs)
- Acoustical environment: echo canceled studio, studio, quiet office, office with printer/telephone, office with 1/2/5/10 employees, quiet living room (furniture, open/closed windows) etc.
- The 'script': defines how the speaker acts, e.g. speaker follows instructions while not changing position, speaker drives a car, speaker moves in the living room, speaker points to certain objects while speaking, speaker uses a phone etc.

Recording Procedure (continued)
- Background noise: none, natural, controlled: type and level (only in studio)
- Type, number, position and distance of microphones
  Hints:
  - Use a simple sketch in the specs to clarify the description
  - Take some pictures (if the recording site is accessible)

Annotation
(all kinds of segmentation or labelling)
The specifications should contain:
- Which? Types, conventions/definitions/standards, coverage
- How? Procedures, manual/automatic, training of labelers
- Quality? Error tolerance, double checks, formal checks

Technical Specifications
(the formal properties of signals and annotations, meta data, documentation)
- Signals (minimum requirements)
  - Sample frequency
  - Sample type and width
  - Byte order (if applicable)
  - Number of channels (in one file or separate files)
  - File format (mostly WAV or NIST)
Multimodal corpora require adequate descriptions of all modalities other than speech.

Documentation and Meta Data
- Specify documentation
  Only necessary in the specification if working with a large group of partners: text formats, documentation templates
- Specify meta data (formal documentation of the corpus data)
  Meta data are an essential part of each speech corpus. Therefore their minimum contents should be defined in the specification: speaker profiles, recording protocols
  Hint: Extensive formal (computer readable) meta data help with the later documentation! Collect meta data from the very beginning!

Technical Specifications
- Annotation format
  Recommendation: Use an existing format that fits the requirements
  - SAM: ASCII, line structured, no hierarchy, no linking, not extendable
  - EAF: XML, extendable, no hierarchy, no linking, no points in time
  - BPF: ASCII, line structured, no hierarchy, linking on word level, extendable, overlapped speech
  - ESPS: ASCII, very simple, not supported any more
  - AGS: XML, very powerful, tool libraries
  (see lecture 'Speech Corpus Annotation' for details)
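The minimum signal requirements listed above (sample frequency, sample width, number of channels, file format) lend themselves to an automatic check of every delivered file. The sketch below is an illustration only. It uses Python's standard `wave` module, covers WAV files only, and does not check byte order. The function name `check_wav` and the example values are assumptions, not part of the slides.

```python
import io
import wave


def check_wav(source, sample_rate, sample_width_bytes, channels):
    """Return a list of deviations of a WAV file from the signal specification.

    source may be a file name or a binary file-like object.
    An empty list means that all checked properties match.
    """
    problems = []
    with wave.open(source, "rb") as wav:
        if wav.getframerate() != sample_rate:
            problems.append(
                f"sample rate {wav.getframerate()} Hz, expected {sample_rate} Hz")
        if wav.getsampwidth() != sample_width_bytes:
            problems.append(
                f"sample width {wav.getsampwidth()} bytes, "
                f"expected {sample_width_bytes} bytes")
        if wav.getnchannels() != channels:
            problems.append(
                f"{wav.getnchannels()} channel(s), expected {channels}")
        if wav.getnframes() == 0:
            problems.append("file contains no samples")
    return problems


# Build a short mono 16 kHz / 16 bit test signal in memory (10 ms of silence).
buffer = io.BytesIO()
with wave.open(buffer, "wb") as out:
    out.setnchannels(1)
    out.setsampwidth(2)
    out.setframerate(16000)
    out.writeframes(b"\x00\x00" * 160)

# Check it against a hypothetical 48 kHz / 16 bit / stereo specification.
buffer.seek(0)
problems = check_wav(buffer, sample_rate=48000, sample_width_bytes=2, channels=2)
for problem in problems:
    print(problem)
```

Running such a check over the whole corpus before annotation starts catches recording misconfigurations while re-recording is still feasible.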

Technical Specifications
- Meta data format
  No widely accepted format yet.
  Recommendation: IMDI (tools available, web-based), www.mpi.nl/IMDI/ (see papers in parallel workshop)

Technical Specifications
- Lexicon format (list of all words together with additional information)
  No widely accepted formats yet.
  Hints:
  - code orthography in Unicode whenever possible
  - code pronunciation in SAM-PA or X-SAM-PA
  - use non-formatted, plain text or XML
  - clearly define the type of pronunciation: canonical, citation form, most-likely
  - minimum content: unambiguous orthography, word count, pronunciation

Final Release
- Specify corpus structure (file system structure on media)
  - only necessary in large projects with several partners
  - separate signal data from symbolic data
  - avoid directories with more than 1024 files
- Specify media
  Reliable, durable, platform independent media: CD-R, DVD-R
  Avoid unreliable media: disk, tape, magneto-optical disk, CD-RW, DVD-RW, hard disk
- Define release plan
  - milestones for preparation, pre-validation, collection, postprocessing, annotation, validation phases
  - define topics of pre-validation and validation

What is not part of the specification:
- Logistics
- Recording tools, software
- Recruiting scheme
- Postprocessing procedures
- Annotation tools, software
- Distribution tools, software

Considerations for the cost estimate
- Incentives for speakers
- Advertising for recruitment
- Special hardware
- Training: paid working time!
- Offline time for maintenance
- Costs for media and backup media
- Maintenance of web site / corpus after project termination

Speech Data Collection in Practice
Christoph Draxler
draxler@bas.uni-muenchen.de

Motivation
- You are here:
  - specification is done
- Now you have to
  - recruit speakers
  - allocate resources
  - prepare recordings: set up equipment, implement recording script, test, test, test

Overview
- Equipment
- Software: SpeechRecorder
  - introduction
  - demonstration
- Discussion

Types of speech corpora
- Speech technology
  - speech recognition
  - speech synthesis
  - speaker verification
  - language identification
- Speech ...
  - ethnology
  - sociology

Equipment: Studio
- 2 microphones with pre-amplifiers
  - headset or clip microphone
  - table or room microphone
  - microphone array
  - conference microphones with push-to-talk buttons
- Sensor data
  - laryngograph, palatograph, etc.
- Professional digital audio mixer and audio card

Equipment: in the field
- 2 microphones with pre-amplifiers
  - headset and table microphone
- Digital recording device
  - laptop with external audio interface
  - portable hard disk recorder
  - tape devices: DAT recorder, DV camera with external microphones

Software: SpeechRecorder
- Multi-channel audio recording
- Multi-modal prompting
- Multiple configurable screens
- URL addressing
- Platform independent
- Localizable graphical user interface

Multi-channel audio recording
- ipsk.audio library
  - abstracts from Java Sound API details
  - wrapper classes for ASIO drivers: Digidesign, M-Audio, Emagic, etc.
- Alternative media APIs
  - QuickTime for Java (Mac and Windows)
  - Java Media Framework

Multi-modal prompting
- Prompt display
  - Unicode text
  - jpeg, gif image
  - wav audio
- Prompt sequence
  - sequential or random order
  - manual or automatic progress

Multiple configurable screens
- Speaker screen
  - recording indicator
  - instructions and prompt
- Experimenter screen
  - speaker screen
  - progress monitor
  - signal display, level meters
  - recording control buttons

URL addressing
- All resources are addressed via URLs
  - recording script
  - prompt data
  - signal files
- Perform recordings via the WWW
  - uncompressed audio

SpeechRecorder configuration
- Project: via configuration file
- Session: via parameters
    SpeechRecorder recording script [speaker database [recording directory]]
  default values: anonymous speaker and user directory

Project configuration
- Input sources
- Signal parameters
- Audio library
- Recording sequence and mode
- Screen configuration

Sample project configurations
- BITS synthesis corpus
  - headset and table microphone, laryngograph
  - digital mixer, Digidesign audio card, standard PC
  - 48 kHz / 16 bit
  - speaker and experimenter screen
  - text prompts
  - 5 speakers, 2000 utterances each
- Car command corpus
  - 2 mouse microphones inside the car
  - USB audio device, laptop operated by co-driver
  - 44.1 kHz / 16 bit
  - experimenter screen only
  - audio prompts
  - 25 speakers, 90 utterances each

Recording session configuration
- Recording script in XML format
- Tags for
  - metadata
  - media items
  - instructions to speakers
  - comments for experimenter
  - recording and nonrecording items

Recording script
- Recording items
  - elicit speech from speaker
  - provide hints to experimenter
- Nonrecording items
  - speaker information and distraction
  - status feedback
  - any media type

Recording item
    <!ELEMENT recording (recinstructions?, recprompt, reccomment?)>
    <!ELEMENT recinstructions (mediaitem)>
    <!ELEMENT recprompt (mediaitem)>
    <!ELEMENT reccomment (mediaitem)>
    <!ATTLIST recording
        file          CDATA #REQUIRED
        recduration   CDATA #REQUIRED
        prerecdelay   CDATA #IMPLIED
        postrecdelay  CDATA #IMPLIED
        finalsilence  CDATA #IMPLIED
        beep          CDATA #IMPLIED
        rectype       CDATA #IMPLIED>

Recording phases
(timeline figure; recoverable labels: idle, passive prompt display, active prompt display, passive, postrecdelay, idle)

Sample recording script
    <recording file="004.wav" prerecdelay="2000"
               recduration="60000" postrecdelay="500">
      <recinstructions mimetype="text/UTF-8">
        Please describe the picture
      </recinstructions>
      <recprompt>
        <mediaitem mimetype="image/jpeg" src="002.jpg"/>
      </recprompt>
    </recording>

Demo
- SpeechRecorder installation
- Project specific configuration
  - Edit recording script
  - Configure displays
  - Setup audio input
- Perform recording

Web recording: Access
- Java WebStart application
  - predefined recording script
  - bundled image, audio and video prompts
  - preset signal and display settings
- Optional login
  - check speaker identity

Web recording: Technology
- Record to client memory
  - perform signal quality checks
  - no cleanup on client hard disk necessary
  - potential danger of loss of data
- Automatic upload of recorded signals
  - background process
  - resume after connection failure

Projects using SpeechRecorder
- Bosch spoken commands in car
- BITS synthesis corpus
- IPA recordings in St. Petersburg
- Regional Variants of German - Junior
- Aphasia studies at Klinikum Bogenhausen
- your project here

SpeechRecorder recording script DTD

This Document Type Definition specifies the format for SpeechRecorder recording script files.
Version: 1.0 of April 2004
Author: Christoph Draxler, Klaus Jänsch; Bayerisches Archiv für Sprachsignale, Universität München

    <!ELEMENT session (metadata*, recordingscript)>
    <!ATTLIST session id CDATA #REQUIRED>

    <!ELEMENT metadata (key, value)>
    <!ELEMENT key (#PCDATA)>
    <!ELEMENT value (#PCDATA)*>

    <!ELEMENT recordingscript (nonrecording | recording)+>
    <!ELEMENT nonrecording (mediaitem)>
    <!ELEMENT recording (recinstructions?, recprompt, reccomment?)>
    <!ATTLIST recording
        file          CDATA #REQUIRED
        recduration   CDATA #REQUIRED
        prerecdelay   CDATA #IMPLIED
        postrecdelay  CDATA #IMPLIED
        finalsilence  CDATA #IMPLIED
        beep          CDATA #IMPLIED
        rectype       CDATA #IMPLIED>

    <!ELEMENT recinstructions (#PCDATA)>
    <!ATTLIST recinstructions
        mimetype  CDATA #REQUIRED
        src       CDATA #IMPLIED>

    <!ELEMENT recprompt (mediaitem)>
    <!ELEMENT reccomment (#PCDATA)>

    <!ELEMENT mediaitem (#PCDATA)*>
    <!ATTLIST mediaitem
        mimetype  CDATA #REQUIRED
        src       CDATA #IMPLIED
        alt       CDATA #IMPLIED
        autoplay  CDATA #IMPLIED
        modal     CDATA #IMPLIED
        width     CDATA #IMPLIED
        height    CDATA #IMPLIED
        volume    CDATA #IMPLIED>

Sample XML recording script

This XML document is a sample recording script for the SpeechRecorder application.

    <?xml version="1.0" encoding="UTF-8" standalone="no"?>
    <!DOCTYPE session SYSTEM "SpeechRecPrompts.dtd">
    <session id="LREC Demo recordings">
      <metadata>
        <key>Database name</key>
        <value>LREC Demo 2004</value>
      </metadata>
      <recordingscript>
        <recording prerecdelay="500" recduration="60000" postrecdelay="500" file="US10031030.wav">
          <recinstructions mimetype="text/UTF-8">Please answer</recinstructions>
          <recprompt>
            <mediaitem mimetype="text/UTF-8">How did you get here today?</mediaitem>
          </recprompt>
        </recording>
        <recording prerecdelay="500" recduration="60000" postrecdelay="500" file="US10031031.wav">
          <recinstructions mimetype="text/UTF-8">Please answer</recinstructions>
          <recprompt>
            <mediaitem mimetype="text/UTF-8">What did you do during the last hour?</mediaitem>
          </recprompt>
        </recording>
        <recording prerecdelay="500" recduration="60000" postrecdelay="500" file="US10031032.wav">
          <recinstructions mimetype="text/UTF-8">Please answer</recinstructions>
          <recprompt>
            <mediaitem mimetype="text/UTF-8">What day is it?</mediaitem>
          </recprompt>
        </recording>
        <recording prerecdelay="500" recduration="6000" postrecdelay="500" file="US10031034.wav">
          <recinstructions mimetype="text/UTF-8">Please read the text</recinstructions>
          <recprompt>
            <mediaitem mimetype="text/UTF-8">ATATADEU</mediaitem>
          </recprompt>
        </recording>
        <recording prerecdelay="500" recduration="6000" postrecdelay="500" file="US10031050.wav">
          <recinstructions mimetype="text/UTF-8">Please read the text</recinstructions>
          <recprompt>
            <mediaitem mimetype="text/UTF-8">ATALADEU</mediaitem>
          </recprompt>
        </recording>
      </recordingscript>
    </session>
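A recording script in the format described above can be inspected with any XML parser before a session starts. The following sketch uses Python's standard `xml.etree.ElementTree` on a shortened version of the sample script, written out as well-formed XML (the exact markup is an assumption about the original file). It only illustrates how the element and attribute names fit together and is not part of SpeechRecorder. The function name `list_recordings` is hypothetical, and the unit of `recduration` is not stated in the source, so the value is passed through unchanged.

```python
import xml.etree.ElementTree as ET

# Shortened sample script with two recording items.
SCRIPT = """<session id="LREC Demo recordings">
  <metadata><key>Database name</key><value>LREC Demo 2004</value></metadata>
  <recordingscript>
    <recording prerecdelay="500" recduration="60000" postrecdelay="500"
               file="US10031030.wav">
      <recinstructions mimetype="text/UTF-8">Please answer</recinstructions>
      <recprompt>
        <mediaitem mimetype="text/UTF-8">How did you get here today?</mediaitem>
      </recprompt>
    </recording>
    <recording prerecdelay="500" recduration="6000" postrecdelay="500"
               file="US10031034.wav">
      <recinstructions mimetype="text/UTF-8">Please read the text</recinstructions>
      <recprompt>
        <mediaitem mimetype="text/UTF-8">ATATADEU</mediaitem>
      </recprompt>
    </recording>
  </recordingscript>
</session>"""


def list_recordings(xml_text):
    """Return one dict per <recording> item: file name, recduration, prompt text."""
    root = ET.fromstring(xml_text)
    items = []
    for rec in root.iter("recording"):
        # 'file' and 'recduration' are the two #REQUIRED attributes in the DTD.
        for required in ("file", "recduration"):
            if required not in rec.attrib:
                raise ValueError(f"recording item lacks attribute {required!r}")
        prompt = rec.find("recprompt/mediaitem")
        text = (prompt.text or "").strip() if prompt is not None else ""
        items.append({
            "file": rec.get("file"),
            "recduration": int(rec.get("recduration")),
            "prompt": text,
        })
    return items


recordings = list_recordings(SCRIPT)
for item in recordings:
    print(item["file"], item["recduration"], item["prompt"])
```

Note that ElementTree does not validate against the DTD; the attribute test above covers only the two required attributes of `recording`.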

Speech Corpus Annotation
Florian Schiel
- All information related to signals
- Without annotation no corpus!
- Often the most costly part!

Before getting started: Some general rules
- Minimum required annotation is a basic transcript
- Use standards
- Use existing tools or libraries
- Allocate considerable time for the annotation phase (40-60% of total project time); observe Hofstadter's Law
- Quality is everything!
- Use the check list on page 119
- If not sure about feasibility, ask experts (LDC, ELDA, SPEX, BAS)

Overview: Annotation of a Speech Corpus
- General points: types, dependencies, hierarchy
- Transcription
- Tagging
- Segmentation and Labelling (S&L)
- Manual annotation tools
- Automatic annotation tools
- Formats
- Quality: double check, logging, inter-labeler agreement

Types of Annotation
(figure: ANNOTATION branches into TRANSCRIPT, TAGGING and SEGMENTATION & LABELLING (S&L))
- Transcript and tagging: related to signal via semantics only
- Segmentation & labelling: related to signal via time references

Dependencies and Hierarchies
(figure along a time axis; recoverable labels: TURN S&L, TRANSCRIPT, TAGGING, SEGMENTATION & LABELLING, PROSODY)

Transcription: General
- One transcription file (line) per signal file
- Minimum: spoken words
- Other: noise, syntax, pronunciation, breaks, hesitations, accents, boundaries, overlaps, names, numbers, spellings etc.
- Standard for spelling (Webster, German Duden, Oxford Dict.)
- No capital letters at sentence begin
- No punctuation (or separate it from words by blanks)
- Transcribe digits as words ('12.5' becomes 'twelve point five')

Transcription: Format
- Use or provide a transformation into a standard format (use a 'readable' intermediate format for work)
- Common formats: SAM, (SpeechDat), Verbmobil, MATE, EAF

Transcription
Minimal software requirements
- text editor with 'hot keys', syntax parser (e.g. Xemacs)
- simple replay tool, markup and replay of parts
- use WebTranscribe (see demo)
Logistics
- train transcribers
- 'check-out' mechanism (data base) for parallel work
- two steps: labeling, then correction (preferably by one person!)
- formal checks: syntax, extract word list (typos)
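Two of the transcription rules above (digits written as words, punctuation separated from words by blanks) and the word list extraction mentioned under logistics are easy to automate. The sketch below is a minimal illustration; the function names and the choice of punctuation characters are assumptions. Capitalisation is deliberately not checked, because proper names and, for example, German nouns are legitimately capitalised.

```python
import re
from collections import Counter

PUNCTUATION = r"[.,;:!?]"   # assumed inventory of punctuation marks
LETTER = r"[^\W\d_]"        # any Unicode letter


def check_transcript_line(line):
    """Return a list of rule violations found in one transcript line."""
    problems = []
    for token in line.split():
        if re.search(r"\d", token):
            problems.append(f"digits not written as words: {token!r}")
        elif re.search(LETTER, token) and re.search(PUNCTUATION, token):
            problems.append(f"punctuation attached to word: {token!r}")
    return problems


def word_list(lines):
    """Count all word tokens; tokens without any letter are skipped."""
    counts = Counter()
    for line in lines:
        for token in line.split():
            if re.search(LETTER, token):
                counts[token] += 1
    return counts


print(check_transcript_line("it costs 12.5 euros"))
print(check_transcript_line("hello , how are you ?"))
print(check_transcript_line("hello, world"))

# Words that occur only once are candidates for typos and worth a manual look.
counts = word_list(["start my computer", "start my compuetr"])
print(sorted(word for word, n in counts.items() if n == 1))
```

A word list sorted by frequency is a cheap second opinion: genuine vocabulary items tend to recur, while typing errors mostly appear once.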

Transcription
Example:
    w253 hfd 001 AEW: hallo [PA] [B3 fall] . # "ahm [B2] ich wollt' fragen [NA] [B2] , was heute abend [NA] im Fernsehen [PA] kommt [B3 fall] .
    w253 hfw 002 SMA: hallo . P # was kann ich f"ur Sie tun ?
    w253 hfd 003 AEW: "ah [B2] ich w"urde ganz gern [NA] das Abendprogramm [PA] wissen [B3 fall] .
    w253 hfw 004 SMA: wenn ich Ihnen einen Tip geben darf , P # heute kommt Der Bulle von T"olz auf Sat-Eins um #zwanzig Uhr #f"unfzehn .
    w253 hfd 005 AEW: -/und wa /- [B9] "ah [NA] [B2] gibt es heute [NA] abend eine *Sportshow [PA] [B3 cont] ? P zum Beispiel [NA] Fu"sball [PA] [B3 rise] ?

Tagging
- Markup of words or chunks based on a categorical system
- Often based on an existing transcript
- Examples: dialog acts, parts-of-speech, canonical pronunciation, prosody
Example:
    ORT: 0 good
    ORT: 1 morning
    ORT: 2 have
    ORT: 3 we
    ORT: 4 met
    ORT: 5 before
    DIA: 0,1 GREETING AB
    DIA: 2,3,4,5 QUERY AB

Segmentation & Labeling (S&L)
- segment: (start, end, label)
- point-in-time event: (time, label)
(figure: a label spanning start to end on a time axis, and a label at a single point in time)
- S&L requires more knowledge than transcription (training budget!)
- time effort is proportional to 1 / length of units
- maximize inter- and intra-labeler agreement
Examples: turns/sentences, dialog acts, phrases, words, syllables, phonemes, phonetic features, prosodic events

Segmentation & Labeling (S&L)
Software requirements: Praat (www.praat.org)
Logistics: same as transcription
Example: SAP tier of BAS Partitur Format (BPF)
    SAP: 1232 167 0 Q
    % SAP: 1399 2860 0 E:
    SAP: 4259 884 1 v
    SAP: 5143 832 1 a
    SAP: 5975 914 1 s
    % SAP: 6889 599 2 h
    % SAP: 7488 545 2 aq
    SAP: 8033 662 2 lq
    SAP: 8695 431 2 t
    SAP: 9126 480 2 -H
    SAP: 9606 0 2 @
    SAP: 9606 429 2 n
    SAP: 10035 628 3 z
    SAP: 10663 733 3 i:-I
Field labels in the figure: tier marker, begin, end, word number, phonemic substitution
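Line-structured segment tiers such as the SAP example above are simple to read programmatically. The sketch below parses lines of the form `TIER: number number number label` and checks that consecutive segments are contiguous. One point is an assumption: the slide labels the third column "End", but in the sample values the begin plus the third column always equals the next begin (1232 + 167 = 1399), so the code treats it as a length. The names `Segment`, `parse_tier_line` and `find_gaps` are hypothetical, and the parser does not cover word-level tiers such as `DIA: 0,1 GREETING AB`.

```python
from typing import NamedTuple


class Segment(NamedTuple):
    tier: str
    begin: int      # position of the first sample
    length: int     # third column, read as a length (assumption, see text)
    word: int       # index of the word the segment belongs to
    label: str


def parse_tier_line(line):
    """Parse one segment line, e.g. 'SAP: 1232 167 0 Q'."""
    fields = line.split()
    if len(fields) != 5 or not fields[0].endswith(":"):
        raise ValueError(f"not a segment line: {line!r}")
    tier, begin, length, word, label = fields
    return Segment(tier[:-1], int(begin), int(length), int(word), label)


def find_gaps(segments, offset=0):
    """Return the indices of segments that do not start where the
    previous segment ends (previous begin + length + offset)."""
    gaps = []
    for i in range(1, len(segments)):
        previous = segments[i - 1]
        if segments[i].begin != previous.begin + previous.length + offset:
            gaps.append(i)
    return gaps


lines = [
    "SAP: 1232 167 0 Q",
    "SAP: 1399 2860 0 E:",
    "SAP: 4259 884 1 v",
    "SAP: 5143 832 1 a",
]
segments = [parse_tier_line(line) for line in lines]
print(segments[0])
print("gaps at:", find_gaps(segments))
```

The `offset` parameter is there because other tiers may count differently; a tier in which a segment starting at 0 with value 799 is followed by one starting at 800 would need `offset=1`.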

Manual Annotation Tools
- Transcript: WebTranscribe (see demo), ELAN (www.mpi.nl/tools/elan.html)
- S&L: Praat (www.praat.org)
- Video: ANVIL (www.dfki.de/~kipp/anvil)
- Video transcript: CLAN (childes.psy.cmu.edu/clan/)

Automatic Annotation Tools
- Transcript: -
- Segmentation into words: Viterbi alignment of HMMs, e.g. HTK, Aligner, MAUS
- S&L:
  - Viterbi alignment of HMMs
  - MAUS
  - Elitist Approach: segmentation of phonetic features
- Prosody: ToBI Light, e.g. IMS Stuttgart
- Parts-of-speech: POS tagger, e.g. IMS Stuttgart
- Video transcript: -

Annotation File Formats
- SAM (www.phon.ucl.ac.uk/resource/eurom.html)
  - ASCII, good for simple, single speaker corpora
  - used in SpeechDat
- EAF (Eudico Annotation Format, MPI Nijmegen)
  - Unicode, XML
  - powerful, but not widely used in technical corpora
- BPF (BAS Partitur Format)
  - ASCII, simple, extendable
  - relates tiers over time and word order
- XWAVES
  - basic, but is used by EMU for hierarchical label databases
- AGS (Annotation Graphs)
  - XML, extendable, C-library, Java API

Quality Control & Assessment
- Comprehensive, clear, constant guidelines
- Extensive, consistent, on-going training (forum, meetings)
- Second pass / correction pass, preferably by one person / trainer
- Error logging: documents progress, may be used in training
- Formal checks (syntax, label inventory)
- Double/triple annotations of parts of the corpus:
  - inter-labeler agreement
  - intra-labeler agreement (over time)
  Measures:
  1. symmetric label accuracy (Kipp, 1998)
  2. histograms of boundary deviations
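The second agreement measure named above, a histogram of boundary deviations, can be sketched in a few lines. The example assumes that two labelers segmented the same signal into the same number of segments, so that boundaries can be compared pairwise. Real double annotations usually need an alignment step first, which is not shown. The function name and the bin width are illustrative choices, and the code does not implement the symmetric label accuracy measure.

```python
from collections import Counter


def boundary_deviation_histogram(boundaries_a, boundaries_b, bin_width):
    """Histogram of boundary differences (labeler B minus labeler A).

    boundaries_a, boundaries_b: boundary positions in samples, same length
                                and same order (assumption, see text)
    bin_width: width of a histogram bin in samples
    Returns a dict mapping the lower edge of each bin to a count.
    """
    if len(boundaries_a) != len(boundaries_b):
        raise ValueError("both labelers must provide the same number of boundaries")
    histogram = Counter()
    for a, b in zip(boundaries_a, boundaries_b):
        deviation = b - a
        # floor division also puts negative deviations into the right bin,
        # e.g. -50 falls into the bin starting at -100 for bin_width 100
        histogram[(deviation // bin_width) * bin_width] += 1
    return dict(sorted(histogram.items()))


labeler_a = [1000, 2000, 3000, 4000]   # hypothetical boundary positions
labeler_b = [1010, 1950, 3000, 4250]
histogram = boundary_deviation_histogram(labeler_a, labeler_b, bin_width=100)
for lower_edge, count in histogram.items():
    print(f"[{lower_edge:5d}, {lower_edge + 100:5d}): {'#' * count}")
```

A histogram that is narrow and centred on zero indicates consistent segmentation; a shifted histogram points to a systematic difference in how the two labelers place boundaries.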

Examples
- WebCommand Transcription
    CMT: *** Label file body ***
    LBD:
    LBR: 0,149216,,,,start my computer
    LBO: 0,74608,149216,start my computer
    ELF:
- Verbmobil POS Tagging
  (figure residue: word indices 0 to 6, tag string ADJDVAFINPPERAPPRARTORDNN, canonical pronunciations)
    Q'alzo Q'aUsg@r"ECn@t h'a:b@ Q'IC Q'am dr'It@n j'u:li:

Examples
- Verbmobil S&L
    MAU: 0 799 -1 <p:>
    MAU: 800 799 0 Q
    MAU: 1600 799 0 a
    MAU: 2400 799 0 z
    MAU: 3200 799 0 o
    MAU: 4000 1599 1 aU
    MAU: 5600 479 1 s

URL
- BAS Home Page: http://www.bas.uni-muenchen.de/Bas
- SPEX Home Page: http://www.spex.nl/
- Steven Greenberg Elitist Approach: http://www.icsi.berkeley.edu/~steveng/
- MAUS
- Praat: http://www.praat.org
- HTK: http://htk.eng.cam.ac.uk/
- ANVIL: http://www.dfki.de/~kipp/anvil
- WebTranscribe: http://www.bas.uni-muenchen.de/Bas
- CLAN: http://childes.psy.cmu.edu/clan
- SAM: http://www.phon.ucl.ac.uk/resource/eurom.html
- Eudico Annotation Format (EAF), ELAN:
- BAS Partitur: .bas.uni-muenchen.de/Bas/BasFormatseng.html

Speech Annotation in Practice
Christoph Draxler
draxler@bas.uni-muenchen.de

Motivation
- You are here:
  - recordings have started
- Now you have to
  - annotate your data
  - implement quality control
  - submit data for (pre-)validation

Overview
- Annotation editors
- WebTranscribe
  - architecture
  - configuration
  - demonstration
- Discussion

Annotation editor requirements
- Tailored to the task
- Intuitive to use
- Extensible
- Scalable
- Platform independent

There is not one editor
- Different annotation tiers
  - phonetic segmentation, phonemic labelling
  - orthographic transcription
  - POS tagging, dialogue markup, syntax trees, etc.
- and formats
  - free form text, implicitly structured text, text markup
- and different types of data
  - audio, video, sensor

but the procedure is always the same
1. get signal data
2. enter or edit the annotation
3. assess the signal quality
4. perform formal checks on annotation
5. save annotation and meta-data
6. go back to square 1

Annotations in a client/server system
(figure: the server transfers a signal to the client; the client annotates the signal and sends the annotation; the server monitors progress, stores the annotation and fetches the next signal)
- Client
  - annotation editor
- Server
  - manages workflow
  - data repository: signal data, annotation, metadata, shared resources, lexicon, status files

WebTranscribe
- Tailored to the task: task-specific modules
- Intuitive to use: clean interface
- Extensible: plug-in architecture
- Scalable: any number of clients
- Platform independent: Java and any RDBMS
- zero client configuration
- localizable user interface

WebTranscribe server
- Simple web server with cgi-functionality
  - dynamic HTML pages
  - generated by scripts, e.g. perl
- Current implementation
  - Java servlets with Tomcat
  - annotations held in relational database
  - signals stored in file system

WebTranscribe client
- Signal display
  - segmentation
  - audio output
- Annotation edit panel
  - annotation field
  - editing support
- Quality assessment
- Progress controls

Client implementation
- Applet
  - browser compatibility problems
  - limited access to local resources
- Java WebStart
  - easy software distribution
  - controlled access to local resources
  - secure environment
  - independent of browser

Annotation edit panel
- Text area for annotation text
- Editing support for often-needed tasks
  - digit and string conversion
  - marker insertion

Formal consistency checks
- Annotation accepted only if formal check succeeds
- Tokenizer, parser implemented in edit panel module
- Error handling on client
- Updates of central resources immediately available to client

Configuration
- Server contains project specific package
  - server URL and signal paths
  - database access
  - mapping of database table names to annotation variables
  - type of annotation
- No client configuration necessary

WebTranscribe demo
- Download software
- Configuration
- Sample annotations
  - RVG-J signal files
  - annotations according to SpeechDat
- Discussion

Final steps
- Signal files are stored in place
- Database stores all annotations
  - export database contents
  - export metadata
- Write documentation
- Submit speech database to validation

Extending WebTranscribe
- Additional annotation tiers
  - plug-in architecture
  - graphical annotations
- Enhanced signal display
  - zoom, scroll, multiple selections
  - additional display types, e.g. sonagram

Validation and Distribution of Speech Corpora
Henk van den Heuvel
SPEX: Speech Processing Expertise Centre
CLST: Centre for Language and Speech Technology
Radboud University Nijmegen, Netherlands

SPEX: Mission statement
The mission statement of SPEX is:
1. to provide and enrich spoken language resources and concomitant tools which meet high quality standards
2. to assess spoken language resources
3. and to create and maintain expertise in these fields
SPEX aims to operate:
- for both academic and commercial organisations
- as an independent academically embedded institution

SPEX: Organisation
Employees (in chronological order):
- Lou Boves (0.0 fte)
- Henk van den Heuvel (0.6 fte)
- Eric Sanders (0.5 fte)
- Andrea Diersen (0.7 fte)
- Dorota Iskra (1.0 fte)
- Folkert de Vriend (1.0 fte)
- Micha Baum (1.0 fte)

SPEX: Activities
SPEX's main activities at present are the creation, annotation and validation of spoken language resources.
- SPEX has been selected as ELRA's primary Validation Centre for speech corpora. Further, SPEX acts as validation centre for several European projects in the SpeechDat framework.
- SPEX is also involved in the creation and/or annotation of SLR. SPEX fulfilled several tasks in the construction of the Dutch Spoken Corpus (CGN).
- Publication of results in proceedings, journals

Overview of the presentation
- Validation
  - What is SLR validation
  - Overview of validation checks
  - History of SLR validation
  - Aims of validation
  - Dimensions of validation
  - Validation flow and types
  - What can be checked automatically
  - Validation software
  - On the edge of SLR validation: phonetic lexica
  - SPEX and SLR validation
  - Validation at ELRA & LDC
- Distribution
  - Models of distribution
  - ELRA & LDC

What is SLR Validation? (1)
- Basic question: What is a "good" SLR?
  "good" is what serves its purposes
- Evaluation and Validation
- Validation of SLRs:
  1. Checking a SLR against a fixed set of require

What is SLR Validation? (2)
- Validation criteria
  - Specifications
  - Tolerance margins
- Specs & Checks have a matrimony in validation
- Validation and SLR repair are different things:
  - Diagnosis and cure
  - Dangerous to combine!


between singing and speech at the phone level. We hereby present the NUS Sung and Spoken Lyrics Corpus (NUS-48E corpus) as the first step toward a large, phonetically annotated corpus for singing voice research. The corpus is a 169-min collection of audio recordings of the sung and spoken lyrics of 48 .

(It does not make sense to collect spoken language data only from children if one is interested in an overall picture including young and old speakers.) . Niko Schenk Corpus Linguistics { Introduction 36/48. Introduction Corpus Properties, Text Digitization, Applications 1 Introduction 2 Corpus Properties, Text Digitization, Applications .

XSEDE HPC Monthly Workshop Schedule January 21 HPC Monthly Workshop: OpenMP February 19-20 HPC Monthly Workshop: Big Data March 3 HPC Monthly Workshop: OpenACC April 7-8 HPC Monthly Workshop: Big Data May 5-6 HPC Monthly Workshop: MPI June 2-5 Summer Boot Camp August 4-5 HPC Monthly Workshop: Big Data September 1-2 HPC Monthly Workshop: MPI October 6-7 HPC Monthly Workshop: Big Data

speech 1 Part 2 – Speech Therapy Speech Therapy Page updated: August 2020 This section contains information about speech therapy services and program coverage (California Code of Regulations [CCR], Title 22, Section 51309). For additional help, refer to the speech therapy billing example section in the appropriate Part 2 manual. Program Coverage

speech or audio processing system that accomplishes a simple or even a complex task—e.g., pitch detection, voiced-unvoiced detection, speech/silence classification, speech synthesis, speech recognition, speaker recognition, helium speech restoration, speech coding, MP3 audio coding, etc. Every student is also required to make a 10-minute

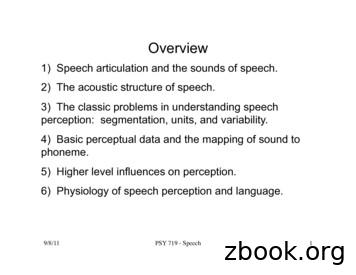

9/8/11! PSY 719 - Speech! 1! Overview 1) Speech articulation and the sounds of speech. 2) The acoustic structure of speech. 3) The classic problems in understanding speech perception: segmentation, units, and variability. 4) Basic perceptual data and the mapping of sound to phoneme. 5) Higher level influences on perception.

1 11/16/11 1 Speech Perception Chapter 13 Review session Thursday 11/17 5:30-6:30pm S249 11/16/11 2 Outline Speech stimulus / Acoustic signal Relationship between stimulus & perception Stimulus dimensions of speech perception Cognitive dimensions of speech perception Speech perception & the brain 11/16/11 3 Speech stimulus

RP-2 ISO 14001:2015 Issued: 8/15/15 DQS Inc. Revised: 5/12/17 Introduction This Environmental Management System Assessment Checklist is a tool for understanding requirements of ISO14001:2015 “Environmental management systems – Requirements with guidance for use”. The Checklist covers Clauses 4-10 requirements with probing questions about how an organization has addressed requirements and .