
Electronic Health Record Usability
Vendor Practices and Perspectives

Prepared for:
Agency for Healthcare Research and Quality
U.S. Department of Health and Human Services
540 Gaither Road
Rockville, Maryland 20850
http://www.ahrq.gov

Prepared by:
James Bell Associates
The Altarum Institute

Writers:
Cheryl McDonnell
Kristen Werner
Lauren Wendel

AHRQ Publication No. 09(10)-0091-3-EF
May 2010

This document is in the public domain and may be used and reprinted without special permission. Citation of the source is appreciated.

Suggested Citation:
McDonnell C, Werner K, Wendel L. Electronic Health Record Usability: Vendor Practices and Perspectives. AHRQ Publication No. 09(10)-0091-3-EF. Rockville, MD: Agency for Healthcare Research and Quality. May 2010.

This project was funded by the Agency for Healthcare Research and Quality (AHRQ), U.S. Department of Health and Human Services. The opinions expressed in this document are those of the authors and do not reflect the official position of AHRQ or the U.S. Department of Health and Human Services.

Expert Panel Members

Name: Affiliation

Mark Ackerman, PhD: Associate Professor, School of Information; Associate Professor, Department of Electrical Engineering and Computer Science, University of Michigan
Daniel Armijo, MHSA: Practice Area Leader, Information & Technology Strategies, Altarum Institute
Clifford Goldsmith, MD: Health Plan Strategist, Microsoft, Eastern U.S.
Lee Green, MD, MPH: Professor & Associate Chair of Information Management, Department of Family Medicine, University of Michigan; Director, Great Lakes Research Into Practice Network; Co-Director, Clinical Translation Science Program in the Michigan Institute for Clinical and Health Research (MICHR)
Michael Klinkman, MD, MS: Associate Professor, Department of Family Medicine, University of Michigan; Director of Primary Care Programs, University of Michigan Depression Center
Ross Koppel, PhD: Professor, University of Pennsylvania Sociology Department; Affiliate Faculty Member, University of Pennsylvania School of Medicine; President, Social Research Corporation
David Kreda: Independent Computer Software Consultant
Svetlana Lowry: National Institute of Standards and Technology
Donald T. Mon, PhD: Vice President of Practice Leadership, American Health Information Management Association; Co-Chair, Health Level Seven (HL7) EHR Technical Committee
Catherine Plaisant, PhD: University of Maryland, Human Computer Interaction Laboratory, Institute for Advanced Computer Studies, Associate Director
Ben Shneiderman, PhD: Professor, Department of Computer Science; Founding Director, Human-Computer Interaction Laboratory, Institute for Advanced Computer Studies, University of Maryland
Andrew Ury, MD: Chief Medical Officer, McKesson Provider Technologies
James Walker, MD: Chief Health Information Officer, Geisinger Health System
Andrew M. Wiesenthal, MD, SM: Associate Executive Director for Clinical Information Support, Kaiser Permanente Federation
Kai Zheng, PhD: Assistant Professor, University of Michigan School of Public Health; Assistant Professor, University of Michigan School of Information; Medical School’s Center for Computational Medicine and Biology (CCMB); Michigan Institute for Clinical and Health Research (MICHR)
Michael Zaroukian, MD, PhD: Professor and Chief Medical Information Officer, Michigan State University; Director of Clinical Informatics and Care Transformation, Sparrow Health System; Medical Director, EMR Project

Contents

Executive Summary
Background
Vendor Profiles
Standards in Design and Development
    End-User Involvement
    Design Standards and Best Practices
    Industry Collaboration
    Customization
Usability Testing and Evaluation
    Informal Usability Assessments
    Measurement
    Observation
    Changing Landscape
Postdeployment Monitoring and Patient Safety
    Feedback Solicitation
    Review and Response
    Patient Safety
Role of Certification in Evaluating Usability
    Current Certification Strategies
    Subjectivity
    Innovation
    Recognized Need
Conclusion
Implications
    Standards in Design and Development
    Usability Testing and Evaluation
    Postdeployment Monitoring and Patient Safety
    Role of Certification in Evaluating Usability
References

Appendixes
    Appendix I: Summary of Interviewed Vendors
    Appendix II: Description of Electronic Health Record Products

Executive Summary

One of the key factors driving the adoption and appropriate utilization of electronic health record (EHR) systems is their usability.1 However, a recent study funded by the Agency for Healthcare Research and Quality (AHRQ) identified information about current EHR vendor usability processes and practices during the different phases of product development and deployment as a key research gap.2

To address this gap and identify actionable recommendations to move the field forward, AHRQ contracted with James Bell Associates and the Altarum Institute to conduct a series of structured discussions with selected certified EHR vendors and to solicit recommendations based on these findings from a panel of multidisciplinary experts in this area.

The objectives of the project were to understand processes and practices by these vendors with regard to:

• The existence and use of standards and “best practices” in designing, developing, and deploying products.
• Testing and evaluating usability throughout the product life cycle.
• Supporting postdeployment monitoring to ensure patient safety and effective use.

In addition, the project solicited the perspectives of certified EHR vendors with regard to the role of certification in evaluating and improving usability.

The key findings from the interviews are summarized below.

• All vendors expressed a deep commitment to the development and provision of usable EHR product(s) to the market.
• Although vendors described an array of usability engineering processes and the use of end users throughout the product life cycle, practices such as formal usability testing, the use of user-centered design processes, and specific resource personnel with expertise in usability engineering are not common.
• Specific best practices and standards of design, testing, and monitoring of the usability of EHR products are not readily available. Vendors reported use of general (software) and proprietary industry guidelines and best practices to support usability. Reported perspectives on critical issues such as allowable level of customization by customers varied dramatically.
• Many vendors did not initially address potential negative impacts of their products as a priority design issue. Vendors reported a variety of formal and informal processes for identifying, tracking, and addressing patient safety issues related to the usability of their products.
• Most vendors reported that they collect, but do not share, lists of incidents related to usability as a subset of user-reported “bugs” and product-enhancement requests. While all vendors described a process, procedures to classify and report usability issues of EHR products are not standardized across the industry.
• No vendors reported placing specific contractual restrictions on disclosures by system users of patient safety incidents that were potentially related to their products.
• Disagreement exists among vendors as to the ideal method for ensuring that usability standards and best practices are evaluated and communicated across the industry as well as to customers. Many view the inclusion of usability as part of product certification as part of a larger “game” for staying competitive, but also as potentially too complex or something that will “stifle innovation” in this area.
• Because nearly all vendors view usability as their chief competitive differentiator, collaboration among vendors with regard to usability is almost nonexistent.
• To overcome competitive pressures, many vendors expressed interest in an independent body guiding the development of voluntary usability standards for EHRs. This body could build on existing models of vendor collaboration, which are currently focused predominantly on issues of interoperability.

Based on the feedback gained from the interviews and from their experience with usability best practices in health care and other industries, the project expert panel made the following recommendations:

• Encourage vendors to address key shortcomings that exist in current processes and practices related to the usability of their products. Most critical among these are lack of adherence to formal user-design processes and a lack of diversity in end users involved in the testing and evaluation process.
• Include in the design and testing process, and collect feedback from, a variety of end-user contingents throughout the product life cycle. Potentially undersampled populations include end users from nonacademic backgrounds with limited past experience with health information technology and those with disabilities.
• Support an independent body for vendor collaboration and standards development to overcome market forces that discourage collaboration, development of best practices, and standards harmonization in this area.
• Develop standards and best practices in use of customization during EHR deployment.
• Encourage formal usability testing early in the design and development phase as a best practice, and discourage dependence on postdeployment review supporting usability assessments.

• Support research and development of tools that evaluate and report EHR ease of learning, effectiveness, and satisfaction both qualitatively and quantitatively.
• Increase research and development of best practices supporting designing for patient safety.
• Design certification programs for EHR usability in a way that focuses on objective and important aspects of system usability.

Background

Encouraged by Federal leadership, significant investments in health information technology (IT) are being made across the country. While the influx of capital into the electronic health record (EHR)/health information exchange (HIE) market will undoubtedly stimulate innovation, there is the corresponding recognition that this may present an exceptional opportunity to guide that innovation in ways that benefit a significant majority of potential health IT users.

One of the key factors driving the adoption and appropriate utilization of EHR systems is their usability.1 While recognized as critical, usability has not historically received the same level of attention as software features, functions, and technical standards. A recent analysis funded by the Agency for Healthcare Research and Quality (AHRQ) found that very little systematic evidence has been gathered on the usability of EHRs in practice. Further review established a foundation of EHR user-interface design considerations, and an action agenda was proposed for the application of information design principles to the use of health IT in primary care settings.2,3

In response to these recommendations, AHRQ contracted with James Bell Associates and the Altarum Institute to evaluate current vendor-based practices for integrating usability during the entire life cycle of the product, including the design, testing, and postdeployment phases of EHR development. A selected group of EHR vendors, identified through the support of the Certification Commission for Health Information Technology (CCHIT) and AHRQ, participated in semistructured interviews.
The discussions explored current standards and practices for ensuring the usability and safety of EHR products and assessed the vendors’ perspectives on how EHR usability and information design should be certified, measured, and addressed by the government, the EHR industry, and its customers. Summary interview findings were then distributed to experts in the field to gather implications and recommendations resulting from these discussions.

Vendor Profiles

The vendors interviewed were specifically chosen to represent a wide distribution of providers of ambulatory EHR products. There was a representation of small-sized businesses (less than 100 employees), medium-sized businesses (100-500 employees), and large-sized businesses (greater than 500 employees). The number of clinician users per company varied from 1,000 to over 7,000, and revenue ranged from $1 million to over $10 billion per year. The EHR products discussed came on the market in some form in the time period from the mid-1990s to 2007. All vendors except one had developed their EHR internally from the ground up, with the remaining one internally developing major enhancements for an acquired product. Many of these products were initially designed and developed based on a founding physician’s practice and/or established clinical processes. All companies reported that they are currently engaged in ground-up development of new products and/or enhancements of their existing ambulatory products. Many enhancements of ambulatory products center on updates or improvements in usability. Examples of new developments include changes in products from client-based to Web-based EHRs; general changes to improve the overall usability and look and feel of the product; and the integration of new technologies such as patient portals, personal health records, and tablet devices.

The full list of vendors interviewed and a description of their key ambulatory EHR products are provided in Appendixes I and II. The following discussion provides a summary of the themes encountered in these interviews.

Standards in Design and Development

End-User Involvement

All vendors reported actively involving their intended end users throughout the entire design and development process. Many vendors also have a staff member with clinical experience involved in the design and development process; for some companies the clinician was a founding member of the organization. Workgroups and advisory panels are the most common sources of feedback, with some vendors utilizing a more comprehensive participatory design approach, incorporating feedback from all stakeholders throughout the design process. Vendors seek this information to develop initial product requirements, as well as to define workflows, evaluate wireframes and prototypes, and participate in initial beta testing.

“We want to engage with leadership-level partners as well as end users from all venues that may be impacted by our product.”
When identifying users for workgroups, advisory panels, or beta sites, vendors look for clinicians who have a strong interest in technology, the ability to evaluate usability, and the patience to provide regular feedback. Clinicians meeting these requirements are most often found in academic medical centers. When the design concerns an enhancement to the current product, vendors often look toward users familiar with the existing EHR to provide this feedback.

Design Standards and Best Practices

A reliance on end-user input and observation for ground-up development is seen as a requirement in the area of EHR design, where specific design standards and best practices are not yet well defined. Vendors indicated that appropriate and comprehensive standards were lacking for EHR-specific functionalities, and therefore they rely on general software design best practices to inform design, development, and usability. While these software design principles help to guide their processes, they must be adjusted to fit specific end-user needs within a health care setting. In addition to following existing general design guidelines such as Microsoft Common User Access, Windows User Interface, Nielsen Norman Group, human factors best practices, and releases from user interface (UI) and usability professional organizations, many vendors consult with Web sites, blogs, and professional organizations related to health IT to keep up to date with specific industry trends. Supplementing these outside resources, many vendors are actively developing internal documentation as their products grow and mature, with several reporting organized efforts to create internal documentation supporting product-specific standards and best practices that can be applied through future product updates and releases.

“There are no standards most of the time, and when there are standards, there is no enforcement of them. The software industry has plenty of guidelines and good best practices, but in health IT, there are none.”

Industry Collaboration

As these standards and best practices are being developed, they are not being disseminated throughout the industry. Vendors receive some information through professional organizations and conferences, but they would like to see a stronger push toward an independent body, either governmental or research based, to establish some of these standards. The independent body would be required, as all vendors reported usability as a key competitive differentiator for their product; this creates a strong disincentive for industry-wide collaboration. While all were eager to take advantage of any resources commonly applied across the industry, few were comfortable with sharing their internally developed designs or best practices for fear of losing a major component of their product’s competitiveness. Some vendors did report they collaborate informally within the health IT industry, particularly through professional societies, trade conferences, and serving on committees.

“The field is competitive so there is little sharing of best practices in the community. The industry should not look toward vendors to create these best practices. Other entities must step up and define [them] and let the industry adapt.”
For example, several vendors mentioned participation in the Electronic Health Record Association (EHRA), sponsored by the Healthcare Information and Management Systems Society (HIMSS), but noted that the focus of this group is on clinical vocabulary modeling rather than the usability of EHRs. Some interviewees expressed a desire to collaborate on standards issues that impact usability and patient safety through independent venues such as government or research agencies.

Customization

In addition to the initial design and development process, vendors commonly work with end users to customize or configure specific parts of the EHR. Vendors differed in the extent to which they allowed and facilitated customization and noted the potential for introducing errors when customization is pursued. Most customizations involve setting security rules based on roles within a clinic and the creation of document templates that fit a clinic’s specific workflow. Many vendors view this process as a critical step toward a successful implementation and try to assist users to an extent in developing these items. While some vendors track these customizations as insight for future product design, they do not view the customizations as something that can be generalized to their entire user base, as so many are context specific. The level of customization varies according to vendor since vendors have different views about the extent to which their product can or should be customized. Vendors do not routinely make changes to the code or underlying interface based on a user request; however, the level to which end users can modify templates, workflows, and other interface-related characteristics varies greatly by vendor offering.

“You cannot possibly adapt technology to everyone’s workflow. You must provide the most optimum way of doing something which [users] can adapt.”

Usability Testing and Evaluation

Informal Usability Assessments

Formal usability assessments, such as task-centered user tests, heuristic evaluations, cognitive walkthroughs, and card sorts, are not a common activity during the design and development process for the majority of vendors. Lack of time, personnel, and budget resources were cited as reasons for this absence; however, the majority expressed a desire to increase these types of formal assessments. There was a common perception among the vendors that usability assessments are expensive and time consuming to implement during the design and development phase. The level of formal usability testing appeared to vary by vendor size, with larger companies having more staff and resources dedicated to usability testing while smaller vendors relied heavily on informal methods (e.g., observations, interviews), which were more integrated into the general development process.

“Due to time and resource constraints, we do not do as much as we would like to do. It is an area in which we are looking to do more.”
Although some reported that they conduct a full gamut of both formal and informal usability assessments for some parts of the design process, most reported restricting their use of formal methods to key points in the process (e.g., during the final design phase or for evaluation of specific critical features during development).

Measurement

Functions are selected for usability testing according to several criteria: frequency of use, task criticality and complexity, customer feedback, difficult design areas, risk and liability, effects on revenue, compliance issues (e.g., Military Health System HIPAA [Health Insurance Portability and Accountability Act], and the American Recovery and Reinvestment Act) and potential impacts on patient safety. The most common or frequent tasks and tasks identified as inherently complex are specifically prioritized for usability testing. Neither benchmarks and standards for performance nor formalized measurements of these tasks are common in the industry. While some vendors do measure number of clicks and amount of time to complete a task, as well as error rates, most do not collect data on factors directly related to the usability of the product, such as ease of learning, effectiveness, and satisfaction. Many vendors reported that the amount of data collected does not allow for quantitative analysis, so instead they rely on more anecdotal and informal methods to ensure that their product works more effectively than paper-based methods and to inform their continuous improvements with upgrades and releases.

“Testing is focused more on functionality rather than usability.”
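The click counts, task times, and error rates mentioned above can be tabulated with very little tooling. The following is a minimal Python sketch of that arithmetic, not a method described by any interviewed vendor. The `TaskAttempt` record, the `summarize` function, the task names, and all sample numbers are hypothetical and serve only as an illustration.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class TaskAttempt:
    """One participant's attempt at one task (hypothetical record layout)."""
    task: str
    seconds: float    # time to complete or abandon the task
    clicks: int       # number of clicks recorded during the attempt
    errors: int       # number of observed errors during the attempt
    completed: bool   # whether the participant finished the task


def summarize(attempts):
    """Group attempts by task and compute simple descriptive measures."""
    by_task = {}
    for attempt in attempts:
        by_task.setdefault(attempt.task, []).append(attempt)

    summary = {}
    for task, rows in by_task.items():
        summary[task] = {
            "n": len(rows),
            "completion_rate": sum(r.completed for r in rows) / len(rows),
            "median_seconds": median([r.seconds for r in rows]),
            "mean_clicks": mean([r.clicks for r in rows]),
            "errors_per_attempt": sum(r.errors for r in rows) / len(rows),
        }
    return summary


# Made-up observations, for illustration only.
attempts = [
    TaskAttempt("refill prescription", 42.0, 9, 0, True),
    TaskAttempt("refill prescription", 58.0, 12, 1, True),
    TaskAttempt("refill prescription", 95.0, 20, 3, False),
    TaskAttempt("order lab test", 30.0, 6, 0, True),
    TaskAttempt("order lab test", 36.0, 8, 0, True),
]

summary = summarize(attempts)
for task, measures in summary.items():
    print(task, measures)
```

With only a handful of attempts per task, as in this sample, such figures are descriptive at best, which is consistent with the vendors' remark that the amount of data collected often does not support quantitative analysis. Measures such as ease of learning and satisfaction would need additional instruments (for example, repeated sessions or questionnaires) that this sketch does not cover.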

Observation

Observation is the “gold standard” among all vendors for learning how users interact with their EHR. These observations usually take place within the user’s own medical practice, either in person or with software such as TechSmith’s Morae.4 Vendors will occasionally solicit feedback on prototypes from user conferences in an informal lab-like setting. These observations are typically used to gather information on clinical workflows or process flows, which are incorporated into the product design, particularly if the vendor is developing a new enhancement or entire product.

“[Methods with] low time and resource efforts are the best [to gather feedback]; wherever users are present, we will gather data.”

Changing Landscape

While informal methods of usability testing seem to be common across most vendors, the landscape appears to be changing toward increasing the importance of usability as a design necessity. Multiple vendors reported the current or recent development of formal in-house observation laboratories where usability testing could be more effectively conducted. Others reported the recent completion of policies and standards directly related to integrating usability more formally into the design process, and one reported a current contract with a third-party vendor to improve usability practices. While it is yet to be seen if these changes will materialize, it appeared that most respondents recognized the value of usability testing in the design process and were taking active steps to improve their practices.

Postdeployment Monitoring and Patient Safety

Feedback Solicitation

Vendors are beginning to incorporate more user feedback into earlier stages of product design and development; however, most of this feedback comes during the postdeployment phase.
As all vendors interviewed are currently engaged in either the development of enhancements of current products or the creation of new products, the focus on incorporating feedback from intended end users at all stages of development has increased. Many of the EHRs have been on the market for over 10 years; as a result, many vendors rely heavily on this postdeployment feedback to evaluate product use and inform future product enhancements and designs. Maintaining contact with current users is of high priority to all EHR vendors interviewed and in many ways appeared to represent the most important source of product evaluation and improvement. Feedback is gathered through a variety of sources, including informal comments received by product staff, help desk support calls, training and implementation staff, sales personnel, online user communities, beta clients, advisory panels, and user conferences. With all of these avenues established, vendors appear to attempt to make it as easy as possible for current users to report potential issues as well as seek guidance from other users and the vendor alike.

“A lot of feedback and questions are often turned into enhancements, as they speak to the user experience of the product.”

Review and Response

Once the vendors receive both internal and external feedback, they organize it through a formal escalation process that ranks the severity of the issue based on factors such as number of users impacted, type of functionality involved, patient safety implications, effects on workflow, financial impact to client, regulation compliance, and the source of the problem, either implementation based or product based. In general, safety issues are given a high-priority tag. Based on this escalation process, priorities are set, resources within the organization are assigned, and timelines are created for directly addressing the reported issue. Multiple responses are possible depending on the problem. Responses can include additional user training, software updates included in the next product release, or the creation and release of immediate software patches to correct high-priority issues throughout the customer base.

“Every suggestion is not a good suggestion; some things do not help all users because not all workflows are the same.”

Patient Safety

Adoption of health IT has the potential for introducing beneficial outcomes along many dimensions. It is well recognized, however, that the actual results achieved vary from setting to setting,5 and numerous studies have reported health IT implementations that introduced unintended adverse consequences detrimental to patient care practice.6 Surprisingly, in many interviews patient safety was not initially verbalized as a priority issue. Initial comments focused on creating a useful, usable EHR product, not one that addresses potential negative impacts on patient safety. Vendors rely heavily on physicians to notice potential hazards and report these hazards to them through their initial design and development advisory panels and postdeployment feedback mechanisms.

“Physicians are very acutely aware of how technology is going to impact patient safety; that’s their focus and motivation.”

After further questioning specific to adverse events



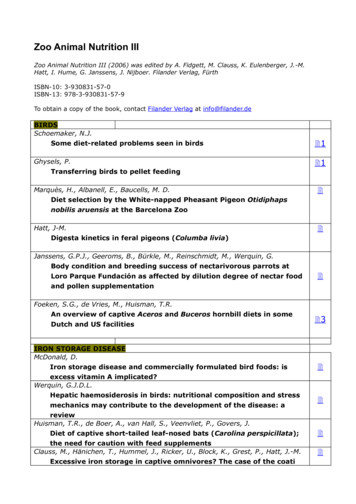

Zoo Animal Nutrition III (2006) was edited by A. Fidgett, M. Clauss, K. Eulenberger, J.-M. Hatt, I. Hume, G. Janssens, J. Nijboer. Filander Verlag, Fürth ISBN-10: 3-930831-57-0 ISBN-13: 978-3-930831-57-9 To obtain a copy of the book, contact Filander Verlag at info@filander.de BIRDS Schoemaker, N.J. Some diet-related problems seen in birds 1 Ghysels, P. Transferring birds to pellet feeding 1 .